Robotics & UAVs

Robotics and Unmanned Aerial Vehicles (UAVs)

We believe that drones and other unmanned aerial vehicles will play an important role in the future. Their applications range from humanitarian delivery and search and rescue operations in harsh environments to public safety and, more generally, sensing and interacting with the environment in ways that were never thought of before. Towards this goal, it is of paramount importance to develop autonomous drones that require no infrastructure and can “sense” and “interact” with the environment as required.

Our group works towards autonomous drones, with a special interest in developing the computation engines that would enable a drone to operate autonomously. The key challenge is that existing algorithms (e.g. navigation, 3D reconstruction of the environment, machine learning) have large computational requirements, which must be met under tight power constraints.

Learning to Fly by MySelf: A Self-Supervised CNN-based Approach for Autonomous Navigation

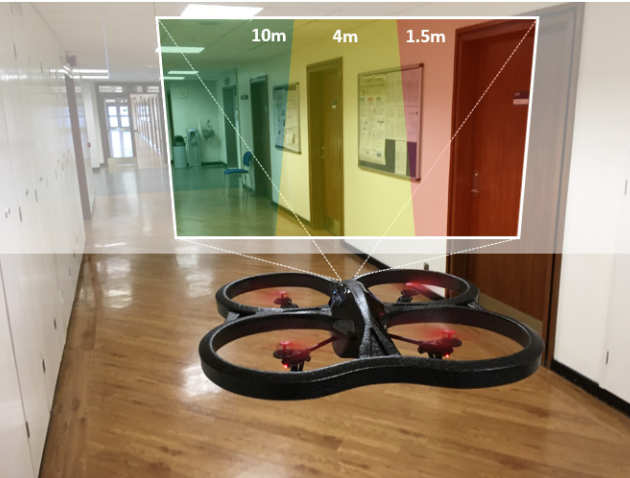

Unmanned Aerial Vehicles (UAVs) are becoming increasingly popular, helped by their wide availability. Autonomous navigation methods can act as an enabler for the safe deployment of drones in a wide range of real-world civilian applications. In this work, we introduce a self-supervised CNN-based approach for indoor robot navigation. Our method addresses the problem of real-time obstacle avoidance by employing a regression CNN that predicts the agent's distance-to-collision from the raw visual input of its on-board monocular camera. The proposed CNN is trained on our custom indoor-flight dataset, which is collected and annotated with real-distance labels in a self-supervised manner using external sensors mounted on a UAV. By simultaneously processing the current and previous input frames, the proposed CNN extracts spatio-temporal features that encapsulate both static appearance and motion information, and uses them to estimate the robot's distance to its closest obstacle in multiple directions. These predictions are used to modulate the yaw and linear velocity of the UAV, so that it navigates autonomously and avoids collisions.
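As a rough illustration of the last step, the sketch below maps predicted distances-to-collision to a velocity command. It is not the controller used in this work: the three directions (left, centre, right), the function name `steer`, the thresholds, and the sign convention for yaw are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Command:
    linear_velocity: float  # forward speed in m/s
    yaw_rate: float         # rad/s, positive = turn left (assumed convention)


def steer(d_left: float, d_center: float, d_right: float,
          max_speed: float = 1.0, max_yaw_rate: float = 1.0,
          safe_distance: float = 3.0, stop_distance: float = 0.5) -> Command:
    """Map predicted distances-to-collision (metres) to a velocity command.

    All parameters and default values are hypothetical and chosen only
    to make the example concrete.
    """
    # Forward speed grows linearly from 0 at stop_distance
    # to max_speed at safe_distance, and is clamped outside that range.
    scale = (d_center - stop_distance) / (safe_distance - stop_distance)
    scale = min(1.0, max(0.0, scale))
    linear = max_speed * scale

    # Turn towards the side with more free space.
    # balance lies in [-1, 1]; it is 0 when both sides are equally free.
    total = d_left + d_right
    balance = 0.0 if total <= 0.0 else (d_left - d_right) / total

    # Turn harder when the path ahead is blocked (scale close to 0).
    yaw = max_yaw_rate * balance * (1.0 - 0.5 * scale)
    return Command(linear, yaw)


if __name__ == "__main__":
    # Obstacle close ahead, more free space on the right.
    print(steer(d_left=1.0, d_center=0.4, d_right=3.0))
```

With these assumed defaults, the UAV stops moving forward when the centre prediction falls below `stop_distance` and keeps rotating towards the freer side until the path ahead clears.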

Experimental evaluation demonstrates that the proposed approach learns a navigation policy that achieves high accuracy on real-world indoor flights, outperforming previously proposed methods from the literature.

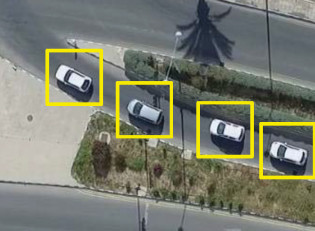

CNN-based Real-Time Object Detection on UAV Images

Unmanned Aerial Vehicles (drones) are emerging as a promising technology for both environmental and infrastructure monitoring, with a broad range of applications. Many of these applications, such as detecting vehicles for emergency response and traffic monitoring, require computer vision algorithms to analyse the information captured by an on-board camera. In this project we explore the trade-offs involved in developing real-time object detectors based on deep convolutional neural networks (CNNs) that can enable UAVs to perform vehicle detection under the resource constraints of a UAV platform.

UAV Ego-motion Estimation

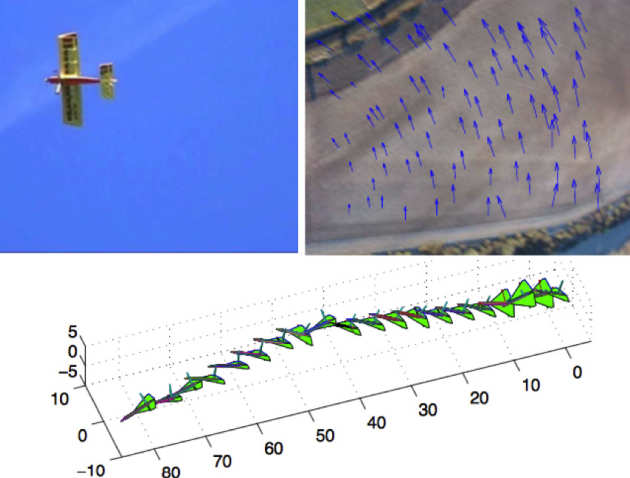

This project focuses on the development of a system that estimates the ego-motion of an unmanned plane using a single camera. Information about the translation and rotation of the plane is extracted from the video feed captured by the camera. The issues that need addressing are the complexity of such a module, the imposed real-time constraints, and robustness to a noisy video feed. Key to the successful estimation of the plane's ego-motion is the selection of a set of feature points from which the ego-motion parameters can be extracted. In our work, we demonstrate that imposing appropriate distance constraints in the feature selection process leads to a significant increase in the precision of the ego-motion parameters. We have developed a real-time FPGA-based ego-motion system which, by utilizing these distance constraints, achieves high precision in the ego-motion parameter estimation while meeting the hard real-time constraints.
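The text does not specify the exact form of the distance constraints, so the sketch below shows only one plausible reading: a greedy selection that keeps the strongest candidate feature points while enforcing a minimum pixel distance between any two selected points. The function name `select_features`, the `(x, y, score)` tuple layout, and the parameter values are hypothetical, and the sketch is plain software rather than the FPGA design described above.

```python
import math
from typing import List, Tuple

# A candidate feature point: (x, y, score), with x and y in pixels.
Feature = Tuple[float, float, float]


def select_features(candidates: List[Feature],
                    min_distance: float,
                    max_features: int) -> List[Feature]:
    """Greedily pick the strongest features that are at least
    min_distance pixels away from every feature already selected."""
    selected: List[Feature] = []
    # Visit candidates from the highest score to the lowest.
    for x, y, score in sorted(candidates, key=lambda f: f[2], reverse=True):
        if len(selected) >= max_features:
            break
        # Accept the candidate only if it is far enough from all chosen points.
        if all(math.hypot(x - sx, y - sy) >= min_distance
               for sx, sy, _ in selected):
            selected.append((x, y, score))
    return selected


if __name__ == "__main__":
    candidates = [
        (10.0, 10.0, 0.90),
        (11.0, 10.0, 0.80),   # too close to (10, 10)
        (50.0, 50.0, 0.70),   # too close to (52, 50)
        (52.0, 50.0, 0.95),
        (100.0, 20.0, 0.50),
    ]
    for feature in select_features(candidates, min_distance=5.0, max_features=3):
        print(feature)
```

Spreading the selected points across the image in this way avoids clusters of nearly identical measurements, which is one common motivation for such a constraint in motion estimation.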