A Novel Bronchoscopic Localisation Approach for Lung Cancer Diagnosis

Hamlyn Centre researchers proposed a novel generative localisation approach with uncertainty estimation using video-CT data for bronchoscopic biopsy.

Lung cancer is now the leading cause of cancer-related death worldwide, and efficient early diagnosis is in high demand. Bronchoscopic biopsy is an emerging technique for lung cancer diagnosis and staging.

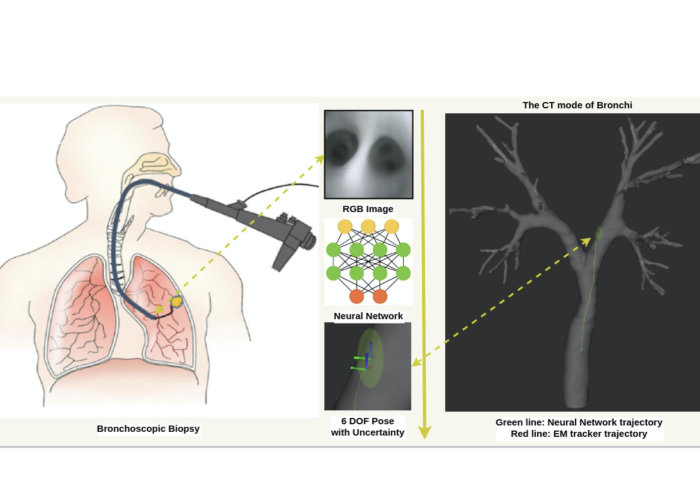

During a bronchoscopic procedure, the physician needs to estimate the position and orientation of the scope from the intra-operative 2D camera image, expressed in the coordinate frame of the pre-operative 3D computed tomography (CT) scan.
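The article does not say how the scope pose is parameterised. As a minimal sketch, assuming the pose is stored as a 4x4 homogeneous transform (a rotation plus a translation) from the camera frame to the CT frame, mapping a point seen by the camera into CT coordinates could look like this. `make_pose` and `camera_to_ct` are hypothetical helper names, and the rotation is restricted to the z-axis only to keep the example short.

```python
import math


def make_pose(yaw_rad, translation):
    """Hypothetical helper: 4x4 homogeneous transform made of a rotation about
    the z-axis by yaw_rad and a translation (tx, ty, tz) in CT coordinates."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def camera_to_ct(pose, point_cam):
    """Map a 3D point from the camera frame into CT coordinates using pose."""
    x, y, z = point_cam
    homogeneous = (x, y, z, 1.0)
    # Only the first three rows are needed; the last row is always (0, 0, 0, 1).
    return tuple(
        sum(pose[row][col] * homogeneous[col] for col in range(4))
        for row in range(3)
    )


# Scope rotated 90 degrees about z and located at (10, 20, 30) mm in CT space.
pose = make_pose(math.pi / 2, (10.0, 20.0, 30.0))
print(camera_to_ct(pose, (1.0, 0.0, 5.0)))
```

A real system would use a full 3D rotation (for example a quaternion or rotation matrix) rather than a single yaw angle; the homogeneous form is shown because it makes composing and inverting poses straightforward.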

Accurate localisation for robot-assisted endobronchial intervention

Robot-assisted endobronchial intervention requires accurate localisation based on both intra- and pre-operative data. Most existing methods achieve this by registering 2D video frames to 3D CT models, using a similarity metric defined on local features.
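To make "similarity metric" concrete, the sketch below scores candidate virtual views rendered from CT against one real video frame and keeps the best-scoring pose. It uses normalised cross-correlation (NCC) on intensities as a stand-in; the article does not say which metric existing methods use, and feature-based metrics follow the same score-and-select pattern. The pose labels and the tiny four-pixel images are made up for illustration.

```python
import math


def ncc(a, b):
    """Normalised cross-correlation of two equally sized flattened images.
    Returns a value in [-1, 1]; 1 means identical up to brightness and contrast."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0.0:
        return 0.0  # a flat image carries no structure to match against
    return sum(x * y for x, y in zip(da, db)) / denom


def register(real_frame, virtual_views):
    """Return the candidate pose whose virtual view best matches the real frame.
    virtual_views maps a hypothetical pose label to a flattened rendered image."""
    return max(virtual_views, key=lambda pose: ncc(real_frame, virtual_views[pose]))


real = [0.1, 0.8, 0.9, 0.2]
views = {
    "pose_A": [0.9, 0.1, 0.2, 0.8],  # inverted pattern: poor match
    "pose_B": [0.2, 1.6, 1.8, 0.4],  # same pattern, higher contrast
    "pose_C": [0.5, 0.5, 0.5, 0.5],  # featureless view
}
print(register(real, views))
```

The featureless view scores 0 against everything. When the airway shows little structure, many candidates score alike and the selected pose becomes unreliable, which is the weakness of registration-based tracking.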

Because video-CT registration is incremental, continuous tracking is likely to break down once registration fails, for example when too few airway features are visible.

A learning-based global localisation using data-driven deep neural networks

To tackle this issue, our researchers at the Hamlyn Centre collaborated with researchers from the Oxford Robotics Institute to formulate bronchoscopic localisation as a learning-based global localisation problem solved with data-driven deep neural networks.

Unlike conventional 2D/3D geometric registration methods, the newly proposed network consists of two generative architectures and one auxiliary learning component.

The cycle generative architecture bridges the domain gap between real bronchoscopic videos and virtual views derived from pre-operative CT data, so the approach can be trained on a large number of generated virtual images yet deployed on real images.
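The article does not give the training objective. Assuming a CycleGAN-style formulation, with a generator $G$ that translates real bronchoscopic frames $x$ into the virtual-view style and a generator $F$ that translates virtual views $y$ back, the cycle-consistency term that allows the two domains to be bridged without paired images is usually written as follows ($G$, $F$, $p_{\text{real}}$ and $p_{\text{virtual}}$ are illustrative names, not taken from the paper):

```latex
\mathcal{L}_{\text{cyc}}(G, F) =
  \mathbb{E}_{x \sim p_{\text{real}}}\big[\lVert F(G(x)) - x \rVert_1\big]
  + \mathbb{E}_{y \sim p_{\text{virtual}}}\big[\lVert G(F(y)) - y \rVert_1\big]
```

In CycleGAN this term is combined with an adversarial loss for each generator: the adversarial losses make translated images look like the target domain, while the cycle term keeps the anatomy of the original image intact through the round trip.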

The auxiliary learning architecture leverages complementary relative pose regression to constrain the search space, ensuring consistent global pose predictions.
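The article does not detail how relative pose regression constrains the global predictions. One plausible reading, sketched here under that assumption, is a consistency check: the relative motion implied by two consecutive global poses should agree with a separately regressed relative pose, and any disagreement can be penalised during training. Poses are (rotation matrix, translation) pairs in CT coordinates; `relative_from_global` and `consistency_error` are hypothetical names.

```python
import math


def matmul3(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose3(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]


def matvec3(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def relative_from_global(pose_i, pose_j):
    """Pose of frame j expressed in frame i, i.e. inv(T_i) @ T_j."""
    r_i, t_i = pose_i
    r_j, t_j = pose_j
    r_i_t = transpose3(r_i)  # the inverse of a rotation matrix is its transpose
    r_ij = matmul3(r_i_t, r_j)
    t_ij = matvec3(r_i_t, [tj - ti for tj, ti in zip(t_j, t_i)])
    return r_ij, t_ij


def consistency_error(pose_i, pose_j, predicted_relative):
    """Disagreement between two global predictions and a regressed relative pose,
    returned as (rotation angle in radians, translation distance)."""
    r_ij, t_ij = relative_from_global(pose_i, pose_j)
    r_pred, t_pred = predicted_relative
    r_err = matmul3(transpose3(r_pred), r_ij)
    trace = r_err[0][0] + r_err[1][1] + r_err[2][2]
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp rounding error
    return math.acos(cos_angle), math.dist(t_pred, t_ij)


def rot_z(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


pose_1 = (rot_z(0.0), [0.0, 0.0, 0.0])
pose_2 = (rot_z(0.1), [0.0, 0.0, 2.0])  # scope advanced 2 mm and rolled slightly
good = (rot_z(0.1), [0.0, 0.0, 2.0])    # relative prediction that agrees
bad = (rot_z(0.0), [0.0, 0.0, 5.0])     # relative prediction that disagrees
print(consistency_error(pose_1, pose_2, good))
print(consistency_error(pose_1, pose_2, bad))
```

A global prediction that jumps to an implausible location produces a large error against the relative estimate, which is one way such a term can narrow the search space.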

Most importantly, the uncertainty of each global pose is obtained through variational inference, by sampling from the learned underlying probability distribution.

In other words, the proposed method can not only predict the global pose, but also estimate the corresponding uncertainty of each prediction via variational pose generation.
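The article states that uncertainty comes from sampling the learned distribution but does not give the exact procedure. A minimal sketch, assuming a Gaussian latent distribution with a predicted mean and standard deviation and a decoder that maps each latent sample to a pose: draw many samples, decode them, and report the sample mean as the pose and the spread as its uncertainty. `toy_decoder` is a made-up linear stand-in for the trained network.

```python
import random
import statistics


def sample_poses(mu, sigma, decode, num_samples=200, seed=0):
    """Draw latent codes z = mu + sigma * eps with eps ~ N(0, 1)
    (the reparameterisation trick) and decode each one into a pose vector."""
    rng = random.Random(seed)
    poses = []
    for _ in range(num_samples):
        z = [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]
        poses.append(decode(z))
    return poses


def pose_with_uncertainty(poses):
    """Mean pose and per-component standard deviation over the sampled poses."""
    columns = list(zip(*poses))
    mean = [statistics.fmean(c) for c in columns]
    std = [statistics.pstdev(c) for c in columns]
    return mean, std


def toy_decoder(z):
    """Hypothetical stand-in for a learned decoder: maps a 2D latent code to an
    (x, y, z) scope position in millimetres."""
    return [10.0 + 2.0 * z[0], 20.0 - 1.0 * z[1], 30.0 + 0.5 * (z[0] + z[1])]


confident = pose_with_uncertainty(sample_poses([0.0, 0.0], [0.05, 0.05], toy_decoder))
uncertain = pose_with_uncertainty(sample_poses([0.0, 0.0], [1.0, 1.0], toy_decoder))
print("confident:", confident)
print("uncertain:", uncertain)
```

A wider latent distribution yields a wider spread of decoded poses, so a large standard deviation can be read as low confidence in that particular prediction.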

Experimental results confirm the improvement in localisation accuracy and show that the uncertainty estimates are reasonable.

This research was supported by the EPSRC Programme Grant “Micro-robotics for Surgery” (EP/P012779/1) and the Oxford Robotics Institute. It was published in IEEE Robotics and Automation Letters (Cheng Zhao, Mali Shen, Li Sun and Guang-Zhong Yang, "Generative Localization With Uncertainty Estimation Through Video-CT Data for Bronchoscopic Biopsy", IEEE Robotics and Automation Letters, Jan 2020).

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Erh-Ya (Asa) Tsui

Enterprise