Latest news

The new Audio Experience Design group website is now live! From now on, all updates on my research will be posted there, and not here.

The BUGG website is now live, where you can find more information about our intelligent real-time eco-acoustic monitoring device.

Read about the SONICOM project, which just started!

The latest paper of our SAFE Acoustic project has been published! You can find it here. Take a look at the new SAFE Acoustic website (acoustics.safeproject.net), where anyone can listen to the many recordings made over the past years in the rainforests of Borneo. For more information about the recording device, see the paper we recently published in Methods in Ecology and Evolution.

Here you can find a paper about the Plugsonic suite, which we hope will soon be published in the Journal of the Audio Engineering Society.

Our paper "Short-term effects of sound localization training in virtual reality" has now been published in Nature Scientific Reports and is available online here.

The paper "3D Tune-In Toolkit: An open-source library for real-time binaural spatialisation" has now been published in PLOS ONE and is available online at this link. It describes in depth the implementation of the 3D Tune-In Toolkit's binaural spatialisation algorithm, whose source code is openly available on the project's GitHub account.

How can Batman use sound to improve the heads-up display on the Batsuit to avoid sensory overload? Listen to the latest School of Batman podcast.

Areas of interest

Binaural auralization: psychophysiology of spatial hearing, binaural spatialisation algorithms, HRTF measurement and synthesis, performance of individualized and non-individualized HRTFs, HRTF adaptation

Acoustics: creation and auralization of room models, room acoustics, musical acoustics, real-time 3D audio

Hearing science: spatial hearing, speech perception, VR-based hearing assessment and training

Audio-haptics interaction design and multimodal interfaces

Others: auditory displays, sonification, eco-acoustic monitoring, sound recording, mixing and mastering techniques

Current Research Projects

PAST RESEARCH PROJECTS

3D Tune-In - Lorenzo Picinali was the Project Coordinator for this EU-H2020 ICT project on the calibration and evaluation of hearing aid devices using acoustic VR and videogames.

PLUGGY - Pluggable Social Platform for Heritage Awareness and Participation - Lorenzo Picinali was the Imperial College PI on this EU-H2020 project aimed at promoting citizens' active involvement in Europe's rich cultural heritage. Imperial's work focused on developing an application for creating sonic narratives from non-audio materials, and Imperial led the user evaluation.

HRTF selection and adaptation - in collaboration with Dr. Brian FG Katz (LIMSI-CNRS, France). The cues that human listeners use for sound localisation depend on many physical factors and are therefore highly individual. The size of the head or the shape of the ears, for example, change the way sounds are filtered when they arrive from a given direction. This is a problem for virtual audio applications in which cost or time constraints make it infeasible to measure an individual's direction-dependent filter, or Head-Related Transfer Function (HRTF). In these situations, HRTFs must be taken from an existing database measured on other individuals or on dummy heads. Fortunately, it has been shown that the brain can adapt to modified sound localisation cues, but this often takes too long to be practical for consumer, scientific or medical applications. Recent studies, however, suggest that this time can be substantially reduced using active perceptual learning methods. A virtual reality application is being developed to investigate whether gamification techniques can further speed up this process and make training more effective.
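To make the idea of a direction-dependent filter concrete, here is a minimal Python sketch (not the 3D Tune-In Toolkit's implementation) of how a non-individualised HRTF taken from a database might be applied: pick the measured direction closest to the target source, then convolve a mono signal with the left-ear and right-ear head-related impulse responses (HRIRs, the time-domain form of the HRTF). The database, its azimuth convention (90° = listener's right) and the three-tap filters are hypothetical toy values, chosen only to mimic an interaural time and level difference.

```python
# Toy sketch: nearest-direction HRIR lookup plus binaural rendering by convolution.
# TOY_HRIR_DATABASE is hypothetical: azimuth in degrees -> (left HRIR, right HRIR).
# Assumed convention: 0 = front, 90 = listener's right, 270 = listener's left.
TOY_HRIR_DATABASE = {
    0: ([1.0, 0.0, 0.0], [1.0, 0.0, 0.0]),    # frontal source: identical ears
    90: ([0.0, 0.0, 0.5], [1.0, 0.0, 0.0]),   # right source: left ear later, quieter
    270: ([1.0, 0.0, 0.0], [0.0, 0.0, 0.5]),  # left source: right ear later, quieter
}


def angular_distance(a, b):
    """Smallest angle in degrees between two azimuths, wrapping at 360."""
    d = abs(a - b) % 360
    return min(d, 360 - d)


def nearest_hrir(database, azimuth_deg):
    """Return the (left, right) HRIR pair measured closest to azimuth_deg."""
    best = min(database, key=lambda az: angular_distance(az, azimuth_deg))
    return database[best]


def convolve(signal, ir):
    """Direct-form linear convolution; output length is len(signal) + len(ir) - 1."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(ir):
            out[n + k] += x * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal through each ear's HRIR to get a stereo pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


if __name__ == "__main__":
    # A unit impulse placed at 80 degrees snaps to the 90-degree measurement.
    left, right = render_binaural([1.0], *nearest_hrir(TOY_HRIR_DATABASE, 80))
    print("left:", left)
    print("right:", right)
```

Real HRIRs are typically hundreds of samples long and are usually applied with FFT-based (partitioned) convolution and interpolation between measured directions; the direct-form loop and nearest-neighbour lookup are used here only because they are easy to read.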

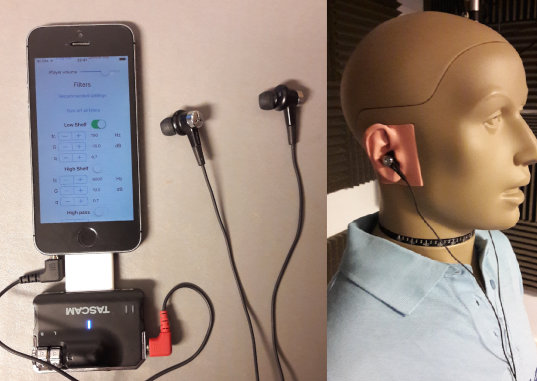

Acoustic Augmented Reality - in collaboration with Isaac Engel (currently a PhD student in the Dyson School of Design Engineering). Augmented Reality (AR) consists of adding an audio-visual digital layer on top of the real world, changing the way we perceive it through our senses. The acoustic component of AR plays an essential role in creating an immersive experience for the user: in theory, with the help of 3D audio techniques, we can blend real sounds with computer-generated ones so that the latter are indistinguishable from the former. Current work focuses on developing audio systems for Acoustic AR and studying their impact on spatial perception and user experience.

Autonomous monitoring of rainforest biodiversity via acoustic signal processing - in collaboration with Sarab Sethi, Rob Ewers and Nick Jones (Imperial College London). Sound carries substantial information about local biodiversity, as it is used for navigation and communication by a wide range of taxa. Acoustic information is now commonly used to assist point surveys of many of these species, aiding the identification of bats, grasshoppers, birds and amphibians, and even of individual animals. Furthermore, acoustic data have been used to create metrics of α and β diversity that correspond to changes in species diversity and turnover. Studies published to date, however, share two limitations: they (1) tend to record for short periods only, and (2) analyse the data more slowly than real time. Our goal is to develop and implement continuous, automated monitoring of rainforest biodiversity, and to use those data to investigate the effects of logging on tropical biodiversity.

Invisible Puzzle and ZebraX - two interactive audio-based iOS apps for visually impaired and blind individuals: Invisible Puzzle helps users understand shapes through sound, while ZebraX detects pedestrian crossings with the mobile phone's camera and provides audio guidance for aligning with and crossing them. Download the Invisible Puzzle app from the App Store!

VR Interactive Applications for the Blind - in collaboration with Brian FG Katz (Institut Jean Le Rond d'Alembert, Paris) and Tony Stockman (Queen Mary University of London) - see also the video interview

Audio Tactile Maps - See also previous versions of the ATM app.

Loss and Gain - Assisting hearing, expanding listening - in collaboration with Dr. Pete Batchelor (MTI, De Montfort University) and Dr. Ximena Alarco

The sound of proteins - in collaboration with Dr. Huseyin Seker and Charalambos Chrysostomou (CCI - De Montfort University)

Developed tools (HW & SW)

BUGG – Intelligent real-time eco-acoustic monitoring - https://www.bugg.xyz/

Safe Acoustics portal. http://acoustics.safeproject.net/

Ecosystem monitoring tools. https://sarabsethi.github.io/autonomous_ecosystem_monitoring/

The BEARS applications suite - https://www.youtube.com/watch?v=cvPReMtBGlk&t=100s

The 3D Tune-In Toolkit. A standard C++ library for binaural spatialisation, hearing loss and hearing aid emulation. https://github.com/3DTune-In/3dti_AudioToolkit/

ANAGLYPH. A binaural spatialisation tool (VST Plugin). http://anaglyph.dalembert.upmc.fr/

COMPLETE PUBLICATIONS LIST