AI sets heart imaging free

MRI is one of the most effective ways to assess heart health, yet only a small proportion of patients have access to the technique.

SmartHeart set out to change that.

Magnetic resonance imaging (MRI) is one of the most effective ways to assess the health of a heart, yet only a small proportion of patients have access to the technique. SmartHeart set out to change that, using the power of artificial intelligence (AI) to make cardiac MRI faster to acquire, easier to use, and even more powerful as a diagnostic tool.

Unlike imaging techniques such as an X-ray or ultrasound, an MRI scan does not produce an image straight away. Instead, it generates a mass of data that needs to be converted mathematically into an image, a process known as reconstruction. That image is then analysed by the doctor, and used to inform their diagnosis.
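For readers who want a feel for what reconstruction involves, here is a minimal sketch in Python. It assumes the simplest textbook case: fully sampled 2D data from a single coil, where the raw measurements (known as k-space) and the image are related by a Fourier transform. The function names and the toy phantom are illustrative only, and real cardiac reconstruction, including the methods developed in SmartHeart, is considerably more involved.

```python
import numpy as np

def simulate_kspace(image: np.ndarray) -> np.ndarray:
    """Stand-in for the scanner: map an image to centred k-space data."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(image)))

def reconstruct(kspace: np.ndarray) -> np.ndarray:
    """Convert fully sampled, centred k-space data into a magnitude image."""
    # Undo the centring, apply the inverse 2D Fourier transform, re-centre.
    image = np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(kspace)))
    return np.abs(image)

# Toy "heart": a bright rectangle on a dark background (illustrative only).
phantom = np.zeros((64, 64))
phantom[24:40, 20:44] = 1.0

kspace = simulate_kspace(phantom)   # the "mass of data" the scanner records
recovered = reconstruct(kspace)     # the image a doctor would look at
print("largest reconstruction error:", float(np.max(np.abs(recovered - phantom))))
```

With complete data the image comes back essentially unchanged. The difficulties described later in this article arise when data is missing, noisy or corrupted by motion.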

“Through SmartHeart, we wanted to automate the entire process, from getting the data to the diagnosis,” says Daniel Rueckert, principal investigator on the project and Professor of Visual Information Processing at Imperial College London. “We wanted it to become a one-stop shop, where you get the diagnosis directly from the scanner.”

After nearly six years of work, SmartHeart has achieved this goal, and gone further, generating even more clinically meaningful information from each scan. “Now you get a more comprehensive overview of each patient’s heart, and can therefore make better decisions.”

SmartHeart brings together researchers who usually work separately on different aspects of the MRI process. “The people working on the physics side of MRI engineer better scanners and better ways of measuring data, and then the data is handed over to the people who work on the AI side, and they are then able to analyse the data,” explains Professor Rueckert.

Professor Daniel Rueckert, Imperial College London

“What we have successfully done in this project is to combine the AI approaches with the imaging physics approaches, to develop an integrated approach, where the AI techniques are built into the scanner and also determine how we collect data, and how we directly interpret the data, before we even have images.”

Teaching AI to read

The main limit on patient access to cardiac MRI is the expertise needed to make sense of the images. The process is still very hands-on: an experienced radiographer or cardiologist sits in a darkened room and painstakingly draws outlines of the heart’s chambers on a series of images. From these outlines, a single figure is calculated, which represents the heart’s pumping efficiency.

“It takes me about 20 minutes to do that from scratch with a clinical scan,” says Steffen Petersen, Professor of Cardiovascular Medicine at Queen Mary University of London. “It’s fairly boring work, but I need to be an expert to do it.”

The first way that AI can help is to take this analytical task out of human hands. “Doctors know what MR images typically look like, they know how the heart typically looks and moves in these images, and they use all that knowledge when they are making measurements from those images,” explains Dr Andrew King, a Reader in Medical Image Analysis in the Biomedical Engineering Department at King’s College London. “So, the challenge for us is how to bring that knowledge into the AI.”

Codifying that knowledge and programming an AI system to carry out the analysis is a complex task that has not proven particularly successful. The alternative is machine learning: show a program designed to learn a large number of MR images, each paired with the correct expert interpretation, then ask it to repeat the process on new images.

The raw material to do this is available in resources such as the UK Biobank. “This has up to 100,000 MRI scans, along with the interpretation and diagnosis. That means we finally have enough data to train our AI networks really well,” says René Botnar, Professor of Cardiovascular Imaging at King’s College London.

And the results are impressive. “The AI tools can do in a second what it takes me 20 minutes to do,” says Professor Petersen. “You can immediately see the value of that to the NHS.”

Not only are cardiologists and other specialists freed up to concentrate on patient care, but cardiac MRI can be made available in hospitals that do not have the experienced personnel of specialist centres.

Automation in this way also has the potential to reduce variations in interpretation if patients move from one centre to another, or have a series of scans to follow the effect of a new medication.

“If your pumping function suddenly becomes better, it is important to be confident that it really has improved because of a new medication, as opposed to a variation in the analysis of the images,” says Professor Petersen.

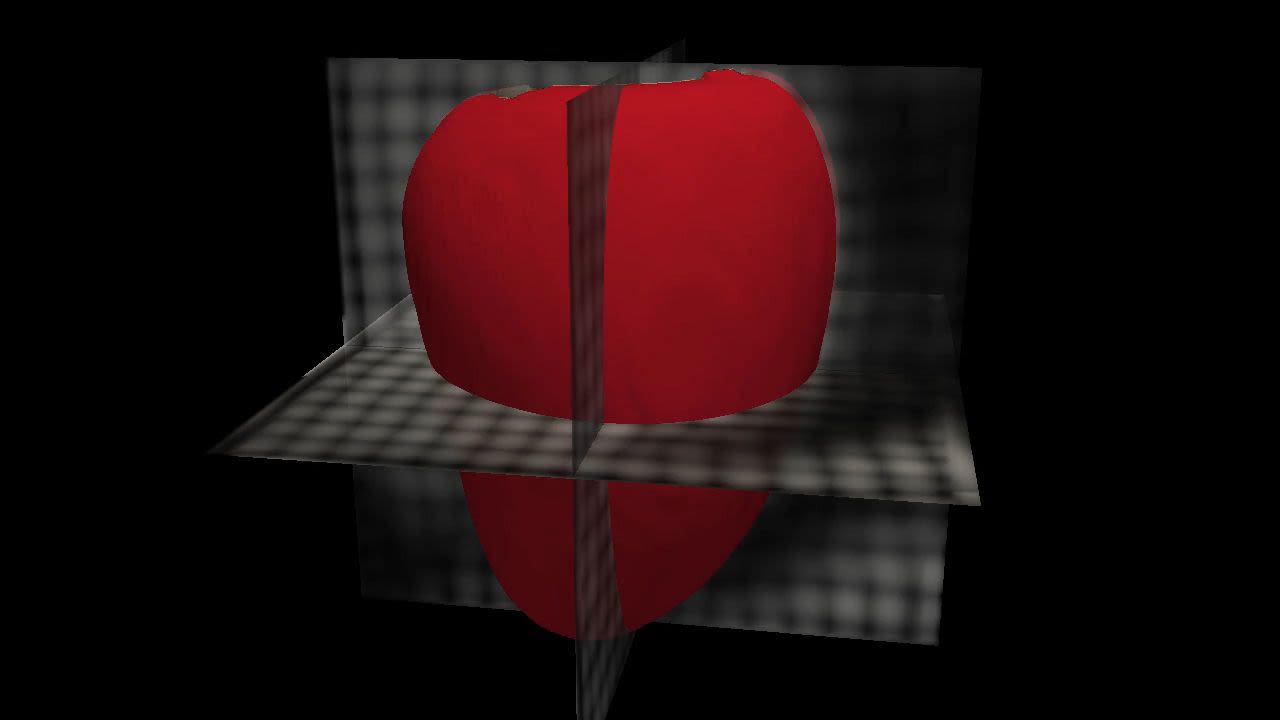

Medical images and 3D models of the heart

A moving target

AI can also be used elsewhere in the MRI process, speeding it up and making it a better experience for patients. At the beginning of the process, the main difficulty is that the heart is never still: it moves with each heartbeat and in time with the patient’s breathing. This makes capturing image data and interpreting it correctly extremely challenging.

The conventional approach is to ask the patient to hold their breath, and then to take a series of scans to make sure of a good image. “Usually, when you do a cardiac MRI exam, you have to do 50 or 60 breath holds,” says Professor Botnar. “That is easy when you are young, but a 70-year-old patient with a heart condition may have problems doing that for half an hour or more.”

Professor René Botnar, King's College London

So, Professor Botnar and Professor Claudia Prieto have been working on methods that allow the patient to breathe freely during the scan.

“What we do is acquire a low-resolution image of the heart, from the imaging data itself. We acquire such an image for every heartbeat, and we can measure the motion in the foot to head and left to right directions, and use this information to correct for respiratory motion.”

This can be done through conventional image registration but is much faster if an AI program is taught to make the correction. “Instead of taking a minute to estimate the motion, now it takes two seconds.”
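To give a sense of what conventional registration does here, the sketch below estimates how far a low-resolution image has shifted in the foot-to-head and left-to-right directions, then shifts it back. It uses phase correlation, a standard registration technique chosen purely for illustration. It is not the SmartHeart method, and the function name, the whole-pixel circular shifts and the toy image are all assumptions.

```python
import numpy as np

def estimate_shift(reference: np.ndarray, moving: np.ndarray) -> tuple:
    """Estimate the whole-pixel (dy, dx) shift taking `reference` to `moving`."""
    cross = np.fft.fft2(moving) * np.conj(np.fft.fft2(reference))
    cross /= np.abs(cross) + 1e-12           # keep the phase, drop the magnitude
    correlation = np.fft.ifft2(cross).real   # peaks at the displacement
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)
    # Peaks past the halfway point correspond to negative shifts.
    return tuple(int(p) if p <= n // 2 else int(p) - n
                 for p, n in zip(peak, correlation.shape))

# Toy low-resolution "heart": a smooth blob (illustrative only).
yy, xx = np.mgrid[0:64, 0:64]
reference = np.exp(-((yy - 30) ** 2 + (xx - 34) ** 2) / (2 * 6.0 ** 2))

# Pretend breathing moved the heart 3 pixels one way and 5 pixels the other.
moved = np.roll(reference, shift=(3, -5), axis=(0, 1))

dy, dx = estimate_shift(reference, moved)
corrected = np.roll(moved, shift=(-dy, -dx), axis=(0, 1))
print("estimated shift:", (dy, dx))
```

A learned model replaces the estimation step with a single pass through a trained network, which is where the speed-up described above comes from.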

It is even possible to do the motion estimation from the raw data, which eliminates problems with image artefacts: features created by the reconstruction process that bear no relation to the structure of the heart.

“The faster you scan, the fewer data you have to reconstruct an image, and there are more and more artefacts,” says Professor Botnar. “And if you have an image with artefacts, that also means that your motion correction may have artefacts. If you work directly from the raw data, you avoid this problem, and that means you can go much faster.”

Professor René Botnar in the MRI facility at St Thomas's Hospital

Cleaning the image

Artefacts and background noise also need to be taken into account when looking at the images, and AI has already been used to make this task easier. But SmartHeart has gone further.

“Normally we worked with ready-made images, and if they had some noise or artefacts, we took them as a given and filtered the noise out, just to make things work,” says Julia Schnabel, Professor in Computational Imaging at King’s College London. “But now we have thought backwards about where all these artefacts and poor, degraded images originate, and what the causes are.”

What she and Dr King found is that some of the artefacts can be modelled and then used to train the AI models to recognise the good quality image hiding behind the bad. “We can synthetically degrade images, then show the AI pairs of good and bad versions of the same image, and then the algorithm learns how to go from one to the other.”

The next step is to go back even further, before reconstruction of the image. “We can also train the AI to go from raw sensor data to images, so it learns the reconstruction method,” says Professor Schnabel. “You can also combine all these things. You can give the AI degraded raw sensor data, or heavily under-sampled sensor data, basically data that is not complete, and which is acquired very quickly.”
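The idea of synthetically degrading an image to create training pairs can be sketched in a few lines. The example below throws away most of the rows of raw data, as a very fast scan would, and reconstructs what is left. The sampling pattern, the parameter names and the toy image are assumptions made for illustration, and no neural network is trained here; the sketch only produces the "bad" and "good" versions that such a network would learn from.

```python
import numpy as np

def undersample(image: np.ndarray, keep_every: int = 4, centre_lines: int = 8):
    """Return a degraded image built from a subset of k-space rows, plus the row mask."""
    kspace = np.fft.fftshift(np.fft.fft2(image))   # centred raw data
    rows = image.shape[0]
    mask = np.zeros(rows, dtype=bool)
    mask[::keep_every] = True                      # keep every n-th row
    mid = rows // 2
    mask[mid - centre_lines // 2: mid + centre_lines // 2] = True  # keep the centre
    partial = kspace * mask[:, None]               # discarded rows become zeros
    degraded = np.abs(np.fft.ifft2(np.fft.ifftshift(partial)))
    return degraded, mask

# Toy clean image (illustrative only).
clean = np.zeros((64, 64))
clean[20:44, 16:48] = 1.0

degraded, mask = undersample(clean, keep_every=4, centre_lines=8)
training_pair = (degraded, clean)   # (network input, target it should recover)
print(f"kept {int(mask.sum())} of {mask.size} rows of raw data")
```

The degraded image shows ghost-like copies of the rectangle, a typical under-sampling artefact. Repeating this over many clean images would give the pairs of good and bad versions that Professor Schnabel describes.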

Professor Julia Schnabel, King's College London and Technical University of Munich

This makes the whole MRI process faster and more efficient. “By linking together the acquisition, the reconstruction and the downstream analysis, we’ve shown that we don’t really need to have the best quality image in order to get the important information,” says Dr King.

“You can use that finding to optimise the acquisition process, to just acquire the data that you need to make the measurements that you need. That doesn’t mean that you don’t end up with a diagnostic quality image – doctors still need that image – but we have found that a lot of the information that was being acquired before was not necessary for the image to be of diagnostic value or to acquire the numbers that they need.”

More information about the heart

These numbers represent additional diagnostic information that can be deduced from the MRI scan. “Traditionally doctors acquire these amazingly complex images and boil it down to one number, the left ventricular ejection fraction, which indicates how efficient your heart is as a pump,” Dr King explains. “They don’t use that single number because they think it’s enough, they use it because it would take far too much work to generate all the other numbers they would like to see.”
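The arithmetic behind that single number is simple once the outlines exist. Ejection fraction is the share of blood in the ventricle at its fullest (end-diastolic volume) that is pumped out by the time it is at its emptiest (end-systolic volume). The sketch below assumes the volumes are obtained by adding up outlined areas across image slices, a common stack-of-slices approach. The masks, pixel size and slice thickness are made-up values, and the function names are illustrative.

```python
import numpy as np

def chamber_volume_ml(masks: np.ndarray, pixel_area_mm2: float,
                      slice_thickness_mm: float) -> float:
    """Volume enclosed by per-slice outlines, given as a stack of boolean masks."""
    outlined_pixels = int(np.count_nonzero(masks))
    return outlined_pixels * pixel_area_mm2 * slice_thickness_mm / 1000.0  # mm^3 to ml

def ejection_fraction_percent(edv_ml: float, esv_ml: float) -> float:
    """Percentage of the end-diastolic volume ejected with each beat."""
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    return 100.0 * (edv_ml - esv_ml) / edv_ml

# Made-up outlines: 10 slices, with the chamber larger when full than when empty.
end_diastole = np.zeros((10, 64, 64), dtype=bool)
end_diastole[:, 12:52, 12:52] = True
end_systole = np.zeros((10, 64, 64), dtype=bool)
end_systole[:, 18:46, 18:46] = True

edv = chamber_volume_ml(end_diastole, pixel_area_mm2=1.0, slice_thickness_mm=8.0)
esv = chamber_volume_ml(end_systole, pixel_area_mm2=1.0, slice_thickness_mm=8.0)
ef = ejection_fraction_percent(edv, esv)
print(f"EDV {edv:.1f} ml, ESV {esv:.1f} ml, ejection fraction {ef:.0f}%")
```

With these made-up outlines the ejection fraction works out at 51%. The slow part in practice is drawing the outlines, not the calculation, which is why automating the outlining saves so much time.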

As well as automating the estimation of that number, SmartHeart has used AI to produce a range of other functional biomarkers that describe different aspects of how well the heart is working. “That has the potential to lead to a lot of future clinical research to improve patient treatments, and I think that’s one of the most exciting things that has come out of the project.”

This AI has been developed into a web-based app that doctors are already using. “They simply load in the images, and the app will analyse those images and produce an automated clinical report,” Dr King says.

“In 10 seconds they can have the left ventricular ejection fraction and about 50 other numbers, which they can use to get a much richer picture of how well the heart is working. And that works robustly across a range of different scanners.”

A short-axis basal view of the heart

This system has also been licensed to a company building a software platform for clinical trials, where it will be used to monitor changes in heart health in response to new drug treatments.

A further result of SmartHeart is that the scanner produces a quantitative map of the heart, in addition to the high-resolution image. This tells doctors about conditions such as fibrosis and oedema in certain areas of the heart, and allows progress in these conditions to be followed over time.

“If you have numbers, you can compare them between exams and see much more clearly if a treatment has worked or not,” says Professor Botnar.

From the lab to the clinic

The image analysis app for doctors is the first aspect of SmartHeart to be used in patient care. Other developments, such as approaches to faster data acquisition and quality assurance protocols, are almost ready for clinical deployment. “We haven’t done a clinical study yet, but we have used real clinical data and evaluated it against our own clinicians, so we are getting more and more confident that this could be a product,” says Professor Schnabel.

An important part of this translation process is collaboration with the MRI scanner manufacturers. The researchers at King’s have worked closely with Siemens on a pre-product, with a full-blown product to follow at the end of 2022 or the beginning of 2023. “That would be a smart cardiac scanner that is 3D, free-breathing, and corrected in every respect,” says Professor Botnar. “It would be like a modern auto-focus camera, where you don’t have to worry much about anything.”

Another approach that has emerged from SmartHeart is being developed with Philips. “Instead of maybe 10 very nice-looking images, from which you get your quantitative image, you acquire 1,000 pretty ugly looking images,” says Professor Botnar. “These just look like noise, but what we can do is look at every pixel, and follow the signal evolution through all 1,000 images, and that produces a fingerprint [for the condition of the heart].”

This fingerprint can then be compared to a dictionary built from simulations that indicate factors such as iron content, fibrosis, and alignment of the heart cells. This approach was first developed at Case Western Reserve University in the USA, and SmartHeart is only the second group to apply it to cardiac imaging.
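The matching step can be sketched as a lookup: normalise the measured fingerprint, compare it with every simulated entry in the dictionary, and report the tissue property of the closest one. The example below is a toy under strong assumptions. It uses a single hypothetical property (a relaxation time, here called T1) and a simple recovery curve in place of a full physics simulation, and none of the names or numbers come from the SmartHeart work.

```python
import numpy as np

def simulate_signal(t1_ms: np.ndarray, times_ms: np.ndarray) -> np.ndarray:
    """Toy signal evolution for each T1 value (assumed model, not a real simulation)."""
    return 1.0 - np.exp(-times_ms[None, :] / t1_ms[:, None])

def build_dictionary(t1_grid_ms: np.ndarray, times_ms: np.ndarray) -> np.ndarray:
    """One unit-length simulated fingerprint per candidate T1 value."""
    atoms = simulate_signal(t1_grid_ms, times_ms)
    return atoms / np.linalg.norm(atoms, axis=1, keepdims=True)

def match(signals: np.ndarray, dictionary: np.ndarray,
          t1_grid_ms: np.ndarray) -> np.ndarray:
    """For each pixel's signal, return the T1 of the best-matching dictionary entry."""
    signals = signals / np.linalg.norm(signals, axis=1, keepdims=True)
    scores = signals @ dictionary.T          # similarity to every fingerprint
    return t1_grid_ms[np.argmax(scores, axis=1)]

times_ms = np.linspace(10.0, 3000.0, 1000)   # one sample per acquired image
t1_grid_ms = np.arange(200.0, 2001.0, 50.0)  # candidate values in the dictionary
dictionary = build_dictionary(t1_grid_ms, times_ms)

# Two pixels with different "true" values, arbitrary brightness and added noise.
rng = np.random.default_rng(0)
true_t1 = np.array([800.0, 1200.0])
measured = 3.0 * simulate_signal(true_t1, times_ms)
measured = measured + rng.normal(0.0, 0.05, size=measured.shape)

print("matched T1 values (ms):", match(measured, dictionary, t1_grid_ms))
```

Because each pixel is followed through all 1,000 samples, the match depends on the overall shape of the signal, not on any single noisy image.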

Dr Andrew King and a colleague discuss scan images

Confidence and quality

The logical conclusion of SmartHeart is that the image in cardiac MRI might be altogether dispensable, with the diagnosis coming straight from the AI. Professor Rueckert thinks this is possible, but not yet desirable. The images still play an important role. “We want to make sure that the AI approaches we use generate images that are plausible,” he says.

The images allow experienced clinicians to check the results if they have concerns, and this is supported by a quality control system developed at Imperial that flags up any results where the AI itself lacks confidence. “That’s extremely helpful,” says Professor Petersen. “At Barts Hospital, we do 30 scans a day, so if I only need to look at the ones where the algorithm tells you it is slightly uncertain, while the others can be trusted, that is much better than having to check all the automated analyses.”

Another issue, which requires further investigation, is the possibility that the AI system may contain hidden biases because of the data with which it has been trained. This issue has already surfaced with facial recognition software, which works better with some ethnicities than others, and similar effects have been found with cardiac MRI.

“That might seem surprising, because you would think that an MRI scan can’t ‘see’ somebody’s race or ethnicity,” says Dr King, “but we have found that if you train an AI model on MRI scans from one ethnic group, it doesn’t work so well on other ethnic groups, for example. So that is a whole new area to consider in terms of making AI fair and equitable, so that it benefits everyone equally.”

Dr Andrew King, King's College London

Beyond SmartHeart

SmartHeart has achieved a lot in six years, and also generated some exciting prospects for the future. For Professor Botnar, the important next step is to get the prototype scanners out into as many hospitals as possible. “I usually want to know: when does it fail? So, let’s give people the opportunity to test it, and give us feedback on what works and what doesn’t work.” He also thinks there is scope to broaden the numbers collected from the MRI, and from complementary techniques, to say more about how a patient’s condition may develop. “I think that’s the next thing to focus on: not only diagnosis, but prediction.”

Professor Rueckert thinks there is scope to apply the lessons of SmartHeart to imaging other organs that move within the body, such as the liver and the pancreas. “The heart is a good starting point, because it is perhaps the most challenging organ to image, but a lot of these techniques can be used in other organs and for other diseases, for example for characterising cancer.”

Professor Schnabel agrees about the potential of looking at other organs, and also thinks it would be interesting to apply this approach to other imaging methods, such as computed tomography, X-rays or ultrasound. “Intelligent imaging, and the principle that you use AI not just as an afterthought but to produce better images in the first place, or interpret them before you even visualise them, opens up so many options for us.”

For Dr King, a fruitful way forward would be to use AI to further inform medical decision-making. “We’ve provided lots of information to doctors, but we haven’t addressed how to help them make better decisions. In order to use AI for that kind of decision support, you need an AI that can talk to doctors in the language they use when they talk to each other. If we could address that decision support role for AI, that would have a lot of added value.”

Finally, Professor Petersen would like to see AI used to spread the expertise necessary to offer cardiac MRI more widely. “There are ways AI can help with training on how to acquire images, interpret images, analyse images and write reports, to standards that clinicians could then be signed off as sufficiently qualified to run their own centres,” he says. “Some face-to-face experience is always going to be needed in that kind of training, but this could be in a much more remote and standardised way, that could provide opportunities to people around the world.”

But more than anything, he would like to see the results of SmartHeart put to use. “As a clinician, I would love to see those tools, and tools that are still being developed, deployed in clinical practice.”

Learn more about SmartHeart

SmartHeart was made possible by a Programme Grant from the Engineering and Physical Sciences Research Council. These grants provide flexible funding to world-leading research groups to address major research challenges, often working across disciplinary boundaries.

The SmartHeart project brought together research teams working on:

- AI methodology development at Imperial College London

- AI, MR imaging and the physics of data collection at King’s College London

- the clinical application of cardiac MRI at Queen Mary University of London

- spatio-temporal data handling at the University of Oxford.