New machine learning tool converts 2D material images into 3D structures

A new algorithm developed at Imperial College London can convert 2D images of composite materials into 3D structures.

The machine learning algorithm could help materials scientists and manufacturers study composite materials, such as battery electrodes and aircraft parts, in 3D and improve how they are designed and produced.


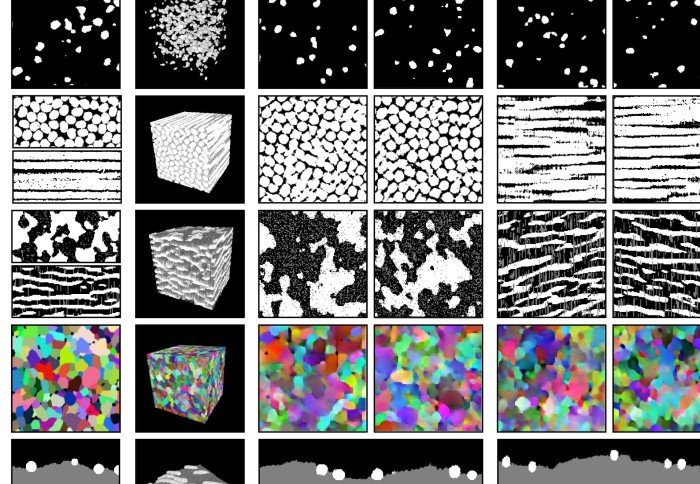

Using data from 2D cross-sections of composite materials, which are made by combining different materials with distinct physical and chemical properties, the algorithm can expand the dimensions of cross-sections to convert them into 3D computerised models. This allows scientists to study the different materials, or ‘phases’, of a composite and how they fit together.
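In practice, a segmented cross-section is usually stored as a grid in which every pixel carries an integer label for the phase it belongs to. The minimal sketch below assumes a made-up three-phase labelling (0 = pore, 1 = active material, 2 = binder) purely for illustration. It computes the fraction of an image, or of a 3D volume, occupied by each phase, which is one of the simplest statistics a generated 3D structure would be expected to share with the 2D data it was learned from.

```python
from collections import Counter


def phase_fractions(image):
    """Return {phase_label: fraction of pixels} for a 2D or 3D labelled grid.

    `image` is a nested list of integer phase labels. The labelling scheme
    (0 = pore, 1 = active material, 2 = binder) is a hypothetical example.
    """
    counts = Counter()

    def walk(node):
        # Recurse through nested lists until we reach individual pixel labels.
        if isinstance(node, list):
            for child in node:
                walk(child)
        else:
            counts[node] += 1

    walk(image)
    total = sum(counts.values())
    # Report fractions in ascending order of phase label.
    return {label: n / total for label, n in sorted(counts.items())}


# A tiny hypothetical 4x4 cross-section containing three phases.
slice_2d = [
    [0, 1, 1, 2],
    [1, 1, 0, 2],
    [1, 1, 1, 0],
    [2, 1, 1, 1],
]
print(phase_fractions(slice_2d))  # {0: 0.1875, 1: 0.625, 2: 0.1875}
```

Because the function walks arbitrarily nested lists, the same call works unchanged on a 3D volume, so the phase fractions of a 2D cross-section and of a generated 3D structure can be compared directly.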

The tool learns what 2D cross-sections of a composite look like and extends them into three dimensions, so that the phases can be studied in 3D. In future, it could be used to optimise the design of these materials by allowing scientists and manufacturers to study the internal architecture of the composites.

The researchers found their technique to be cheaper and faster than building 3D computer models by imaging physical samples in 3D. It was also better at distinguishing the different phases within a material, which is difficult to do with current 3D imaging techniques.

The findings are published in Nature Machine Intelligence.

Lead author of the paper Steve Kench, PhD student in the Tools for Learning, Design and Research (TLDR) group at Imperial’s Dyson School of Design Engineering, said: “Combining materials as composites allows you to take advantage of the best properties of each component, but studying them in detail can be challenging as the arrangement of the materials strongly affects the performance. Our algorithm allows researchers to take their 2D image data and generate 3D structures with all the same properties, which allows them to perform more realistic simulations.”

Studying, designing, and manufacturing composite materials in three dimensions is currently challenging. 2D images are cheap to obtain, offer high resolution and wide fields of view, and are very good at telling the different materials apart. 3D imaging techniques, on the other hand, are often expensive and comparatively blurry, and their low resolution makes it difficult to identify the different phases within a composite.

For example, researchers are currently unable to use 3D imaging techniques to tell apart the materials within battery electrodes, which consist of ceramic material, carbon-polymer binders, and pores for the liquid phase.


In this study, the researchers used a machine learning technique called ‘deep convolutional generative adversarial networks’ (DC-GANs), a variant of the generative adversarial networks (GANs) first introduced in 2014.

This approach, in which two neural networks are made to compete against each other, is at the heart of the tool for converting 2D to 3D. One network (the discriminator) is shown the real 2D images and learns to recognise them, while the other (the generator) tries to make “fake” 3D versions. If the discriminator looks at all the 2D slices through a “fake” 3D version and judges them to be “real”, then the generated structure can be used to simulate any material property of interest.
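The step that lets a network trained only on 2D images judge a 3D output is slicing: each generated volume is cut into 2D planes, and those planes are what the discriminator sees. The minimal sketch below shows only that slicing step, assuming slices are taken along all three axes of a small volume stored as nested Python lists indexed as `volume[z][y][x]`. The function name and data layout are illustrative assumptions, and the neural networks and the adversarial training loop are deliberately left out.

```python
def slices_along_axes(volume):
    """Cut a 3D volume (indexed volume[z][y][x]) into 2D slices along each axis.

    Returns a dict mapping axis name to a list of 2D slices (nested lists).
    In a GAN-based 2D-to-3D setup, slices like these would be passed to the
    2D discriminator; this sketch covers only the slicing itself.
    """
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    # Planes perpendicular to z: each slice is a (y, x) image.
    z_slices = [[row[:] for row in volume[z]] for z in range(nz)]
    # Planes perpendicular to y: each slice is a (z, x) image.
    y_slices = [[volume[z][y][:] for z in range(nz)] for y in range(ny)]
    # Planes perpendicular to x: each slice is a (z, y) image.
    x_slices = [
        [[volume[z][y][x] for y in range(ny)] for z in range(nz)]
        for x in range(nx)
    ]
    return {"z": z_slices, "y": y_slices, "x": x_slices}


# A tiny hypothetical volume with nz=2, ny=2, nx=3 (values are just indices).
volume = [
    [[0, 1, 2], [3, 4, 5]],
    [[6, 7, 8], [9, 10, 11]],
]
slices = slices_along_axes(volume)
print({axis: len(s) for axis, s in slices.items()})  # {'z': 2, 'y': 2, 'x': 3}
print(slices["x"][0])  # [[0, 3], [6, 9]]
```

Because every slice, whatever its orientation, is compared against the same kind of 2D training image, this sketch implicitly assumes the material looks statistically similar from every direction; a strongly anisotropic material would presumably need a different 2D training image for each orientation.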

The same approach also allows researchers to run simulations using different materials and compositions much faster than was previously possible, which will accelerate the search for better composites.

Co-author Dr Sam Cooper, who leads the TLDR group at the Dyson School of Design Engineering, said: “The performance of many devices that contain composite materials, such as batteries, is closely tied to the 3D arrangement of their components at the microscale. However, 3D imaging these materials in enough detail can be painstaking. We hope that our new machine learning tool will empower the materials design community by getting rid of the dependence on expensive 3D imaging machines in many scenarios.”

This work was funded by the EPSRC Faraday Institution Multi-Scale Modelling project.

“Generating three-dimensional structures from a two-dimensional slice with generative adversarial network-based dimensionality expansion” by Steve Kench and Samuel J. Cooper, published 5 April 2021 in Nature Machine Intelligence.

Images: Steve Kench, Imperial College London


Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter: Caroline Brogan, Communications Division