Ranked as the second most innovative university in Europe, and in the top ten in the world, Imperial College London is home to world-leading academic researchers. At Imperial, we value research that applies academic curiosity and rigour to real-world business challenges, bringing tangible benefits to society.

Among the most effective ways for businesses to benefit from our world-leading academic expertise are to partner with us in collaborative research and to license our technologies.

To see how research partnerships are helping to meet industry partners' challenges, you can browse the case studies hosted here. To discuss what sort of collaboration or licensing opportunities would best meet your needs, please contact a member of the industrial liaison team.

Precision Agriculture

Introduction to the purpose of the research

One aspect of the DCE programme’s Grand Challenge of ‘Resilient and robust infrastructure’ is to develop adaptive machine learning techniques for changing environments. This area of work seeks to combine reliable sensing technologies with online data analytics to provide continuous learning, prediction and control in changing environments to guide the design, construction and maintenance of critical infrastructures. The development of such end-to-end sensor systems and algorithms for measuring physical infrastructure has applications in a wide range of real-world situations, including precision agriculture.

What is Precision Agriculture, and how does it serve as a solution?

The ultimate goal of precision agriculture is to optimise the sustainability and quality of agricultural products and processes. It requires that farmers use sensor networks to become intimately acquainted with the state of their crops and soil at any given time and location in their fields. By understanding what is happening in sufficient detail, farmers can tailor inputs such as fertiliser and water, and can quickly identify disease outbreaks. In this way, inputs are optimised, crop yields are maximised and, through more judicious use of pesticides, harm to the soil and biodiversity is minimised. Professor McCann, Dr. Po-Yu Chen and the team have designed a unique system able to transform a passive sensing network into a control network, a crucial innovation for precision farming.

How did the project start and what did the researchers do?

To develop and demonstrate such a system on a smaller scale, Professor McCann and Dr. Chen approached Ridgeview, a vineyard based in the South Downs in Sussex, with a proposal to develop an end-to-end monitoring system for their vineyards. The work built on a previous EPSRC-funded project with Ridgeview to monitor energy, temperature and water use within the winery building, and the vineyard provided a small, manageable system in which the researchers could develop this technology further. The researchers placed sensors every 50 metres throughout the wine estate and within the winery building. This set-up makes it possible to measure how the air around the vineyard changes in terms of humidity and temperature, and to measure soil moisture content at both the micro and macro levels across the fields of vines, as well as water and energy use across the areas.

What happened?

The initial goal was to model, in as close to real-time as possible, the state of the fields. These data are then fed into a life-cycle analysis, delivered in collaboration with researchers from the University of Exeter. This work could eventually allow Ridgeview to understand small changes in different vineyard areas, helping them identify potential disease hotspots and understand localised conditions or trends in microclimates across the vineyard. Not all the work on the project can take place in a working vineyard, so much of the project is being done in the laboratory. A research challenge is how to make sense of data trickling in from potentially hundreds of sensors, at different times. The trick is to process the data so that they remain robust, even if parts of the network degrade – as they always will “in the wild” – so an adequate prediction can be made of the current state of the field.
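
The idea of keeping an estimate usable while readings arrive at different times and some sensors fall silent can be illustrated with a minimal Python sketch. It is not the project's actual algorithm, which is not described here. It assumes a simple age-weighted average, and the `Reading` type, the `half_life` and `max_age` parameters and the function name are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Reading:
    sensor_id: str
    timestamp: float  # seconds on a shared clock (assumed)
    value: float      # e.g. soil moisture, arbitrary units


def estimate_state(readings: Iterable[Reading], now: float,
                   half_life: float = 600.0,
                   max_age: float = 3600.0) -> Optional[float]:
    """Age-weighted mean of the most recent reading from each sensor.

    Hypothetical illustration only: older readings count for less, and
    sensors silent for longer than max_age are treated as failed.
    """
    latest = {}
    for r in readings:
        if r.timestamp > now:
            continue  # ignore readings "from the future" (clock skew)
        prev = latest.get(r.sensor_id)
        if prev is None or r.timestamp > prev.timestamp:
            latest[r.sensor_id] = r

    weighted_sum = 0.0
    total_weight = 0.0
    for r in latest.values():
        age = now - r.timestamp
        if age > max_age:
            continue  # degraded part of the network: drop it
        weight = 0.5 ** (age / half_life)  # weight halves every half_life seconds
        weighted_sum += weight * r.value
        total_weight += weight

    # None signals that no sufficiently fresh data is available.
    return weighted_sum / total_weight if total_weight > 0 else None
```

With this weighting, a single failed sensor simply drops out of the average rather than corrupting it, at the cost of a coarser estimate.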

Future plans for the project

The development of sensor networks to read the state of a farm at high resolution will facilitate sustainable practices. However, for agriculture more broadly, the sensor technology is only half of the challenge. The other half is to exploit the information gathered by a sensor network when making practical decisions, such as using actuators to add fertiliser or water in the appropriate amounts and locations. The longer-term aims of the work would be to create a farm that can not only feel itself but also feed itself.

Improved understanding and reliability of such systems increase their potential for scaling in applications beyond agriculture and farming to systems that are more complex and more critical, such as water networks. With the successful application of an end-to-end sensor and control network, water pipe networks could be mostly self-regulating, improving resilience and their ability to mitigate failures and leaks and be better matched to demand.

Related people

Data-Centric Engineering Strategic Leader

Co-Organiser of Sustainable Infrastructure Project

Funders

Collaborators

Introduction to the purpose of the research

Machine health monitoring is crucial for keeping machine operation within its nominal and safe boundaries, which guarantees the best performance and a longer machine lifetime. Current machine sensors are either contact or contactless. Contact sensors are mounted on the machine body, while contactless sensors require reflective tape to be installed on the machine body. Both sensor types introduce installation or replacement procedures that increase machine stoppage hours. Moreover, most contactless sensors rely on optical beams to capture machine motion, which requires the sensor to have a direct line of sight to the machine. This can be a challenge where machines are kept inside enclosures or even isolated rooms. For example, have you ever thought about how you could validate the rotation speed of your washing machine or the internal fan of your air conditioner?

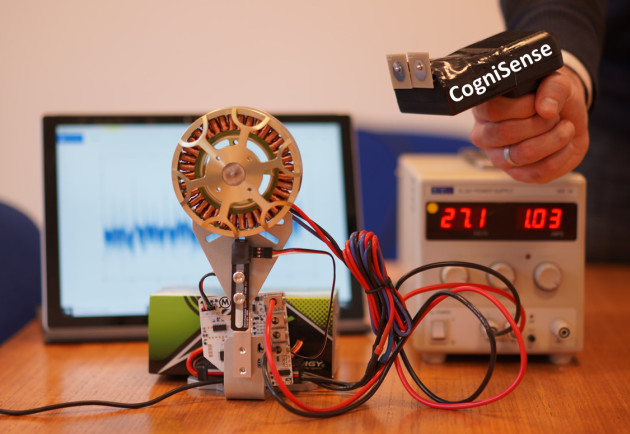

What is CogniSense and how does it serve as a solution?

CogniSense introduces a non-invasive technique that captures machine motion using radio frequency signals, without mounting any sensor or reflective tape on the machine. In this technique, electromagnetic waves act as sensors that can precisely capture machine parameters such as rotation speed, vibration and temperature. A key advantage of electromagnetic waves is their ability to propagate through walls and enclosures. This means that CogniSense not only provides online, contactless, non-invasive machine monitoring, but also extends monitoring beyond the technician's or engineer's line of sight. Many previously inaccessible machines can now be monitored using CogniSense.

How did the project start and what did the researchers do?

The RF shadowing theme in the AESE group focuses on exploring the different interaction mechanisms between electromagnetic waves and the surrounding environment, for example determining the moisture level inside fruit or UAV self-monitoring applications. This led to the discovery that the motion of machines actually present in our lab, such as rotating fans, affects a transmitted RF signal.

What happened?

To capture the rotation speed of those machines precisely, we conducted in-depth research to design a special antenna with predetermined features that can transmit and receive a specific type of electromagnetic wave to capture machine rotational motion. Antenna design was not the end of the story: we also had to design a dedicated software algorithm that can analyse the signal scattered from the machine and infer its speed. We conducted several tests inside and outside our lab to validate our invention. We had successive collaborations with other departments, such as biomedical engineering, and with industrial facilities such as Grant Instruments. Those collaborations helped us to conduct real field experiments to validate CogniSense's accuracy, which now approaches 100%.
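
The CogniSense algorithm itself is not described here. As a generic illustration of how a rotation speed might be inferred from a periodic received signal, the Python sketch below picks the strongest spectral peak with a plain discrete Fourier transform. The function names, the `blades` parameter and the assumption that the dominant frequency equals the blade-pass frequency are all hypothetical.

```python
import cmath
import math
from typing import Sequence


def dominant_frequency_hz(samples: Sequence[float], sample_rate_hz: float) -> float:
    """Return the frequency of the strongest non-DC component (naive DFT)."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean = sum(samples) / n
    centred = [s - mean for s in samples]  # remove the DC offset

    best_bin, best_power = 0, 0.0
    for k in range(1, n // 2 + 1):  # bins up to the Nyquist frequency
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(centred))
        power = abs(coeff) ** 2
        if power > best_power:
            best_bin, best_power = k, power
    return best_bin * sample_rate_hz / n


def rotation_speed_rpm(samples: Sequence[float], sample_rate_hz: float,
                       blades: int = 1) -> float:
    """Assumes the dominant frequency is the blade-pass frequency:
    `blades` signal periods per revolution (a hypothetical model)."""
    return 60.0 * dominant_frequency_hz(samples, sample_rate_hz) / blades
```

For example, one second of a clean 60 Hz tone sampled at 200 Hz, interpreted as a three-blade fan, yields 1200 rpm. Real scattered signals would be noisier and would need windowing, filtering and a finer frequency resolution than this sketch provides.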

Future plans for the project

From a research point of view, we plan to explore more machine properties, such as vibration and temperature. We also plan to increase the sensor field of view on two levels: 1) machine level, to capture multiple machine properties, and 2) component level, to capture multiple component properties within a single machine.

From a commercial point of view, CogniSense is currently patented under reference number US20190257711A1. We plan to launch a spin-out company to present this technology to industry. We are confident that the new value our product brings will represent a step change in the health monitoring sensor market.

Related people

Professor Julie McCann

Dr. Mohammad Heggo

Funders

Imperial Innovations, Alan Turing Institute

What is GraphicsFuzz?

GraphicsFuzz, a tool that automatically finds bugs in compilers for graphics processing units (GPUs), is based on fundamental advances in metamorphic testing pioneered by Donaldson’s Multicore Programming research group at Imperial College. GraphicsFuzz has found serious, security-critical defects in compilers from all major GPU vendors, leading to the spin-out of GraphicsFuzz Ltd., to commercialise the research. GraphicsFuzz Ltd. was acquired by Google in 2018 for an undisclosed sum, establishing a new London-based team at Google focusing on Android graphics driver quality.

Google have open-sourced the GraphicsFuzz tool, and it is now being used routinely to find serious defects in graphics drivers that affect the Android operating system (estimated 2.5 billion active devices worldwide) and Chrome web browsers (used by an estimated 66% of all internet users). The GraphicsFuzz project was enabled by two previous research projects from Donaldson’s group that have also led to industrial impact: GPUVerify, a highly scalable method for static analysis of GPU software, which ARM have integrated into their Mali Graphics Debugger tool, and CLsmith, an automated compiler testing tool that has found bugs in OpenCL compilers from Altera, AMD, Intel and NVIDIA.

What problems does GraphicsFuzz help to solve?

Graphics processing units are massively parallel, making them well-suited to accelerating computationally-intensive workloads, such as the machine learning and computer vision tasks required for autonomous driving, as well as enabling high-fidelity computer graphics. GPU programs and device drivers always need to be highly optimized: speed of execution is the sole reason for using GPU hardware. It is fundamentally challenging to build highly-optimized software with a high degree of reliability, yet GPU technology needs to be reliable: errors can be catastrophic when GPUs are used in safety-critical domains (such as autonomous vehicles), and malfunctioning graphics drivers render consumer devices useless by preventing their displays from working. The work underpinning the GPUVerify, CLsmith and GraphicsFuzz tools was motivated by the exciting challenge of how to design techniques to aid in building correct and efficient GPU software and compilers.

This industrial uptake of GPUVerify, CLsmith and GraphicsFuzz – all based on fundamental research from Imperial College London – is helping to make GPU software safer and more secure for end users, and to reduce the development costs of GPU software and associated device drivers.

What did the researchers do?

Donaldson’s group have contributed to solving this challenge via (1) methods for reasoning about the correctness of programs designed for GPU acceleration, and (2) techniques for automatically testing GPU compilers to check that they faithfully translate high-level GPU programs into equivalent GPU-specific machine code. The main challenge associated with (1) is that GPU programs are typically executed by thousands of threads, requiring highly scalable analyses. The difficulty associated with (2) is that graphics programming languages are deliberately under-specified, so that no oracle is available to determine whether a given program has been correctly compiled.

What happened?

The GPUVerify tool is based on a novel method for transforming a highly parallel GPU program, executed by thousands of threads, into a sequential program that models the execution of an arbitrary pair of threads. Data race-freedom (an important property of concurrent programs) for the parallel GPU program can then be established by analysing the radically simpler sequential program [1, 2]. This allows decades of work on sequential program verification to be leveraged.
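
Why reasoning about a pair of threads is enough can be illustrated with a small Python sketch: a data race always involves two threads, so a kernel is race-free if no pair of distinct threads has conflicting accesses. This is a brute-force illustration under simplifying assumptions, not GPUVerify's method, which reasons symbolically about an arbitrary pair rather than enumerating pairs. The `accesses` callback and the two example kernels are hypothetical.

```python
from itertools import combinations
from typing import Callable, Hashable, Optional, Set, Tuple

# accesses(tid) -> (locations read, locations written) by thread tid
# between two barriers (hypothetical model of a kernel).
Accesses = Callable[[int], Tuple[Set[Hashable], Set[Hashable]]]


def find_race(num_threads: int, accesses: Accesses) -> Optional[tuple]:
    """Return (thread_a, thread_b, location) for the first race found, else None."""
    per_thread = [accesses(t) for t in range(num_threads)]
    for a, b in combinations(range(num_threads), 2):
        reads_a, writes_a = per_thread[a]
        reads_b, writes_b = per_thread[b]
        # A race needs at least one write to a location both threads touch.
        conflict = (writes_a & (reads_b | writes_b)) | (writes_b & reads_a)
        if conflict:
            return a, b, min(conflict)
    return None


def safe_kernel(tid: int):
    # out[tid] = inp[tid]: each thread touches only its own elements.
    return {("inp", tid)}, {("out", tid)}


def racy_kernel(tid: int):
    # a[tid] = a[tid + 1] without a barrier: thread t reads what t + 1 writes.
    return {("a", tid + 1)}, {("a", tid)}
```

Here `find_race(4, safe_kernel)` returns `None`, while `find_race(4, racy_kernel)` returns `(0, 1, ("a", 1))`, the first conflicting pair of threads and the location they both touch.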

While GPUVerify can provide formal guarantees about the source code of a GPU program written in Open Computing Language (OpenCL), these guarantees are undermined if the downstream compiler that turns OpenCL into GPU-specific machine code is defective. This inspired the CLsmith project for automated testing of OpenCL compilers (extending the Csmith project for testing of C compilers, from the University of Utah).

CLsmith uses novel methods to generate OpenCL programs in a randomized fashion such that each generated program is guaranteed to compute some well-defined (albeit unknown) result [3]. This enables differential testing: if two different OpenCL compilers yield different results on one of these well-defined programs, at least one of the compilers must exhibit a bug. Automated program reduction techniques can then be used to provide small OpenCL programs that expose the root cause of compiler bugs [4]. Inspired by the success of CLsmith, GraphicsFuzz (originally called GLFuzz) is an automated testing tool for compilers for the OpenGL Shading Language (GLSL) [5, 6].
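
Differential testing can be sketched in a few lines of Python. The example below is a toy stand-in, not CLsmith: the "programs" are small integer expression trees rather than OpenCL kernels, and the two "compilers" are hypothetical evaluators, one of which contains a deliberately planted bug.

```python
import random

# A program is ("lit", n) or (op, left, right) with op in {"+", "-", "*"}.
# Only total integer operations are used, so every program is well defined.


def gen_program(rng: random.Random, depth: int = 3):
    """Randomly generate a well-defined expression tree."""
    if depth == 0 or rng.random() < 0.3:
        return ("lit", rng.randint(0, 9))
    op = rng.choice(["+", "-", "*"])
    return (op, gen_program(rng, depth - 1), gen_program(rng, depth - 1))


def _apply(op, a, b):
    return a + b if op == "+" else a - b if op == "-" else a * b


def run_compiler_a(p):
    """Hypothetical correct implementation."""
    if p[0] == "lit":
        return p[1]
    op, left, right = p
    return _apply(op, run_compiler_a(left), run_compiler_a(right))


def run_compiler_b(p):
    """Hypothetical implementation with a planted 'optimisation' bug."""
    if p[0] == "lit":
        return p[1]
    op, left, right = p
    a, b = run_compiler_b(left), run_compiler_b(right)
    if op == "*" and right == ("lit", 2):
        return a + 2  # deliberate bug: x * 2 should become x + x
    return _apply(op, a, b)


def differential_test(programs, compilers):
    """Return the programs on which the compilers disagree."""
    return [p for p in programs if len({run(p) for run in compilers}) > 1]
```

No oracle for the correct result is needed: a disagreement between the two implementations on a well-defined program is enough to show that at least one of them is wrong.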

The key innovation behind GraphicsFuzz is to apply metamorphic testing to compilers, based on the fact that we should expect two equivalent, deterministic programs to yield equivalent, albeit a priori unknown, results when compiled and executed. The tool takes an initial graphics program and uses program transformation techniques to yield many equivalent programs, looking for divergences in behaviour. Such divergences indicate compiler bugs. To shed light on the root causes of such bugs, transformations can be iteratively reversed to search for a pair of equivalent programs that differ only slightly, but for which the compiler under test generates semantically inequivalent code.
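
The two steps described above, generating equivalent variants and then reversing transformations to isolate the cause, can be sketched in Python as follows. This is a toy illustration under stated assumptions rather than the GraphicsFuzz implementation: programs are integer expression trees instead of GLSL shaders, results are integers instead of rendered images, and the transformations and the buggy `run_under_test` evaluator are hypothetical.

```python
# A program is ("lit", n) or (op, left, right) with op in {"+", "-", "*"}.


def add_zero(e):
    return ("+", e, ("lit", 0))


def mul_one(e):
    return ("*", e, ("lit", 1))


def sub_zero(e):
    return ("-", e, ("lit", 0))


def apply_all(program, transforms):
    """Apply semantics-preserving transformations in order."""
    for t in transforms:
        program = t(program)
    return program


def run_under_test(p):
    """Hypothetical compiler under test with a planted bug."""
    if p[0] == "lit":
        return p[1]
    op, left, right = p
    a, b = run_under_test(left), run_under_test(right)
    if op == "-" and right == ("lit", 0):
        return b - a  # deliberate bug: operands swapped for x - 0
    return a + b if op == "+" else a - b if op == "-" else a * b


def diverges(program, transforms, run):
    """Metamorphic check: an equivalent variant must give the same result."""
    return run(apply_all(program, transforms)) != run(program)


def reduce_transforms(program, transforms, run):
    """Reverse transformations one at a time while the divergence persists."""
    kept = list(transforms)
    i = 0
    while i < len(kept):
        candidate = kept[:i] + kept[i + 1:]
        if diverges(program, candidate, run):
            kept = candidate  # this transformation is not needed to expose the bug
        else:
            i += 1            # removing it hides the bug, so keep it
    return kept
```

Starting from `("+", ("lit", 3), ("lit", 4))` and the transformations `[add_zero, sub_zero, mul_one]`, the variant diverges from the original, and `reduce_transforms` returns `[sub_zero]`: a pair of programs that differ by a single transformation yet produce different results.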

Related people

- Hugues Evrard, research associate
- Jeroen Ketema, research associate
- Paul Thomson, research associate
- Adam Betts, research associate
- Nathan Chong, PhD student
- Andrei Lascu, PhD student

References to the research

[1] A. Betts, N. Chong, A.F. Donaldson, S. Qadeer, P. Thomson: GPUVerify: a verifier for GPU kernels. ACM OOPSLA 2012, pp. 113-132. Google Scholar cites: 159. DOI: 10.1145/2384616.2384625. Open access version

[2] A. Betts, N. Chong, A.F. Donaldson, J. Ketema, S. Qadeer, P. Thomson, J. Wickerson: The Design and Implementation of a Verification Technique for GPU Kernels. ACM Trans. Program. Lang. Syst. 37(3): 10:1-10:49, 2015. Google Scholar cites: 41. DOI: 10.1145/2743017. Open access version

[3] C. Lidbury, A. Lascu, N. Chong, A.F. Donaldson: Many-core compiler fuzzing. ACM PLDI 2015, pp. 65-76. Google Scholar cites: 92. DOI: 10.1145/2737924.2737986. Open access version

From freeing up time for busy households to assisting the elderly to lead an independent life, the home robotics market is expected to triple in size in the next five years, and the Dyson Robotics Lab is developing the technology that will enable home robots to perform intelligent tasks without human intervention. The Dyson Robotics Lab (DRL) at Imperial College London was founded in 2014 as a collaboration between Dyson Technology Ltd and Imperial College. It is the culmination of a thirteen-year partnership between Professor Andrew Davison and Dyson to bring his Simultaneous Localisation and Mapping (SLAM) algorithms out of the laboratory and into commercial robots, resulting in 2015 in Dyson’s 360 Eye vision-based vacuum cleaning robot, which can map its surroundings, localise itself and plan a systematic cleaning pattern.

Computer vision is a key enabling technology for future robots and is at the heart of the DRL’s research. The phase I £5m funding from Dyson has enabled Professor Davison to drive breakthrough research in scene understanding, from sparse reconstruction to dense reconstruction and semantic mapping. The focus has been to develop algorithms that run in real time using a handheld camera, pushing the boundaries in developing compact representations enabled by recent advances in machine learning. In 2019, the collaboration with Dyson was extended for another five years with the support of match funding from EPSRC.

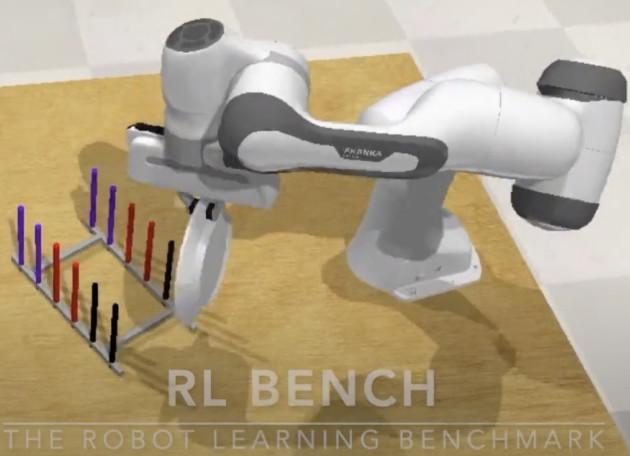

The aim is to push the frontiers in scene understanding beyond mapping and localisation. To perform truly human-like multi-tasking, robots will need to be able to understand and interact with their environment in ways that were thought impossible a few years ago. DRL’s current research is pushing the frontiers of semantic mapping to 3D object maps, from predefined household objects to unknown shape structures and occlusions. Object understanding in turn allows planning and manipulation tasks, from simple object picking to positioning and sorting. Generative class-level models of objects such as bottles, cups and bowls provide the right representation for principled and practical shape inference. Coupling this with a probabilistic engine that enables efficient and robust optimisation of object shapes from multi-depth images allows us to build an object-level SLAM system.

This object reconstruction enables robotic tasks such as packing objects into a tight box, sorting them by shape size or pouring water into a cup. Moreover, we look into robotic behaviours that mimic those of a human. A robot should be able to manipulate its environment while causing minimal disturbance to its surroundings, by choosing the path of least occlusion. Finally, training on real manipulation tasks is time-consuming and impractical, so developing approaches and a dataset (RLBench) for training in simulation is driving our research towards more efficient representations. To truly enable home robotics, robots should think and behave like humans without going through the same learning process. “It is truly remarkable to see multi-tasks performed in real time by a robotic arm that has only been trained in simulations.” Professor Andrew Davison

Related people

Professor Andrew Davison

Useful contacts

- Tom Curtin

Industrial Liaison Officer

- Prof. William Knottenbelt

Director of Industrial Liaison