Software

Open-source software from our lab. Most of it is based on personal software contributions from our lab members.

Disclaimer: This is research-level source code, so no support is provided.

Hypergraph Q-Networks

The official code of our ICLR 2021 research paper, "Learning to Represent Action Values as a Hypergraph on the Action Vertices."

- a class of RL agents based on "action hypergraph networks", intended for multi-dimensional, or high-dimensional, action spaces

- built on the TensorFlow and Dopamine frameworks

- includes implementations of a few baseline agents: DQN, Rainbow and Branching DQN (Tavakoli et al., 2018)

- contains training logs for a set of 35 benchmarking environments as well as scripts to plot them

- includes wrappers for action shaping and discretisation

- supports the most popular benchmarking environments: the Arcade Learning Environment, OpenAI Gym, DeepMind Control Suite and PyBullet

Paper: https://openreview.net/forum?id=Xv_s64FiXTv

Talk: https://slideslive.com/38953681
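To make the idea of an action hypergraph more concrete, here is a minimal Python sketch that is not taken from the released code. It assumes that the value of a joint action is the sum of the values of its hyperedges, where each hyperedge covers a subset of the action dimensions. The per-hyperedge tables are made-up numbers standing in for a network's outputs for one fixed state, and all helper names are hypothetical.

```python
from itertools import combinations, product
from math import prod


def hyperedges(num_dims, max_order):
    """All subsets of action dimensions with 1..max_order members (hypothetical helper)."""
    return [e for k in range(1, max_order + 1) for e in combinations(range(num_dims), k)]


def num_outputs(bins, edges):
    """Outputs needed: one value per sub-action combination of every hyperedge."""
    return sum(prod(bins[d] for d in e) for e in edges)


def joint_q(edge_values, action):
    """Assumed summation mixer: add up each hyperedge's value for its sub-action."""
    return sum(table[tuple(action[d] for d in e)] for e, table in edge_values.items())


def greedy_action(bins, edge_values):
    """Brute-force argmax over all joint actions (only sensible for tiny examples)."""
    return max(product(*(range(b) for b in bins)), key=lambda a: joint_q(edge_values, a))


# Two action dimensions with two sub-actions each; values are made up for illustration.
bins = [2, 2]
edge_values = {
    (0,): {(0,): 0.0, (1,): 1.0},
    (1,): {(0,): 0.5, (1,): 0.0},
    (0, 1): {(0, 0): 0.0, (0, 1): 0.0, (1, 0): 0.0, (1, 1): 2.0},
}
singletons_only = {e: t for e, t in edge_values.items() if len(e) == 1}
print(greedy_action(bins, singletons_only))  # (1, 0)
print(greedy_action(bins, edge_values))      # (1, 1)
print(num_outputs([3, 3, 3], hyperedges(3, 1)), num_outputs([3, 3, 3], hyperedges(3, 2)))  # 9 36
```

With only single-dimension hyperedges the model cannot express interactions between dimensions, so adding the pairwise hyperedge changes the greedy action. The output count shows the trade-off: higher-order hyperedges buy expressiveness at the cost of more outputs.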

Tonic: A Deep Reinforcement Learning Library for Fast Prototyping and Benchmarking

- a collection of configurable modules such as exploration strategies, memories, neural networks, and optimizers

- a collection of baseline agents built with these modules

- support for the two most popular deep learning frameworks: TensorFlow and PyTorch

- support for the three most popular sets of continuous control environments: OpenAI Gym, DeepMind Control Suite and PyBullet

- a large-scale benchmark of the baseline agents on 70 tasks

- scripts to train in a reproducible way, plot results, and play with trained agents

Repository: https://github.com/fabiopardo/tonic

Data: https://github.com/fabiopardo/tonic_data
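As a generic illustration of one kind of swappable module, a memory, here is a minimal uniform replay buffer in Python. It is a sketch under our own assumptions: the class and method names are hypothetical and do not reflect Tonic's actual interfaces, so refer to the repository for the real implementation.

```python
import random
from collections import deque


class ReplayMemory:
    """Fixed-capacity FIFO memory with uniform sampling (hypothetical, not Tonic's API)."""

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are dropped first
        self.rng = random.Random(seed)

    def store(self, obs, action, reward, next_obs, done):
        self.buffer.append((obs, action, reward, next_obs, done))

    def __len__(self):
        return len(self.buffer)

    def sample(self, batch_size):
        """Draw distinct transitions uniformly and regroup them field by field."""
        batch = self.rng.sample(list(self.buffer), batch_size)
        return tuple(zip(*batch))  # (observations, actions, rewards, next_obs, dones)


memory = ReplayMemory(capacity=5, seed=0)
for t in range(10):
    memory.store(obs=t, action=0, reward=1.0, next_obs=t + 1, done=False)
# Only the last five transitions (obs 5..9) are still in memory.
obs, actions, rewards, next_obs, dones = memory.sample(3)
print(len(memory), sorted(obs))
```

Keeping storage and sampling behind a small interface like this is what lets an agent swap, for example, a uniform memory for a different one without touching the rest of its code.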

Q-map: Goal-Oriented Reinforcement Learning Software

Q-map uses a convolutional autoencoder-like architecture combined with Q-learning to efficiently learn to reach all possible on-screen coordinates in complex games such as Mario Bros or Montezuma's Revenge. The agent discovers correlations between visual patterns and navigation and is able to explore quickly by performing multiple steps in the direction of random goals.

Paper: https://arxiv.org/abs/1810.02927

Source code: https://github.com/fabiopardo/qmap

Videos: https://sites.google.com/view/q-map-rl
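A property Q-map relies on is that a single transition carries information about reaching every goal coordinate at once. The Python sketch below illustrates only that idea, using tabular Q-learning on a small open grid instead of the convolutional network: values are indexed by state, action and goal cell, and every transition updates the values of all goals. The grid world, the reward of 1 for reaching the goal, the purely random behaviour policy (in place of Q-map's goal-directed exploration) and all names and hyperparameters are assumptions for illustration.

```python
import random

ACTIONS = [(0, -1), (0, 1), (-1, 0), (1, 0)]  # up, down, left, right


def step(pos, a, width, height):
    """Deterministic move; moving off the grid leaves the agent in place."""
    x, y = pos[0] + ACTIONS[a][0], pos[1] + ACTIONS[a][1]
    return (min(max(x, 0), width - 1), min(max(y, 0), height - 1))


def train_qmap(width, height, steps=20000, gamma=0.9, lr=0.5, seed=0):
    rng = random.Random(seed)
    cells = [(x, y) for y in range(height) for x in range(width)]
    # q[state][action][goal]: discounted value of reaching `goal` after `action` in `state`
    q = {s: [{g: 0.0 for g in cells} for _ in ACTIONS] for s in cells}
    pos = rng.choice(cells)
    for _ in range(steps):
        a = rng.randrange(len(ACTIONS))  # random behaviour policy (off-policy learning)
        nxt = step(pos, a, width, height)
        for g in cells:  # one transition updates the values of every goal at once
            if nxt == g:
                target = 1.0  # goal reached: terminal for this goal
            else:
                target = gamma * max(q[nxt][b][g] for b in range(len(ACTIONS)))
            q[pos][a][g] += lr * (target - q[pos][a][g])
        pos = nxt
    return q


def greedy_path(q, start, goal, width, height, max_steps=50):
    """Follow the greedy policy for one goal until it is reached or steps run out."""
    path, pos = [start], start
    while pos != goal and len(path) <= max_steps:
        a = max(range(len(ACTIONS)), key=lambda b: q[pos][b][goal])
        pos = step(pos, a, width, height)
        path.append(pos)
    return path


q = train_qmap(5, 5)
print(greedy_path(q, (0, 0), (4, 4), 5, 5))
```

Because the environment is deterministic, the learned value for each goal approaches gamma raised to the number of remaining moves, so acting greedily for any chosen goal follows a shortest path without ever having been trained on that goal specifically.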

Robot DE NIRO – Autonomous Capabilities Software

This release contains the implementation of DE NIRO’s state-of-the-art capabilities, including autonomous navigation, localization and mapping, manipulation, face, speech and object recognition, speech I/O, a state machine and a GUI. The software is integrated with ROS and written in Python. Online documentation is available.

Source code: https://github.com/FabianFalck/deniro

Docs: https://robot-intelligence-lab.gitlab.io/fezzik-docs/

Video: https://www.youtube.com/watch?v=qnvPpNyWY2M

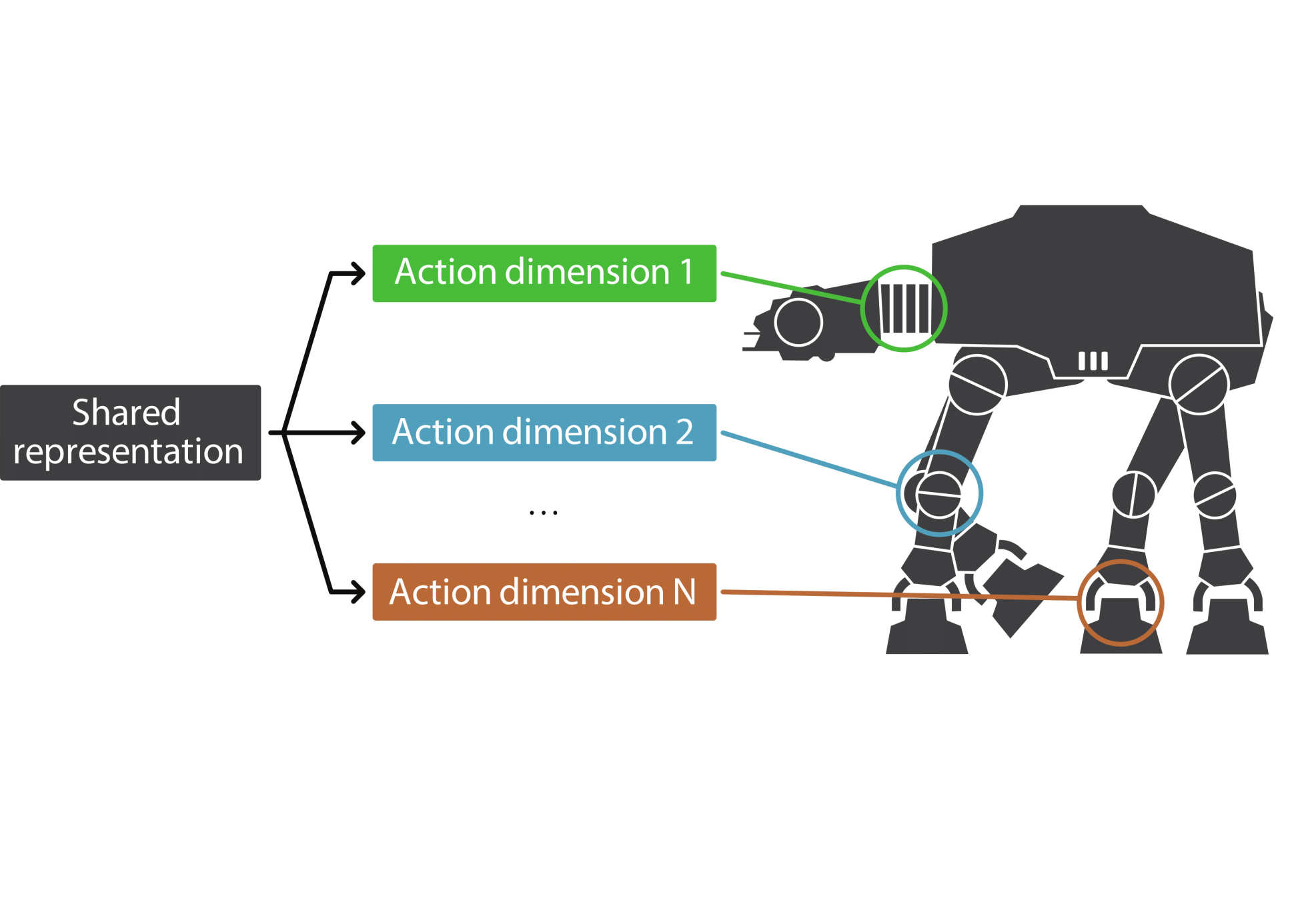

Branching Deep Q-Networks

The official code of our AAAI 2018 research paper, "Action Branching Architectures for Deep Reinforcement Learning."

- a class of RL agents based on "action branching methods", intended for multi-dimensional, or high-dimensional, action spaces

- built on the TensorFlow and OpenAI Baselines frameworks

- contains a set of pre-trained agents as well as a script to play with them

- includes a wrapper for action discretisation

- supports the OpenAI Gym MuJoCo environments

- includes a set of custom physical reaching environments

Paper: https://arxiv.org/abs/1711.08946

Talk: https://youtu.be/u0dCuln_a-Q
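Action branching keeps the number of outputs linear in the number of action dimensions: each dimension (branch) gets its own outputs, and the greedy action is chosen independently per branch. The Python sketch below assumes a dueling-style aggregation, Q_d(s, a_d) = V(s) + A_d(s, a_d) - mean over a' of A_d(s, a'), and evenly spaced bins for discretising each continuous dimension. The function names are hypothetical, and plain lists stand in for network outputs for a single state.

```python
def discretise(index, bins, low, high):
    """Map a bin index in [0, bins) to an evenly spaced value in [low, high] (bins >= 2)."""
    return low + index * (high - low) / (bins - 1)


def branch_q_values(value, advantages):
    """Assumed aggregation per branch: Q_d(a_d) = V + A_d(a_d) - mean(A_d)."""
    return [[value + a - sum(adv) / len(adv) for a in adv] for adv in advantages]


def greedy_action(value, advantages, low, high):
    """Pick the best bin in each branch independently, then map bins to continuous values."""
    qs = branch_q_values(value, advantages)
    indices = [max(range(len(q)), key=q.__getitem__) for q in qs]
    return [discretise(i, len(q), lo, hi) for i, q, lo, hi in zip(indices, qs, low, high)]


def output_counts(num_dims, bins):
    """Q-value outputs for branching (sum over branches) vs. a flat joint action space."""
    return num_dims * bins, bins ** num_dims


# Two action dimensions in [-1, 1], three bins each; advantages are made up.
print(greedy_action(0.0, [[0.0, 1.0, 0.0], [2.0, 0.0, 0.0]], [-1.0, -1.0], [1.0, 1.0]))  # [0.0, -1.0]
print(output_counts(6, 11))  # (66, 1771561)
```

The last line shows why this matters for high-dimensional control: with 6 dimensions and 11 bins each, the branches need 66 advantage outputs, whereas enumerating every joint action would need over 1.7 million.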

Datasets

Datasets with recordings from the sensors of our robots during our research experiments. They enable researchers who do not have access to such robots to conduct research on perception, recognition, machine learning, etc.

Robot DE NIRO - Multi-sensor Dataset

This dataset contains a number of recordings from the sensors of Robot DE NIRO. The sensors include 2D and 3D laser scanners (LIDAR), cameras, RGB-D sensors (Kinect), ultrasonic and infrared proximity sensors, a 360-degree panoramic camera rig, stereo vision, etc. The dataset is stored in ROS bag format and is rather large (a few GB).

>>> Download coming soon... <<<

Contact us