Engineers adopt a flesh approach to making gaming characters more lifelike

by Colin Smith

New technique maps geometry of skin to make computer characters more realistic

A new technique will capture the subtle deformations in human skin and translate them into more realistic computer characters.

Film and game producers currently use a technology called motion capture to record an actor’s body movements during a performance. This data is fed into a computer and used to generate a character’s actions. The technology also captures facial performances, but it cannot capture the incredibly subtle expressions created by folds in facial skin, especially around the eyes, nose and mouth. As a result, computerised faces are less lifelike.

Now researchers from Imperial College London and the USC Institute for Creative Technologies, which is part of the University of Southern California, have developed a method for capturing the details of skin at a resolution of around ten microns (0.01 millimetres). The technology images the microscopic geometry of patches of facial skin in various states of stretch and compression, which is then analysed and compared with the neutral, uncompressed state of the skin. This enabled the team to develop a model of how skin deforms at the microscopic level during facial expressions. This advance could pave the way for more realistic characters in computer games and computer-generated actors in movies.

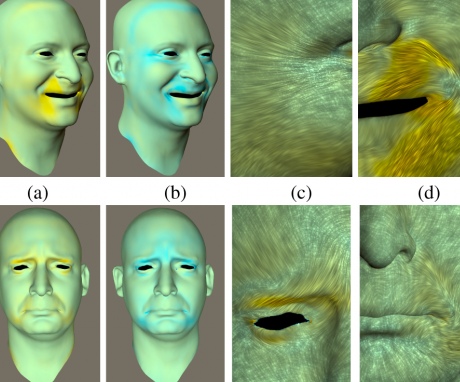

Above: The researchers have developed a technique that enables them to map microscopic changes in skin texture in stages to build up a more lifelike image of characters.

The research was led at Imperial by Dr Abhijeet Ghosh from the Department of Computing.

Dr Ghosh said: “Digital faces are becoming increasingly realistic when their facial expressions are static. However, the challenge going forward is to develop realistic faces when they are animated, which would heighten the movie and gaming experience for users. Our work takes us one step closer to that goal.”

Complex complexion

Human skin is a complex tissue composed of many layers. It exhibits a range of effects when it is deformed by facial movements, at both mid-range and microscopic scales.

Modern scanning technology can capture how skin moves at mid-scale resolutions of less than a millimetre. However, the dynamics of skin at the microstructure level, below a tenth of a millimetre, cannot be captured directly.

Virtual breakthrough

Above: This image (inset) shows how the microscopic detail of the skin's structure makes the computer-generated image more realistic.

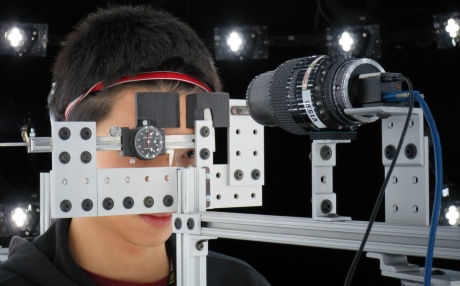

The measurement setup developed by the team consists of several components. A camera with a macrophotography lens enables the researchers to take high-resolution images of a patch of facial skin, and a specialised LED sphere illuminates the skin patch with polarised spherical gradient illumination. This is a form of controlled lighting that lets the researchers illuminate the subject under four different lighting conditions so that the texture, shape and reflectance of the facial skin can be measured. The device also contains a skin measurement gantry with callipers that either compress or stretch patches of the subject’s facial skin while the camera’s macro lens records the deformation. The information is relayed to a computer, which builds a computerised map of the face at a microscopic level.
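For readers curious how four lighting conditions can reveal surface shape, the following is a minimal sketch of the general principle behind spherical gradient illumination as described in the wider graphics literature; it is not the team's own code. It assumes the four conditions are a uniform "full-on" pattern plus three patterns whose brightness ramps linearly along the X, Y and Z axes, and it considers only the diffuse (matte) part of the skin's reflection, which the polarised lighting helps separate from the shine. Under those assumptions, dividing each gradient image by the full-on image recovers one component of the surface direction at that pixel. Both function names are hypothetical.

```python
import math


def estimate_normal(i_full, i_x, i_y, i_z):
    """Estimate a unit surface normal for one pixel from four lighting conditions.

    Assumption: i_x, i_y, i_z were lit by gradients whose brightness is
    (w_axis + 1) / 2 for incoming direction w, and i_full by uniform light,
    so each ratio i_axis / i_full lies between 0 and 1.
    """
    if i_full <= 0:
        raise ValueError("full-on intensity must be positive")
    # Map each ratio from [0, 1] back to a signed component in [-1, 1].
    v = [2.0 * i / i_full - 1.0 for i in (i_x, i_y, i_z)]
    length = math.sqrt(sum(c * c for c in v))
    if length == 0:
        raise ValueError("degenerate measurement")
    # For a matte surface the vector points along the normal but is shorter
    # than 1, so normalising it recovers the direction.
    return tuple(c / length for c in v)


def simulate_lambertian(normal, albedo=0.5):
    """Hypothetical helper: ideal matte responses for a unit `normal`."""
    # Uniform light: the clamped cosine integrates to pi over the sphere.
    i_full = math.pi * albedo
    # Gradient light: the cosine lobe's first moment is (2*pi/3) * n_axis.
    i_x, i_y, i_z = (0.5 * albedo * (math.pi + (2.0 * math.pi / 3.0) * c)
                     for c in normal)
    return i_full, i_x, i_y, i_z


# Usage: simulate a pixel facing (0, 0.6, 0.8) and recover its direction.
print(estimate_normal(*simulate_lambertian((0.0, 0.6, 0.8))))
```

Repeated for every pixel of the macro images, a calculation of this kind yields a map of surface orientation. The shiny (specular) reflection needs different treatment, because its reflection is centred on the mirror direction rather than on the surface normal.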

Above: The researchers placed a volunteer’s head inside the LED sphere (pictured) and photographed a patch of skin on the subject’s forehead under various deformations using an articulated skin deformer apparatus.

Using their digitised face model, the researchers were able to show how the skin became shinier when it was stretched and rougher when it was compressed. Other features captured included how the skin microstructure blurred in the direction of stretching and sharpened in the direction of compression.

They also demonstrated how the surface flattens when stretched and appears to bunch up when compressed, along with how light reflected off the skin as it moved. Combined, these effects gave a more visceral sense of surface tension on the computer-generated face and emphasised the different expressions.

The research was published this month in the journal ACM Transactions on Graphics, in its special issue on the proceedings of the ACM SIGGRAPH 2015 conference.

Next, the team plans to develop the technology further so that it can be applied in practice in visual effects and game production pipelines to improve the realism of digital characters.

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Colin Smith

Communications and Public Affairs