Should I trust my neural network?

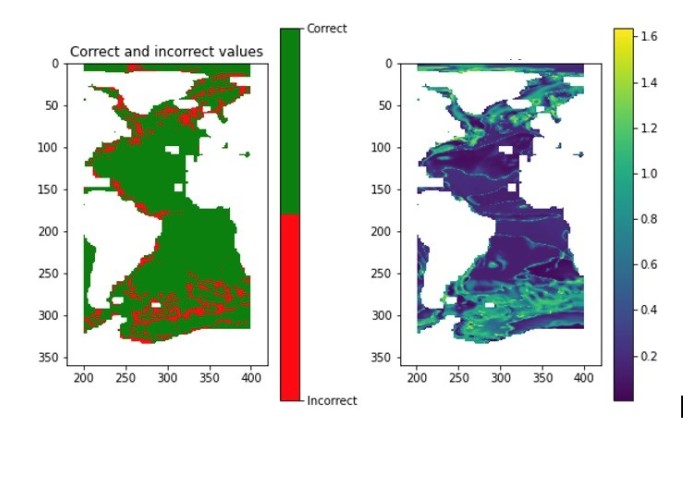

Figure 1: BNN results

Uncertainty in neural network predictions of ocean circulation.

In the coming decades, global heating will undoubtedly lead to changes in ocean circulation, potentially pushing the climate beyond key tipping points. Understanding these effects is vital for predicting the impact of climate change, and a recent IPCC Special Report highlighted this area as a key knowledge gap that needs to be addressed.

Ocean circulation is a problem well suited to machine learning techniques such as neural networks, because a wealth of ocean data is available from both historical observations and model forecasts. However, climate model predictions are themselves uncertain, so we need methods that can assess this uncertainty.

How much do you trust your results?

In a recent collaborative project between Mariana Clare (Imperial College London, UK); Redouane Lguensat and Julie Deshayes (LOCEAN, CNRS); and V. Balaji and Maike Sonnewald (Princeton, US), researchers explored how a technique known as a Bayesian Neural Network (BNN) might provide probabilistic predictions of ocean regimes.

This probabilistic prediction gives researchers a way of assessing how much they should trust the result. They found that, in general, when the BNN predicts the correct regime, it is also very certain. Conversely, when it predicts the wrong regime, its uncertainty is high: the network itself is telling the user that it is not sure about the regimes it got wrong. In essence, the neural network is saying “I don’t know”.

This picture is reflected in Figure 1 (see top of article), which shows the BNN results. The left-hand image shows accuracy, and the right-hand image shows an uncertainty measure: the higher the value, the more uncertain the result and the less the researchers should trust the BNN’s prediction.
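The article does not say which uncertainty measure is plotted in Figure 1. As an illustration only, one common choice for a classifier with uncertain weights is the predictive entropy: run the network several times with weights sampled from its learned distribution, average the regime probabilities, and take the entropy of that average. The sketch below assumes those sampled probabilities are already available as plain Python lists; the function names and the `threshold` cut-off for answering “I don’t know” are hypothetical and not taken from the project.

```python
import math
from typing import List, Optional, Sequence, Tuple


def mean_probabilities(samples: Sequence[Sequence[float]]) -> List[float]:
    """Average the regime probabilities over sampled forward passes."""
    n_samples = len(samples)
    n_regimes = len(samples[0])
    return [sum(s[k] for s in samples) / n_samples for k in range(n_regimes)]


def predictive_entropy(samples: Sequence[Sequence[float]]) -> float:
    """Entropy (in nats) of the averaged prediction.

    Close to 0 when every sample confidently picks the same regime;
    reaches log(K) when the averaged prediction is uniform over K regimes.
    """
    p = mean_probabilities(samples)
    return -sum(pk * math.log(pk) for pk in p if pk > 0.0)


def predict_or_abstain(
    samples: Sequence[Sequence[float]], threshold: float = 0.5
) -> Tuple[Optional[int], float]:
    """Return (regime index, entropy), or (None, entropy) meaning "I don't know".

    `threshold` is a hypothetical cut-off chosen for illustration only.
    """
    p = mean_probabilities(samples)
    h = predictive_entropy(samples)
    if h > threshold:
        return None, h
    regime = max(range(len(p)), key=lambda k: p[k])
    return regime, h


if __name__ == "__main__":
    # Five sampled forward passes that all confidently agree on regime 0.
    confident = [[0.97, 0.01, 0.01, 0.01]] * 5
    # Three sampled forward passes that disagree between regimes 0 and 1.
    disputed = [[0.9, 0.1, 0.0, 0.0], [0.1, 0.9, 0.0, 0.0], [0.5, 0.5, 0.0, 0.0]]
    print(predict_or_abstain(confident))  # regime 0 with low entropy
    print(predict_or_abstain(disputed))   # None, entropy equal to log(2)
```

Averaging before taking the entropy means the measure rises both when individual samples are unsure and when confident samples disagree with one another.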

Knowing when to say “I don’t know”

Knowing when to say “I don’t know” is an important skill for any model to have (or indeed any person!). BNNs, however, remain “black boxes” that take in data and output results with no explanation. When dealing with real-world physical problems such as ocean circulation, the research is far more valuable if oceanographers can understand why the neural network has made its decision. The project therefore also explored and developed explainable AI techniques to better understand the skill of the network.

The collaboration between the machine learning experts and the oceanography experts in the team meant that they were able to confirm that the BNN’s decision-making processes are physically realistic. It also confirmed and extended the oceanographers’ understanding of which features are most important in determining ocean regimes.

A successful collaboration

The project illustrated how bringing together the skills and knowledge of researchers from two very different fields of science can advance research in both. It has also opened up interesting avenues for future research and collaboration.

Thanks to the funders

This project was funded by the Ambassade de France au Royaume Uni.

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Mariana Clare

Department of Earth Science & Engineering