Bendy robotic arm twisted into shape with help of augmented reality

Imperial researchers have designed a malleable robotic arm that can be guided into shape by a person using augmented reality (AR) goggles.

The flexible arm, which was designed and created at Imperial College London, can twist and turn in all directions, making it readily customisable for potential applications in manufacturing, spacecraft maintenance, and even injury rehabilitation.


Instead of being constrained by rigid limbs and firm joints, the versatile arm is readily bendable into a wide variety of shapes. In practice, people working alongside the robot would manually bend the arm into the precise shape needed for each task. This flexibility comes from the slippery layers of Mylar sheets inside the arm, which slide over one another and can lock into place. However, users have found it difficult to configure the robot into specific shapes without guidance.

To enhance the robot’s user-friendliness, researchers at Imperial’s REDS (Robotic manipulation: Engineering, Design, and Science) Lab have

We’ve shown that AR can simplify working alongside our malleable robot. Dr Nicolas Rojas Dyson School of Design Engineering

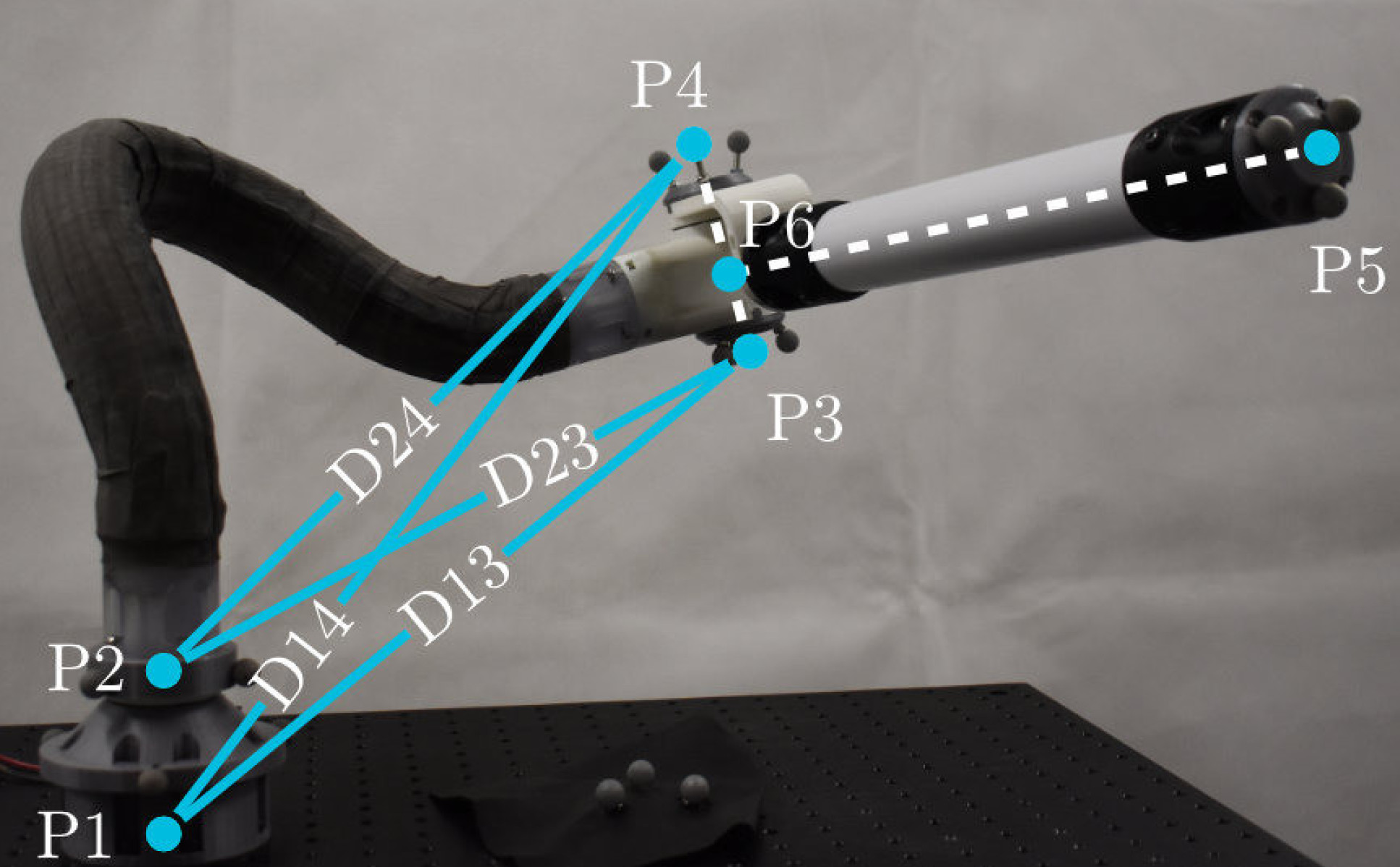

designed a system for users to see in AR how to configure their robot. Wearing mixed reality smart glasses and through motion tracking cameras, users see templates and designs in front of them superimposed onto their real-world environment. They then adjust the robotic arm until it matches the template, which turns green on successful configuration so that the robot can be locked into place.
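The matching step can be pictured as a simple tolerance check. The sketch below is a minimal illustration, not the REDS Lab's implementation: it assumes the motion tracking cameras report one 3D position per marker along the arm, and that a configuration counts as a match when every marker lies within a fixed distance of its template point. The function names, the 1 cm tolerance and the "red" state are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]

# Hypothetical tolerance in metres; the article does not state the real threshold.
TOLERANCE_M = 0.01


def matches_template(tracked: Sequence[Point], template: Sequence[Point],
                     tolerance: float = TOLERANCE_M) -> bool:
    """Return True if every tracked marker is within `tolerance` of its template point."""
    if len(tracked) != len(template):
        raise ValueError("tracked and template must have the same number of points")
    # math.dist gives the Euclidean distance between two points (Python 3.8+).
    return all(math.dist(p, q) <= tolerance for p, q in zip(tracked, template))


def template_colour(tracked: Sequence[Point], template: Sequence[Point],
                    tolerance: float = TOLERANCE_M) -> str:
    # "green" means the arm can be locked; "red" is an assumed "keep adjusting" state.
    return "green" if matches_template(tracked, template, tolerance) else "red"


if __name__ == "__main__":
    template = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.2), (0.2, 0.1, 0.35)]
    tracked = [(0.0, 0.0, 0.002), (0.1, 0.004, 0.2), (0.205, 0.1, 0.35)]
    print(template_colour(tracked, template))  # prints green
```

A per-marker check is the simplest choice; a real system might instead compare joint angles or the whole curve of the arm.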

Senior author of the paper Dr Nicolas Rojas, of Imperial’s Dyson School of Design Engineering, said: “One of the key issues in adjusting these robots is accuracy in their new position. We humans aren’t great at making sure the new position matches the template, which is why we looked to AR for help.

“We’ve shown that AR can simplify working alongside our malleable robot. The approach gives users a range of easy-to-create robot positions, for all sorts of applications, without needing so much technical expertise.”

The researchers tested the system on five men aged 20-26 with experience in robotics but no experience with manipulating malleable robots specifically. The subjects were able to adjust the robot accurately, and the results are published in IEEE Robotics & Automation Magazine.

Bent into shape

Potential applications include manufacturing, and building and vehicle maintenance. Because the arm is lightweight, it could also be used on spacecraft where every kilogram counts. It is also gentle enough that it could be used in injury rehabilitation, helping a patient perform an exercise while their physiotherapist performs another.

Co-first authors and PhD researchers Alex Ranne and Angus Clark, from the Department of Computing and Dyson School of Design Engineering respectively, said: “In many ways it can be seen as a detached, bendier, third arm. It could help in many situations where an extra limb might come in handy and help to spread the workload.”

The researchers are still perfecting the robot and its AR component. Next, they will look into adding touch and audio cues to the AR system to help users configure the robot more accurately.

Although the pool of participants was narrow, the researchers say their initial findings show that, with further testing and user training, AR could be a successful way to reconfigure malleable robots.

They are also looking into strengthening the robots. Although their flexibility and softness make them easier to configure and possibly even safer to work alongside humans, they are less rigid in the locked position, which could affect precision and accuracy.

“Augmented Reality-Assisted Reconfiguration and Workspace Visualization of Malleable Robots: Workspace Modification Through Holographic Guidance” by Alex Ranne, Angus Benedict Clark and Nicolas Rojas, published January 2022 in IEEE Robotics & Automation Magazine.

Images and video: REDS Lab, Imperial College London

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Caroline Brogan

Communications Division