Foundational Lectures

Our foundational lectures bring together experts from across Imperial to provide a unique introduction to core topics in robotics.

Fundamentals of Kinematics, Dynamics & Actuation

Prof Ferdinando Rodriguez y Baena & Prof Thrishantha Nanayakkara

Fundamentals of Interaction, Sensing & Control

Dr Ad Spiers, Dr Enrico Franco & Prof Etienne Burdet

Fundamentals of Robot Learning

Dr Edward Johns & Dr Antoine Cully

Specialised Lectures

Our specialised lectures explore the latest research, key challenges, and emerging opportunities in diverse research fields, including sensing and control, surgical robotics, assistive robotics, aerial robotics and human-robot interaction. The full programme will be announced soon!

Apply to take part

We encourage you to apply as soon as possible, as places are limited and will be allocated on a first-come, first-served basis. Applications will be reviewed on a rolling basis, and we aim to respond with a decision within 3 weeks of submission. Applications will close as soon as all places are filled.

Lab Tours

Our exclusive lab tours offer behind-the-scenes insights into the latest research tools and facilities. Options include the Hamlyn Centre for Robotic Surgery, the Aerial Robotics Lab, the MUlti-limb Virtual Environment, the Dyson Robotics Lab, and more. A full list of available lab tours will be released soon!

Group Projects

Our immersive, week-long group projects give you hands-on experience of robotics. Explore the list of projects below from last year’s summer school to get an overview of what you could do. The full list of 2026 group projects will be announced soon!

Intelligent Locomotion for Quadruped Robots

Adaptive & Intelligent Robotics Lab

This project uses learning algorithms to generate walking gaits for quadruped robots.

Conversational AI Engagement for Cognitive Disorders

Biomechatronics Lab

This project will involve the design and implementation of verbal human-robot interaction and machine learning analysis of speech to explore associations with cognitive function.

Understanding Artificial Haptic Sensing

Human Robotics Group

This project offers hands-on experience with artificial haptic sensors and multimodal tactile data.

Adaptive feedforward control of a drone

Insect Sensorimotor Control Lab

This project will involve the development, simulation, and implementation of an adaptive control method on a Parrot Mambo minidrone.

Biomimetic collision avoidance on a two-wheeled robot

Insect Sensorimotor Control Lab

This project involves assembling and programming a two-wheeled robot equipped with a vision sensor (camera), processors and motors.

Human Inspired Robot Reaching Motions

Manipulation and Touch Lab

In this project you will use the ROAG dataset of 2.5K human reaching motions to determine a reaching controller for a desktop robot manipulator.

Soft inchworm robot for endoscopy

Mechatronics in Medicine

This project aims to design and manufacture a soft inchworm robot intended to navigate a surgical phantom of the colon.

Knee exosuit with angle dependent damping

Morph Lab

In this project, you will design a wearable knee exosuit that uses elastic material to provide an angle-dependent impedance.

Robot Social Navigation: moving safely among people

Personal Robotics Lab

In this project, you'll implement a social navigation algorithm for a wheeled or legged robot.

Reinforcement Learning for Object Grasping

Robot Learning Lab

In this project, you will study how Reinforcement Learning can be used to train a robot to grasp objects.

Beating pulse simulator using soft Electrohydraulic actuation

Soft Robotic Transducers Lab

This project will give you hands-on experience of fabricating soft electrohydraulic actuators using an XYZ motion system, G-code programming, and conductive patterning.

Small-scale mobile soft robot

Soft Robotics and Applied Control Lab

This project aims to develop a small-scale mobile soft robot using inflatable balloons and miniature pumps.

Programme Fee

The programme fee for the 2026 Robotics Summer School is £1500. This fee covers your full participation in the programme (including tuition, lunches, dinner on Wednesday 22nd, and a drinks reception on Friday 24th), but does not include accommodation or travel costs. Unfortunately, we are unable to offer scholarships this year.

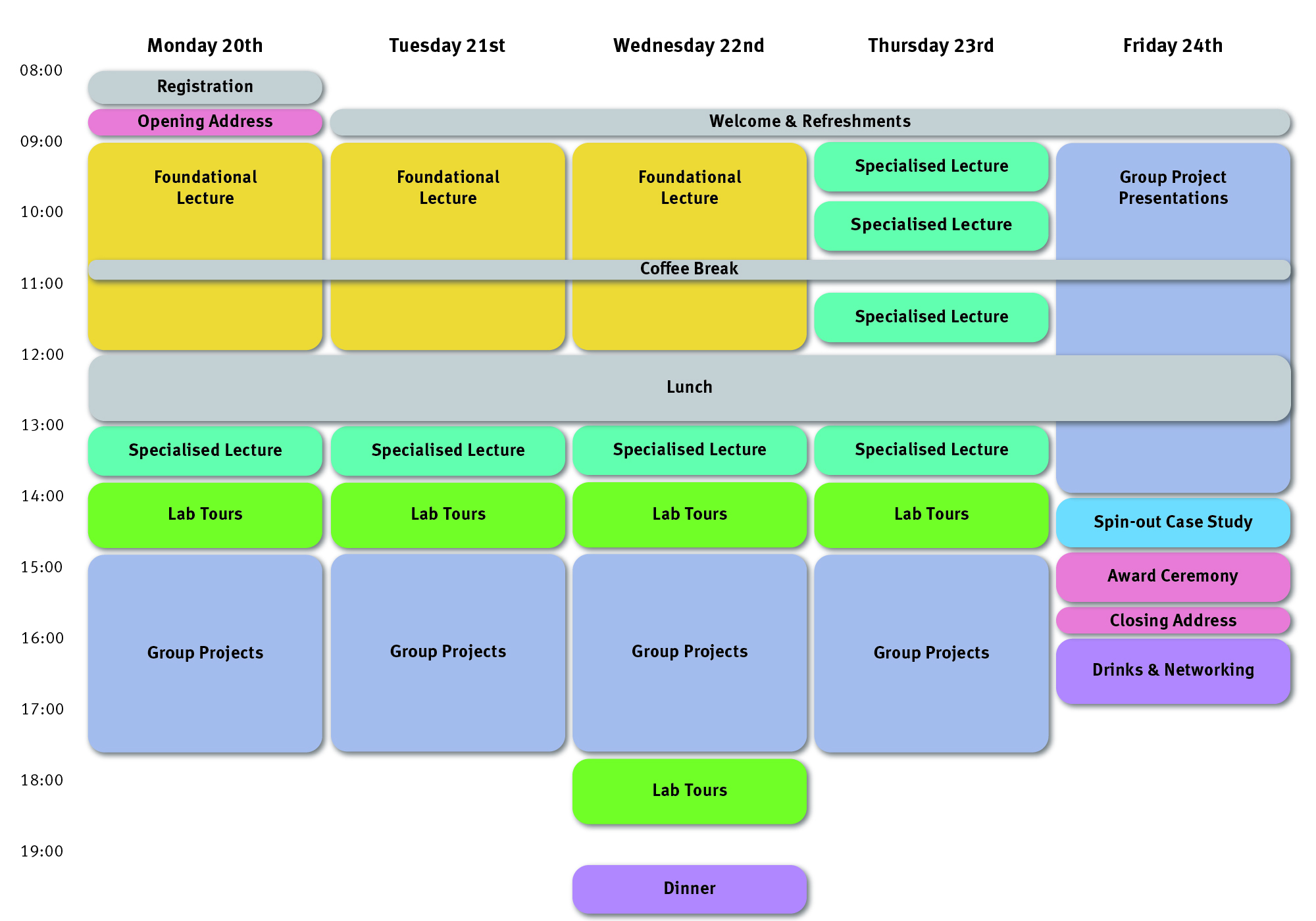

Schedule

Frequently Asked Questions

- How do I apply?

To apply for our 2026 Summer School, please complete the short application form. We encourage you to apply as soon as possible, as places are limited and will be allocated on a first-come, first-served basis.

- Who can apply?

While we particularly welcome current and recent postgraduate students, early career researchers and industry professionals, we will consider all applications on an individual basis.

We understand that not everyone will arrive with the same background or level of experience, and that’s okay. Check our eligibility guidelines to see what type of experience will be beneficial for students attending the Summer School.

Please use the application form to tell us more about your career goals and how this experience might support your journey.

- What is the programme fee?

The fee for the Imperial Robotics Summer School is £1500. Unfortunately, we are unable to offer scholarships this year.

- Are scholarships available?

As the Summer School is only in its second year and we are still establishing our financial position, we unfortunately cannot offer scholarships this year. However, this is something we hope to offer in the future.

- Is the summer school accredited?

No, the Imperial Robotics Summer School is not accredited and does not count towards formal academic credit. However, we will provide all participants with a certificate of participation upon successful completion of the programme.

- Can I work whilst attending the summer school?

Due to the intensive nature of the programme, we do not recommend undertaking employment during the summer school.

- Can I attend only part of the summer school, such as just the lectures?

The summer school is designed for full participation, including both the lecture series and group projects. Partial attendance is not permitted, as the programme is intended to provide a cohesive and immersive learning experience.

- Is accommodation provided?

Accommodation is not provided. Participants can book accommodation directly through Imperial's Accommodation service, or arrange their own accommodation off-campus. Please see the accommodation section for more information.

- What do I need to bring with me to the summer school?

You will need to bring your own laptop so that you can complete all aspects of the summer school.

- Will I receive feedback on my application?

Unfortunately, due to the high volume of applications we receive, we are unable to offer feedback to unsuccessful applicants.

- How do I secure my place?

We aim to notify successful applicants within 3 weeks of receiving their application. You will then be asked to register for the Summer School and pay the programme fee. Please note that your place is not secured until you have completed the registration form and made a payment.