New robot console announced

New robot console launched by the Hamlyn Centre

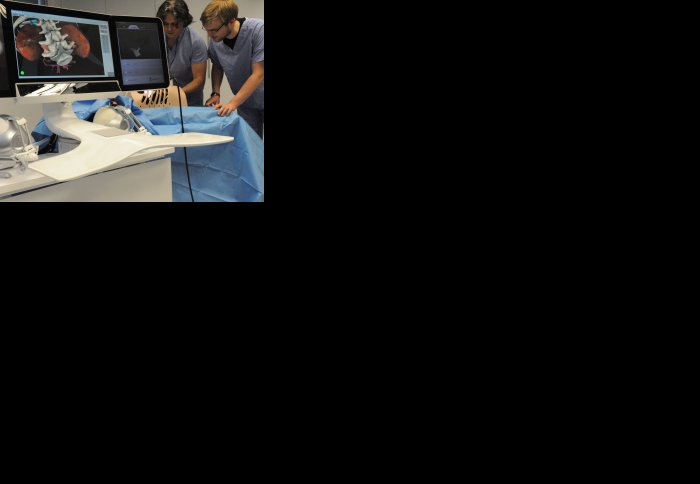

At a recent EU FP7 project review meeting, the Hamlyn Centre research team launched the ARAKNES (Array of Robots Augmenting the KiNematics of Endoluminal Surgery) robot console.

The ARAKNES project is part of the European Commission's 7th Framework Programme (FP7). The Hamlyn Centre is responsible for the design of the robot console and for system integration, and also contributes to other research work packages. The project is coordinated by Scuola Superiore Sant'Anna; other partners include Università di Pisa (Italy), EPFL (Switzerland), MicroTech (Italy), ST Microelectronics (Italy), University of St Andrews (United Kingdom), Karl Storz (Germany), Novineon Healthcare Technology (Germany), University of Barcelona (Spain) and CNRS (France). The project aims to advance current endoscopic surgical procedures by enabling single-port, bi-manual tele-operation superior to that offered by laparoscopic surgical robots.

The ARAKNES platform is based on several robotic designs, one of which uses untethered robotic modules that can be inserted through a natural orifice. The modules are positioned by means of extracorporeal magnets, and this design includes a magnetically levitated camera module. Wireless force feedback has also been investigated.

The clinical platform allows single-port umbilical insertion of a bi-manual robotic mechanism with a total of 14 degrees of freedom. Additional degrees of freedom are provided by an external parallel-robot positioner. Both on-board and panoramic stereoscopic visualisation are also provided.

The Hamlyn Centre has implemented its original concept of perceptual docking for robotic control. The master console is designed to enhance the surgeon’s interaction with the robot and to provide effective control, guidance and visualisation throughout live or simulated interventional procedures. Key features of the platform include:

- Versatile image guidance with augmented reality visualisation (Inverse Realism), making use of real-time 3D information recovered optically from the operating field in conjunction with preoperative and intraoperative medical imaging data;

- Integration of perceptual docking into the master console, using binocular eye-tracking information to give the surgeon a unique way of interacting with the robotic system and to provide enhanced human-machine synergy;

- Improved console ergonomics to ensure a comfortable posture and to suit the working styles of different surgeons;

- Seamless integration with the robotic system and the bio-sensors used during minimally invasive surgery.

The system is currently undergoing intensive evaluation and in vivo trials ahead of the project's completion in October 2012. Further details of the project can be found at www.araknes.org.

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Press Office

Communications and Public Affairs

- Email: press.office@imperial.ac.uk