Imperial staff at the forefront of big data

Imperial has long pioneered the use of information technology in research. The recent rise of ‘big data’ is the latest example of this.

The rise of ‘big science’ endeavours, such as the particle smashing experiments at CERN in Geneva and the creation of biomedical databases, has created a tsunami of information worldwide that needs to be tamed and made sense of.

The College has been at the forefront of the use of information technology in the university sector since the birth of mainframe computing in the 1960s, and again a few decades later with the rise of the internet.

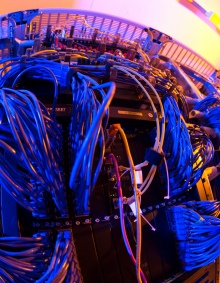

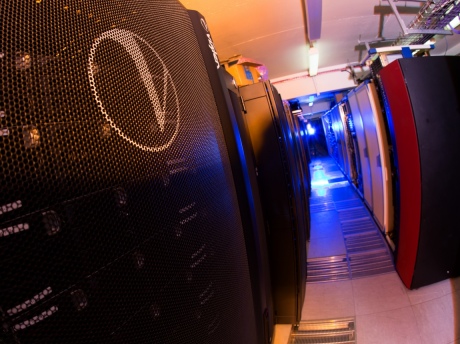

Today, the main Research Computing Facility in the Mechanical Engineering Building is a veritable treasure trove of discovery and innovation, thanks to its role hosting the computing hardware of departments across College. Visiting it is quite a revelation. The endless rows of servers and terminals hum loudly as cooling flows through them like lifeblood, lights blinking intermittently. It feels almost organic, which is perhaps no coincidence, since it is constantly being modified and renewed, giving birth to new insights.

The Facility must keep pace with demand from academics who increasingly rely on advanced technologies like High Performance Computing, which involves clusters of computer processors all connected together to tackle long and complex scientific calculations and simulations.

Staff, under the direction of Computer Room Manager Steven Lawlor (ICT), must also ensure that the Facility runs as energy-efficiently as possible, that it is secure from malicious attack, and that key systems have an uninterruptible power supply, backed by large batteries, in case of a power outage.

Life banks

At the interface between ICT and active research is Lead Bioinformatician Dr James Abbott (Life Sciences), pictured left, an academic who works at the College’s Bioinformatics Support Service.

He collaborates with an assortment of researchers across the Faculties of Medicine and Natural Sciences on a diverse array of projects, involving genome sequence analysis, clinical research data and images from light microscopy.

Although the term ‘post-genomic era’ has become commonplace since the completion of the Human Genome Project in 2003, that is far from the last word on genetics: there is a whole biosphere of organisms still awaiting sequencing.

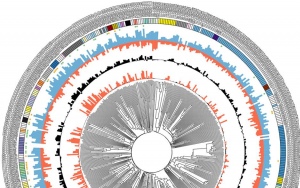

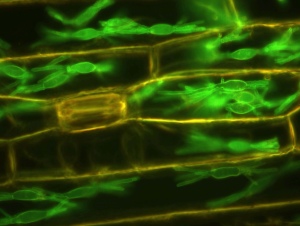

Indeed, James worked closely with Dr Pietro Spanu (Life Sciences) on decoding the genome of Blumeria graminis, a fungus that infects agricultural crops. Understanding more about this organism could have a big impact on crop yields and food security.

The genome of Blumeria graminis

“That was quite a challenging project because you’re working with these mixed samples of barley epidermal leaf peels, which comprise a layer of barley cells the fungus has infected. Around 10 per cent of the genetic material was fungal and about 90 per cent barley, so separating out the two was quite a computational challenge, because at the time, the genome of neither species was available,” says James.

Blumeria graminis (in green) infecting barley cells

There’s also much still to learn about the rich diversity of different human populations and the genetic basis of disease.

“That might involve taking a set of people with a condition and looking for commonalities – or alternatively focusing on a population in a defined geographic region, because people in a particular environment might evolve ways of better handling a condition.”

For James, the role also carries a strong sense of stewardship: taking responsibility for different projects and ensuring that researchers across institutions can make the best use of available resources.

Depending on privacy and sensitivity issues, project data may be made publicly available on the internet for the benefit of the wider scientific community. The challenge then lies in trying to pull together different databases in order to form the bigger picture.

“Particularly in the systems biology world, people are trying to take a more holistic approach in examining how things work, rather than the old-fashioned way of getting down to the very low level and measuring one thing carefully – so for example, integrating gene expression data, metabolomics and proteomics.”

Once projects come to a close, the research councils now require data to be retained for 10 years, which can be a challenge given the sheer volume involved.

“We archive data to tapes when the projects have finished – two copies in a fire safe, on a different site physically removed from the Research Computing Facility.”

No sooner has one batch of projects been completed than another starts. The Bioinformatics Support Service is currently working on a range of new initiatives, such as the Chernobyl Tissue Bank, which examines the genetic make-up of tumour tissue from people affected by the nuclear fallout of 1986.

Model Earth

One area of research that is constantly hungry for more and more data is weather and climate science. Climate modelling, which tests ideas about how the climate has evolved and may change in the future, depends on the availability of accurate, long-term observational data from various sources.

The Space and Atmospheric Physics (SPAT) Group at Imperial has been a world leader in this respect for decades. Dr Richard Bantges (Physics) is a Scientific Project Manager at SPAT, where he conducts his own research as well as performing a data stewardship role for the rest of the Group.

As Richard explains, the Group’s work broadly falls into two separate spheres: Earth observation, and computationally intensive modelling for applications such as extreme weather prediction.

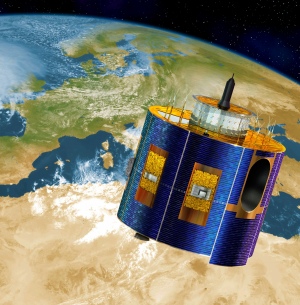

Incoming data generally comes from sensing equipment mounted on meteorological aircraft or on satellites, which may be either polar-orbiting or geostationary. Geostationary satellites orbit in sync with the Earth’s rotation and so continuously observe a particular region.

Meteorological satellite

The TAFTS (Tropospheric Airborne Fourier Transform Spectrometer) instrument, for example, is mounted on aircraft operated by the Met Office and measures the far-infrared radiation emitted by the Earth’s atmosphere, providing vital information about how the atmosphere heats and cools.

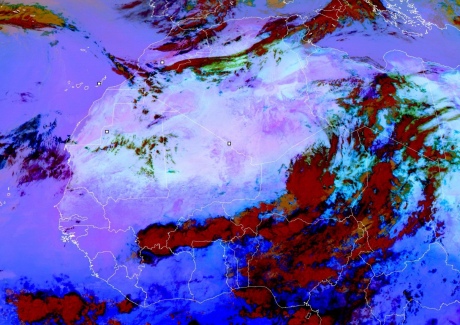

Meanwhile the Fennec project, which Dr Helen Brindley (Physics) is involved with, uses data from Meteosat-10, a satellite operated by the European Organisation for the Exploitation of Meteorological Satellites (EUMETSAT), to assess the climatic impact of airborne dust over the Saharan region of Africa. Data is beamed down from the satellite to a receiver on the roof of the Huxley Building, then sent on to the Research Computing Facility for processing before being published on the web as images, which are used to identify dust storms in the region. During research campaigns, aircraft pilots use these quick-look images to decide where best to fly.

Visualisation of dust particle distribution in Saharan Africa

Some of SPAT’s work, though, requires considerably more post-processing, combining data from a range of sources such as the atmosphere, ocean, waves, land and sea ice. This is the focus of Professor Ralf Toumi’s group (Physics).

“You just wouldn’t be able to do this sort of thing without the Research Computing Facility. Ralf’s team has hundreds of terabytes of output data and they need it in an environment where they can move it around and manipulate it, so it can be quickly analysed and visualised,” says Richard.

Like James over in Bioinformatics, Richard also takes pride in the responsibility of maintaining and safeguarding data for his immediate research community.

“It’s about having that knowledge of how data is used and the vision for what the requirements will be and to translate between the scientific aspects and the technical side of things. I tend to liaise between the two and say to ICT – yes we’re going to need this amount of data storage, and we need to make sure we’ve got this bit of kit and the infrastructure to support it.”

Data future

James and Richard are but two examples in the College of a relatively new class of multiskilled team player who must combine a sound scientific grounding with strong IT expertise. This sort of role is likely to become increasingly important in unlocking the underlying value in big datasets, from both a scientific and a commercial point of view. Indeed, the College’s industrial collaborators, such as Rolls-Royce, have come to rely on information hosted at the College.

‘It’s a numbers game’ might be a hackneyed sales cliché, but it is looking increasingly relevant to a range of disciplines.

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Andrew Czyzewski

Communications Division