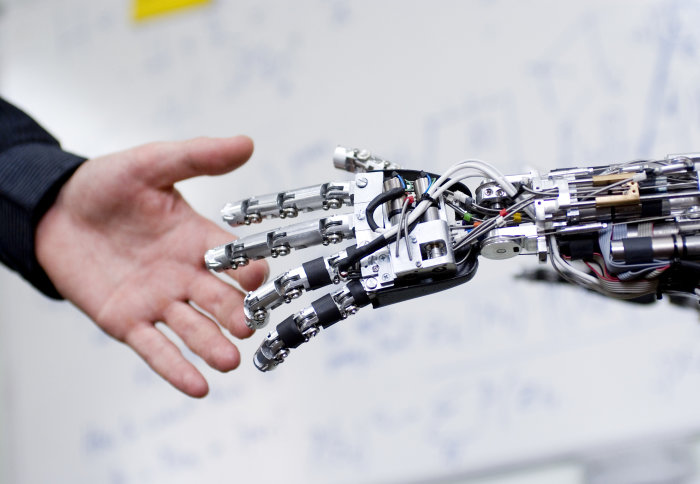

Imperial experts create robot helper to understand and respond to human movement

Researchers at Imperial have created a new robot controller using game theory, allowing the robot to learn when to assist a human.

The past decade has seen robots work increasingly with humans – for example in manufacturing, assistive devices for physically impaired individuals, and in surgery. However, robots cannot currently react in a personalised way to individual users, which limits their usefulness to humans.

Now, Professor Etienne Burdet from Imperial College London and colleagues have developed the first interactive robot controller to learn behaviour from the human user and use this to predict their next movements.

Reactive system

The researchers developed a reactive robotic programming system that lets a robot continuously learn the human user’s movements and adapt its own movements accordingly.

Professor Burdet, from Imperial’s Department of Bioengineering, said: “When observing how humans physically interact with each other, we found that they succeed very well through their unique ability to understand each other’s control. We applied a similar principle to design human-robot interaction.”

The research, conducted in collaboration with the University of Sussex and Nanyang Technological University in Singapore, is published in Nature Machine Intelligence.

Normally, humans can only interact with robots in quite simple ways: either in ‘master-slave’ mode, where the robot reproduces or amplifies the human’s movement, as with an exoskeleton, or with a robot that does not take the human user into account at all, as in rehabilitation. Humans are unpredictable and constantly change their movements, which makes it difficult for robots to predict human behaviour and react in helpful ways. This can lead to errors in completing the task.

The research team set out to investigate how a contact robot should be controlled so that it responds stably and appropriately to a user whose behaviour is unknown, during movements in activities such as sport training, physical rehabilitation or shared driving.

Game theory

The authors developed a robot controller based on game theory, the study of situations in which multiple players either compete or collaborate to complete a task. Each player has their own strategy (how they choose their next action based on the current state of the game), and all players try to optimise their performance while assuming the other players will also play optimally. To apply game theory successfully to human-robot interaction, the researchers had to overcome the issue that the robot cannot predict the human’s intentions just by reasoning.

They used game theory to determine how the robot should respond when interacting with a human. The robot uses the difference between its expected and actual motion to estimate the human’s strategy, that is, how the human turns errors in the task into new actions. Once the robot can estimate the human’s strategy, it can change its own strategy in response: for example, if the human’s strategy does not allow them to complete the task, the robot can increase its effort to help them.
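To make this estimate-then-respond loop concrete, here is a minimal Python sketch under simplifying assumptions of our own, not the authors’ published controller. It uses a one-dimensional task in which the tracking error evolves as e[k+1] = a·e[k] + b·(u_h + u_r). The human pushes with a fixed linear strategy u_h = -l_h·e that the robot cannot see, and both players weigh a quadratic cost of error and effort. At each step the robot compares the error it expected with the one it observed, nudges its estimate of l_h by gradient descent on that prediction error, and then chooses its own gain as the best linear-quadratic response to the estimated human strategy via a scalar Riccati iteration. The function names `best_response_gain` and `simulate`, and all parameter values, are hypothetical and chosen for illustration only.

```python
def best_response_gain(a, b, l_h_hat, q=1.0, r=1.0, iters=300):
    """Hypothetical helper: robot gain minimising sum(q*e**2 + r*u_r**2),
    assuming the human acts as u_h = -l_h_hat * e (scalar case)."""
    a_c = a - b * l_h_hat  # error dynamics the robot faces once the human's action is included
    p = q
    for _ in range(iters):  # iterate the scalar discrete-time Riccati equation to convergence
        p = q + a_c * a_c * p * r / (r + b * b * p)
    return a_c * b * p / (r + b * b * p)


def simulate(l_h_true, a=1.0, b=0.1, trials=30, steps=50, alpha=20.0):
    """Hypothetical repeated reaching trials; returns (estimated human gain, robot gain)."""
    l_h_hat = 0.0  # the robot starts with no knowledge of the human's strategy
    for _ in range(trials):
        e = 1.0  # each trial starts one unit away from the target
        for _ in range(steps):
            l_r = best_response_gain(a, b, l_h_hat)  # respond to the current estimate
            u_r = -l_r * e
            u_h = -l_h_true * e  # the human's true strategy, hidden from the robot
            e_next = a * e + b * (u_h + u_r)  # actual motion
            e_pred = a * e + b * (u_r - l_h_hat * e)  # motion the robot expected
            err = e_next - e_pred  # expected vs actual motion
            # Gradient step on 0.5 * err**2 w.r.t. l_h_hat, treating u_r as already applied.
            l_h_hat -= alpha * err * b * e
            e = e_next
    return l_h_hat, best_response_gain(a, b, l_h_hat)


for l_h in (2.0, 0.5):
    est, l_r = simulate(l_h)
    print(f"true human gain {l_h}: estimated {est:.3f}, robot gain {l_r:.3f}")
```

Running it, the estimates should approach the true human gains, and the robot should settle on a larger gain for the weaker human (l_h = 0.5) than for the stronger one (l_h = 2.0), echoing the idea that the robot increases its effort when the human contributes less. The sketch treats the estimated human strategy as fixed and best-responds to it; a full game-theoretic controller would also account for the human adapting in turn.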

The team tested their framework in simulations with human subjects, showing that the robot can adapt both when the human’s strategy changes slowly, as if the human were recovering strength, and when it changes inconsistently, such as after injury.

Working in harmony

Lead author Dr Yanan Li from the University of Sussex, who conducted the work while at Imperial’s Department of Bioengineering, said: “It is still very early days in the development of robots and at present, those that are used in a working capacity are not intuitive enough to work closely and safely with human colleagues or clients without human supervision. By enabling the robot to identify the human user’s behaviour and exploiting game theory to let the robot optimally react to any human user, we have developed a system where robots can work in much better harmony with humans.”

Next, the team will apply the interactive control behaviour to robot-assisted neurorehabilitation, with their collaborator at Nanyang Technological University in Singapore, and to shared driving in semi-autonomous vehicles.

The research was funded by the European Commission.

- - -

“Differential game theory for versatile physical human–robot interaction” by Y. Li, G. Carboni, F. Gonzalez, D. Campolo & E. Burdet, published 7th January 2019 in Nature Machine Intelligence.

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Joanna Wilson

Communications Division