International Robotics Showcase 2019: Human-Robot Interaction in Space

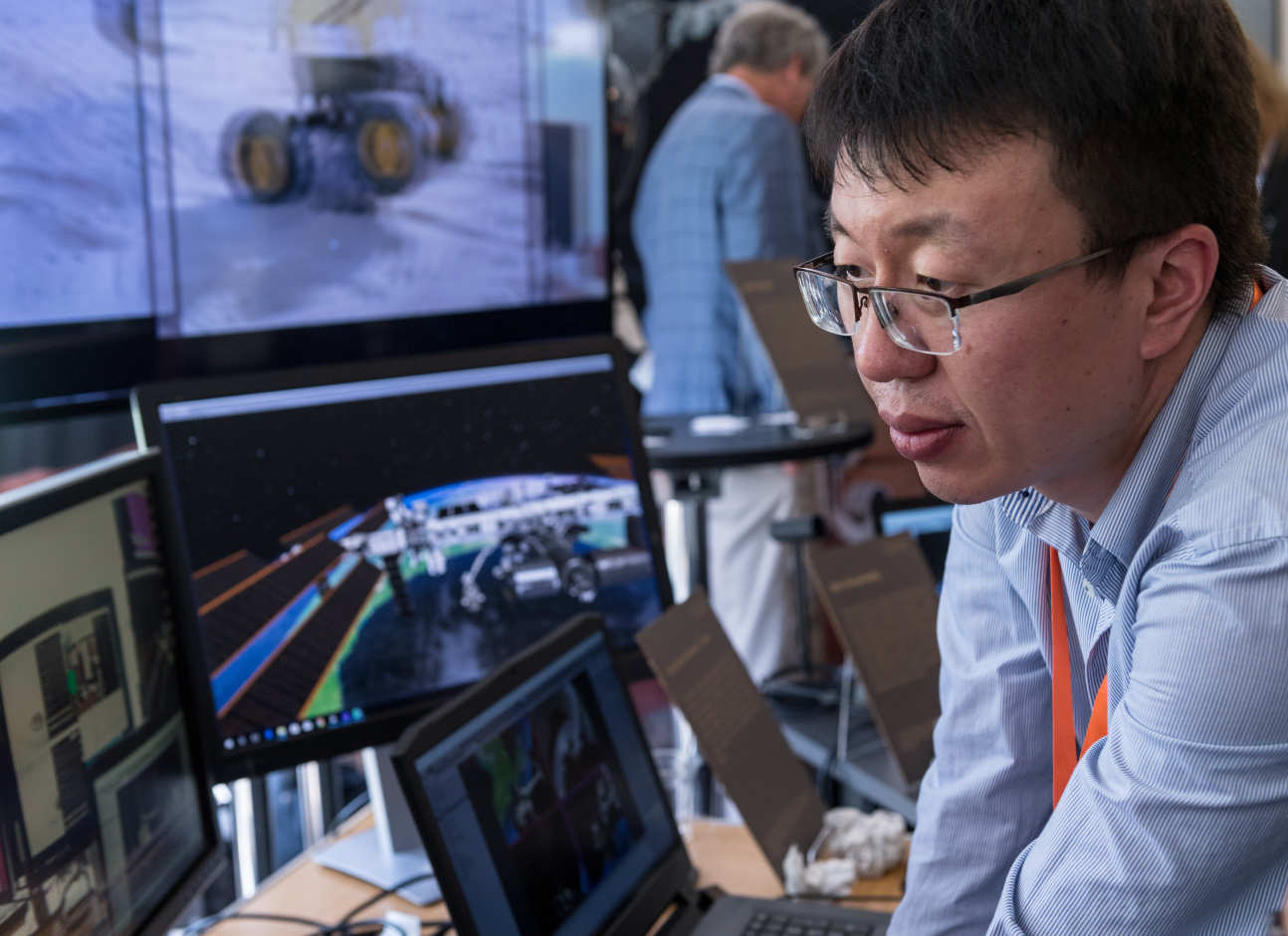

The Hamlyn Centre FAIR-SPACE research team showcased demos of an innovative space wearable, VR, operator-robot interaction and BCI during UKRW2019.

On 27 June, right after the Hamlyn Symposium on Medical Robotics 2019, our FAIR-SPACE research team at the Hamlyn Centre showcased a series of demos on human-robot interaction at the International Robotics Showcase 2019, which was part of the series of events making up UK Robotics Week.

Innovative space wearable

One of the demos our FAIR-SPACE Research team demonstrated at the event was the sensing spacesuit.

To monitor astronauts’ physiological signals and enhance their musculoskeletal movements, the Hamlyn Centre’s research in low-power, flexible bio-signal sensors, machine-learning-based sensing algorithms and assistive robotics could be incorporated into the spacesuit.

As a spacesuit is multi-layered, we envision our technologies being useful in at least two of its layers: the innermost layer, for monitoring physiological signals, and an assistive layer, for supporting musculoskeletal movements.

In addition to the garment, our work on brain-computer interfaces and VR could be incorporated into the space helmet for better situation awareness.

Virtual reality (VR)

Virtual reality (VR) is one way to create simulated experiences. Given that access to space is rare, VR could be very useful for training and for designing for space. Indeed, NASA has a VR laboratory for training astronauts.

The Hamlyn Centre’s research on linking operations in a virtual International Space Station (ISS) to haptic feedback is the beginning of a test bed for developing models of multi-modal human-robot interaction (HRI).

The movie our research team showcased, together with the interactive VR demo that visitors could try for themselves, was an invitation to discuss the use of VR, multi-modal interaction and the challenges of designing for space.

Operator-Robot Interaction

In space, on-orbit operations are crucial for the construction and maintenance of platforms such as the International Space Station (ISS), and for the tele-operation of planetary rovers from orbit. Human-robot interaction remains a crucial part of assembly and servicing tasks, even though most tasks will be performed by robots because of the extreme environment.

In our demo, we transferred technologies that are currently being researched for tele-operated surgery to tele-operated space operations and explored the paradigm of shared autonomy. The technologies to be explored include a human-robot collaborative tele-operation system, haptic feedback, motion planning and control, and augmented reality.

Brain-Computer Interface (BCI)

Another highlight our FAIR-SPACE research team brought to the showcase was the application of Brain-Computer Interface (BCI) technology in the space environment. In order to monitor astronauts’ vital status, the Hamlyn Centre is developing technologies for understanding how the unusual environment of space affects astronauts’ cognitive state.

We use a BCI to monitor astronauts’ brain signals and detect workload, attention focus, disorientation and motion sickness. The resulting models could then be used to support human-robot interaction and teaming in space.

Future AI and Robotics for Space (FAIR-SPACE) Hub

The Future AI and Robotics for Space (FAIR-SPACE) Hub is a UK national centre of research excellence in space robotics and AI. As one of the FAIR-SPACE Hub's academic partners, the Hamlyn Centre research team is leading the ‘Human-Robot Interaction’ theme, which is set to demonstrate human-robot cooperation and collaboration for performing inspection tasks, applicable to both orbital and planetary space environments.

The research projects mainly focus on:

- Haptic tele-operation and intelligent motion: Retargeting of anthropomorphic systems; adaptation and mapping of feasible motions between the operator and the anthropomorphic/dual-arm systems; software optimisation safety layer to handle physical constraints.

- Wearable astronaut-robot proximal interaction technology: Creation of human-robot interface technology that can be used to control space robotics during physically demanding scenarios, such as spacewalks and inter-vehicular maintenance on the ISS.

- Combined Augmented Reality/Virtual Reality for space environments: Creation of human-robot interface technology that can be used to control space robotics during physically demanding scenarios, such as spacewalks and inter-vehicular maintenance on the ISS.

For more information about the FAIR-SPACE Hub, please visit their official website.

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Erh-Ya (Asa) Tsui

Enterprise