Proposed mechanisms to detect illegal content can be easily evaded, study finds

by Gemma Ralton

Data scientists have shown that current mechanisms to detect illegal content proposed by governments, tech companies and researchers are not robust.

A team of data scientists from Imperial’s Data Science Institute (DSI) and the Department of Computing have demonstrated that current mechanisms of detecting illegal content, known as perceptual hashing, do not work sufficiently and could be easily bypassed by illegal attackers online who aim to evade detection.

In the study, published in 31st USENIX Security Symposium, held this week in Boston, the team including the DSI’s Shubham Jain, Ana-Maria Cretu, and Dr Yves-Alexandre de Montjoye showed that 99.9% of images were able to successful bypass the system undetected whilst preserving the content of the image.

“Our results shed serious doubts on the robustness of perceptual hashing and scanning mechanisms currently proposed by governments and researchers around the world." Shubham Jain Co-Author

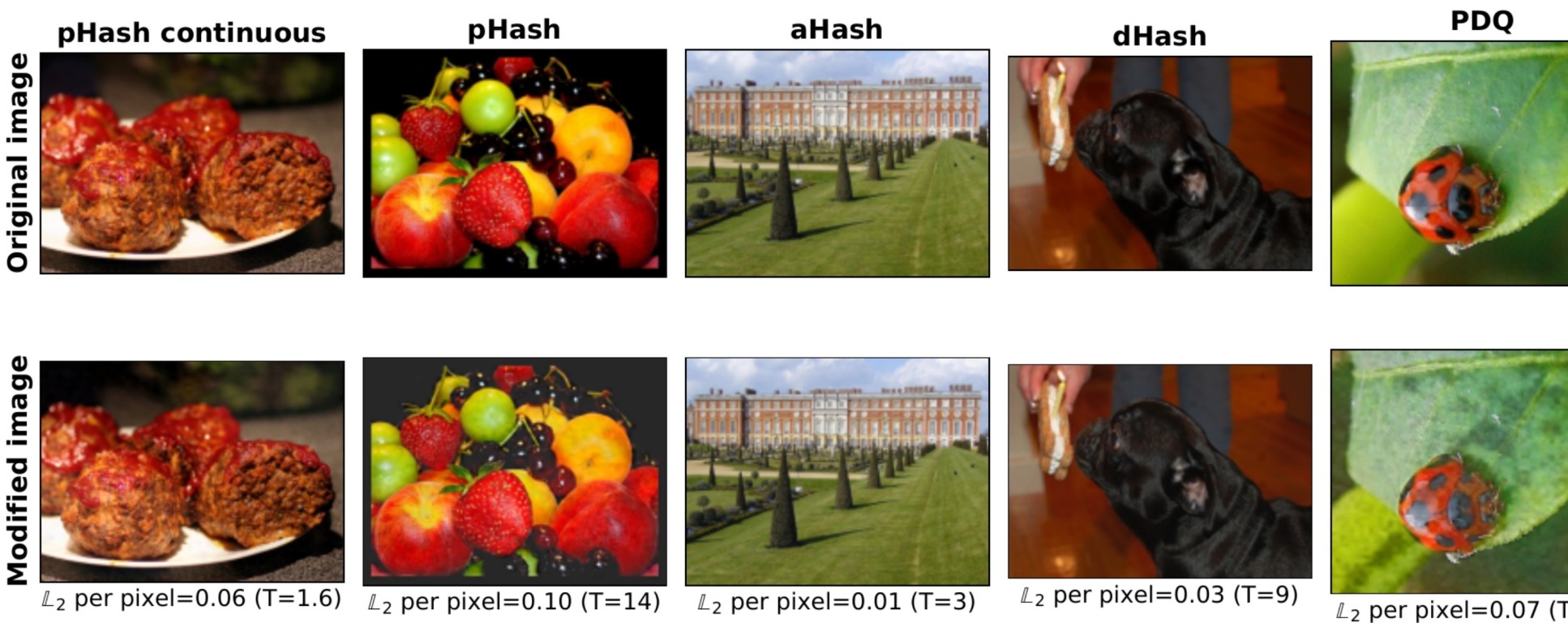

Through a large-scale evaluation of five commonly used algorithms for detecting illegal content, including the PDQ algorithm developed by Facebook, the team showed that modified images are able to avoid detection whilst still maintaining very visually similar images.

Co-Author Shubham Jain said: “Our results shed serious doubts on the robustness of perceptual hashing and scanning mechanisms currently proposed by governments and researchers around the world.” Robustness in computer science is the ability of a computer to cope with errors or unexpected input.

Detecting illegal content online

Currently, messaging platforms such as WhatsApp use a process called end-to-end encryption which enables people to securely and privately communicate with one another.

Governments and law enforcement agencies have however raised concerns that illegal content might now be shared undetected.

A mechanism of scanning data known as client-side scanning has been recently proposed by tech companies and governments as a solution to detect illegal content in end-to-end encryption communications. Client-side scanning broadly refers to systems that scan message contents such as images, text and videos, for matches against a database of previously known illegal content, before the message is encrypted and sent to the intended recipient.

However, it has received a significant backlash from the privacy community, raising concerns that the system of client-side scanning could result in privacy breaches for surveillance purposes.

Moreover, the creation of the UK Online Safety Bill and the EU Digital Services Act package has suggested having mandatory illegal content detection on large messaging platforms, but this assumes that the existing perceptual hashing-based client-side scanning systems would work.

Digital fingerprints

Perceptual hashing is a type of client-side scanning that uses a fingerprinting algorithm to produce a signature of an image using numbers. It is designed to generate signatures that remain robust to slight image modifications like rotation or rescaling.

This numerical signature of an image, or perceptual hash, can be compared to other signatures of existing illegal content, stored in a large database that is continually updated. It is however impossible to retrieve the image from the perceptual hash.

The detection system compares the two hashes and the image is flagged as illegal content if numbers are significantly similar and less than a predefined threshold.

Jain said: “By design, perceptual fingerprints change slightly with a small change in the image, something which, we showed, makes them intrinsically vulnerable to attacks. For this reason, we do not believe these algorithms to be ready and deployed for general use.”

False positives

In the study, the team suggested that attempting to solve the problem by increasing the threshold would be an ineffective and impractical solution.

The larger the threshold, the more modified the image and therefore the less likely the illegal content would be shared. However, the team found that increasing the threshold would detect too many false images, with one in every three detections a false positive, creating over one billion images wrongly flagged everyday.

Co-Author Ana-Maria Cretu explains: “It is important to keep the number of false positives to a minimum as too many could overwhelm the system and also there begins to be risks to individual privacy as each false positive has to be decrypted and shared with a third party for verification.”

The team also demonstrated that an attacker can create many different modified variations of one image whose fingerprints are very different from that of the original image and therefore adding image variations to the database of illegal content is unlikely to be effective.

Moving forward

Currently, tech companies like Apple have proposed to use perceptual hashing algorithms based on deep learning called deep hashing. Recently, the team showed their attack to be very effective against deep hashing as well. They applied the same attack to the winning model of Facebook’s Image Similarity Challenge 2021, a deep hashing algorithm designed to detect image manipulations.

The team are presenting their work at the 31st USENIX Security Symposium on 10-12 August 2022 in Boston where they will disseminate their results to the broader academic community. The symposium brings together researchers, practitioners, system administrators, system programmers, and others interested in the latest advances in the security and privacy of computer systems and networks.

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Gemma Ralton

Faculty of Engineering