Environment and Listener-Optimised Speech Processing for Hearing Enhancement in Real Situations (ELO-SPHERES)

Researchers: Patrick Naylor, Mike Brookes, Alastair Moore, Rebecca Vos, Sina Hafezi, Mark Huckvale (UCL), Stuart Rosen (UCL), Tim Green (UCL), Gaston Hilkhuysen (UCL).

See the project website here.

Age-related hearing loss affects over half the UK population aged over 60, and the most common treatment for mild-to-moderate hearing loss is the use of hearing aids. However, many hearing aid users find speech difficult to understand in noisy environments and feel that their hearing aids are not good enough at suppressing noise or adapting to changing situations; as a result, many do not wear their hearing aids all the time.

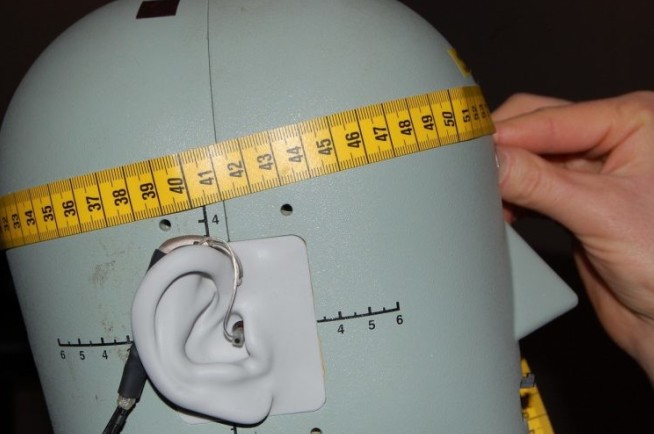

Recent technological advances have made it possible for a pair of hearing aids to share information in near real time via a wireless data link. Listening binaurally (with two ears) allows speech and noise to be separated on the basis of their spatial locations, which improves intelligibility. The goal of this project is to exploit these binaural advantages by developing speech enhancement algorithms that jointly enhance the speech received at the two ears. A range of algorithms, such as amplitude compression, noise reduction and beamforming, can also be used within hearing aids to enhance speech, to localise sound sources more accurately and to ensure that the spatial information present in the acoustic signals is retained.
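
To make the idea of separating sounds by their spatial location concrete, the sketch below implements the simplest two-microphone beamformer (delay-and-sum) in Python with NumPy. It is an illustration only and not the project's algorithm. It rests on several assumptions:

- a far-field plane wave in free field;
- one microphone per ear on a straight line, with head shadow and head-related transfer functions ignored;
- a hypothetical spacing of `MIC_SPACING = 0.16` m and a speed of sound of 343 m/s.

The helper names are hypothetical. Time-aligning the two channels for the talker's direction makes the talker add coherently. Sound from another direction keeps a residual time difference and partly cancels.

```python
import numpy as np

# Assumed free-field geometry: one microphone per ear, no head shadow.
SPEED_OF_SOUND = 343.0  # m/s
MIC_SPACING = 0.16      # m, hypothetical ear-to-ear distance


def fractional_delay(x, delay_s, fs):
    """Circularly delay x by delay_s seconds via a frequency-domain phase shift."""
    n = len(x)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return np.fft.irfft(np.fft.rfft(x) * np.exp(-2j * np.pi * freqs * delay_s), n)


def half_tdoa(angle_deg):
    """Half the left/right arrival-time difference for a far-field source.

    Angles are measured from straight ahead, positive to the right, so a
    source on the right reaches the right microphone first.
    """
    return 0.5 * MIC_SPACING * np.sin(np.deg2rad(angle_deg)) / SPEED_OF_SOUND


def simulate_pair(signal, angle_deg, fs):
    """Left and right microphone signals for a plane wave from angle_deg."""
    h = half_tdoa(angle_deg)
    return fractional_delay(signal, +h, fs), fractional_delay(signal, -h, fs)


def delay_and_sum(left, right, steer_deg, fs):
    """Undo the expected per-ear delays for steer_deg, then average the channels."""
    h = half_tdoa(steer_deg)
    return 0.5 * (fractional_delay(left, -h, fs) + fractional_delay(right, +h, fs))


def rms(x):
    """Root-mean-square level of a signal."""
    return np.sqrt(np.mean(x ** 2))


if __name__ == "__main__":
    fs = 16000
    t = np.arange(fs) / fs
    tone = np.sin(2 * np.pi * 1000 * t)  # 1 kHz tone, exactly 1000 cycles in 1 s

    # Beam steered towards a talker at +30 degrees; an interferer sits at -60.
    target_out = delay_and_sum(*simulate_pair(tone, 30, fs), 30, fs)
    noise_out = delay_and_sum(*simulate_pair(tone, -60, fs), 30, fs)

    print("target gain:", rms(target_out) / rms(tone))
    print("noise gain: ", rms(noise_out) / rms(tone))
```

Running the script should show a target gain of essentially 1 and a noise gain well below 1 for the tone arriving from -60 degrees. Delay-and-sum is used here only because it is the easiest beamformer to read. Its attenuation depends strongly on frequency and direction. Adaptive beamformers that use an estimate of the noise covariance matrix can suppress interference far more effectively.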

This work builds on several recent technological advances:

- in signal processing, for estimating the direction of arrival of sounds or the relative transfer function between a sound source and the microphones;
- in pattern classification systems, for identifying types of sound source;
- in signal enhancement methods, for separating wanted from unwanted information;
- in recent work (performed in E-LOBES) on characterising the spatial audio abilities of hearing-impaired listeners and on modelling binaural speech intelligibility in noise using signal-based metrics.

The project will also build on advances in virtual reality: headsets can now render immersive stereoscopic video in combination with spatial audio processing.
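
Direction-of-arrival estimation, mentioned above, can be illustrated with the classic GCC-PHAT method:

1. Whiten the cross-spectrum of the two microphone signals.
2. Find the lag of the resulting cross-correlation peak.
3. Convert that time difference into an azimuth.

GCC-PHAT is a standard technique chosen here for illustration and is not necessarily the estimator used in this project. The sketch assumes a hypothetical free-field, two-microphone geometry (0.16 m spacing, 343 m/s), a 48 kHz sample rate and a single source. The function names are hypothetical.

```python
import numpy as np

# Hypothetical free-field geometry for a two-microphone (one per ear) pair.
SPEED_OF_SOUND = 343.0  # m/s
MIC_SPACING = 0.16      # m, assumed ear-to-ear distance


def gcc_phat_tdoa(left, right, fs, max_tau):
    """Estimate how many seconds `left` lags `right` using GCC-PHAT.

    The PHAT weighting whitens the cross-spectrum, so the correlation peak
    depends on phase (delay) rather than on the spectral colour of the source.
    """
    n = len(left) + len(right)  # zero-pad to avoid circular wrap-around
    cross = np.fft.rfft(left, n) * np.conj(np.fft.rfft(right, n))
    cross /= np.abs(cross) + 1e-12  # PHAT: keep phase, discard magnitude
    cc = np.fft.irfft(cross, n)
    max_shift = max(1, min(int(fs * max_tau), n // 2))
    # Reorder so that index 0 corresponds to lag -max_shift.
    cc = np.concatenate((cc[-max_shift:], cc[:max_shift + 1]))
    return (np.argmax(np.abs(cc)) - max_shift) / fs


def estimate_azimuth(left, right, fs):
    """Map the estimated delay to an azimuth in degrees (positive = right)."""
    max_tau = MIC_SPACING / SPEED_OF_SOUND
    tau = gcc_phat_tdoa(left, right, fs, max_tau)
    return np.rad2deg(np.arcsin(np.clip(tau / max_tau, -1.0, 1.0)))


def fractional_delay(x, delay_s, fs):
    """Circularly delay x by delay_s seconds via a frequency-domain phase shift."""
    n = len(x)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return np.fft.irfft(np.fft.rfft(x) * np.exp(-2j * np.pi * freqs * delay_s), n)


if __name__ == "__main__":
    fs = 48000
    rng = np.random.default_rng(0)
    source = rng.standard_normal(fs)  # 1 s of white noise as a stand-in source
    true_deg = 30.0
    tau = MIC_SPACING * np.sin(np.deg2rad(true_deg)) / SPEED_OF_SOUND
    # The left ear hears a source on the right tau seconds after the right ear.
    left = fractional_delay(source, tau / 2, fs)
    right = fractional_delay(source, -tau / 2, fs)
    print("estimated azimuth (deg):", estimate_azimuth(left, right, fs))
```

The peak is picked on an integer-lag grid, so angular resolution is coarse. For this spacing at 48 kHz the grid spans only about ±22 lags. Practical systems usually interpolate around the peak. They must also cope with reverberation, multiple sources and the influence of the head on interaural cues.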

The project will investigate and characterise different acoustic environments to understand which types of noise reduce speech intelligibility and which strategies best improve it. It will also use virtual reality to design new hearing tests that more realistically measure both a listener’s spatial listening ability and the effects of visual information, and to simulate the effects of hearing aids and hearing impairment.

These aspects will be combined to produce an audio processing system that adapts in real time to the situation. It will use knowledge of the acoustic environment and of the listener’s abilities to select the most appropriate speech enhancement techniques, and so optimise the fitting of binaural hearing aids for the hearing-impaired listener.

Relevant publications from the SAP group:

- A. H. Moore, R. R. Vos, P. A. Naylor, M. Brookes: Evaluation of the Performance of a Model-Based Adaptive Beamformer. In: Speech in Noise Workshop, Toulouse, 2020.

- A. H. Moore, J. M. de Haan, M. S. Pedersen, P. A. Naylor, M. Brookes, J. Jensen: Personalized signal-independent beamforming for binaural hearing aids. In: The Journal of the Acoustical Society of America, 145 (5), pp. 2971-2981, 2019.

- A. H. Moore, W. Xue, M. Brookes, P. A. Naylor: Estimation of the noise covariance matrix for rotating sensor arrays. In: Proc. Asilomar Conf. on Signals, Systems and Computers, USA, 2018.

- M. Huckvale, G. Hilkhuysen: On the predictability of the intelligibility of speech to hearing impaired listeners. In: Proc. Intl. Workshop on Challenges in Hearing Assistive Technology (CHAT), Stockholm, Sweden, 2017.

- L. Lightburn, M. Brookes: A Weighted STOI Intelligibility Metric based on Mutual Information. In: Proc. IEEE Intl. Conf. on Acoustics, Speech and Signal Processing (ICASSP), 2016.

Contact us

Address

Speech and Audio Processing Lab

CSP Group, EEE Department

Imperial College London

Exhibition Road, London, SW7 2AZ, United Kingdom