Modelling and the tools for solving models are the engines of Process Systems Engineering. As economies around the world become more interconnected, as the amount of scientific and engineering data generated skyrockets, and as society demands a cleaner physical environment and better healthcare, we end up having to build and solve very complex models. The complexity continues to grow in size on the one hand, and in terms of non-linearity, non-convexity, combinatorial nature and uncertain parameters on the other. We are expected not only to solve these models fast (speed) but also to ensure that the solution obtained is reliable (reliability); speed and reliability often conflict with each other. The developments highlighted below aim to address these issues.

OPTICO Project: Model-Based Optimization & Control for Process-Intensification in Chemical and Biopharmaceutical Systems

Ioana Nascu, Richard Oberdieck, Maria Papathanasiou, Stratos Pistikopoulos, Sakis Mantalaris; FP7 EU Commission

Within this scope, four specific processes (Chromatography, Polymerization, Crystallization, Oxidation) are examined in detail. The main focus lies on formulating mathematical models of the processes and on identifying the bottlenecks that limit their efficiency. Additionally, advanced control strategies are developed to enable robust operation of the process, which in turn will enable legal regulations to be met while maintaining high process efficiency.

In collaboration with ETH Zurich and ChromaCon AG, the Sargent Centre mainly focuses on the optimization and control of a specific chromatographic system, the Multicolumn Solvent Gradient Purification (MCSGP) process. Due to its periodic and highly nonlinear behaviour, as well as the limited information available during operation, all model-based control strategies for this system have been unsuccessful so far. Using a multi-parametric programming approach, we aim to develop not only a theoretical but also a practical approach to tackle this challenging class of problems. On the theoretical side, Ioana Nascu is devising new tools and algorithms for state estimation, while Richard Oberdieck focuses on the solution of the multi-parametric optimization problem. On the practical side, Maria Papathanasiou uses system identification and model reduction techniques to recast the original model into a simpler form, which can then be solved using the multi-parametric framework.

Modelling, Optimisation and Explicit Model Predictive Control of Anaesthesia Drug Delivery Systems

Alexandra Krieger, Stratos Pistikopoulos; European Research Council, MOBILE, ERC Advanced Grant, No: 226462

Closed-loop model predictive control strategies for anaesthesia aim to improve patient safety and to fine-tune drug delivery, which is routinely performed by the anaesthetist. Towards this aim, the project’s objectives were (i) the development of a mathematical model for drug distribution and drug effect of volatile anaesthesia and (ii) model predictive control strategies for depth of anaesthesia control based on the derived model. An individualised, physiologically based pharmacokinetic model describing drug distribution and uptake was derived and adjusted to the weight, height, gender and age of the patient. The pharmacodynamic model, which describes the drug effect, links the hypnotic depth measured by the Bispectral index (BIS) to the arterial concentration through an artificial effect-site compartment and the Hill equation. The individualised pharmacokinetic and pharmacodynamic variables and parameters were analysed with respect to their influence on the measurable outputs, the end-tidal concentration and the BIS. The validation of the model, performed with clinical data for isoflurane and desflurane based anaesthesia, showed a good prediction of the drug uptake, while the pharmacodynamic parameters were individually estimated for each patient.
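
The pharmacodynamic link described above can be illustrated with a minimal sketch. It assumes a first-order effect-site compartment, dCe/dt = ke0 (Ca - Ce), and a Hill equation of the form BIS = E0 - Emax Ce^γ / (EC50^γ + Ce^γ). All parameter values and function names below are hypothetical placeholders chosen for illustration; they are not the patient-specific values estimated in the project.

```python
def hill_bis(ce, e0=100.0, emax=100.0, ec50=0.75, gamma=1.5):
    """BIS predicted by the Hill equation for an effect-site concentration ce.

    e0, emax, ec50 and gamma are illustrative assumptions, not fitted values.
    """
    if ce <= 0.0:
        return e0
    ratio = ce ** gamma / (ec50 ** gamma + ce ** gamma)
    return e0 - emax * ratio


def simulate_effect_site(ca_profile, ke0=0.5, dt=0.1):
    """Explicit Euler integration of dCe/dt = ke0 * (Ca - Ce), starting at Ce = 0."""
    ce = 0.0
    trajectory = []
    for ca in ca_profile:
        ce += dt * ke0 * (ca - ce)
        trajectory.append(ce)
    return trajectory


# Arterial concentration held constant (arbitrary units) for 600 steps of length dt.
ca_profile = [1.0] * 600
ce_traj = simulate_effect_site(ca_profile)
bis_traj = [hill_bis(ce) for ce in ce_traj]
```

In this sketch the effect-site concentration lags the arterial concentration and the predicted BIS falls monotonically from its baseline; at `ce == ec50` the Hill term is exactly half of `emax`.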

In a subsequent step, the control strategy was derived. The control design combines a linear multi-parametric model predictive controller and a state estimator. The non-measurable tissue and blood concentrations were estimated based on the end-tidal concentration of the volatile anaesthetic. The designed controller adapts to the individual patient’s dynamics based on measured data. An alternative approach adapts the controller to the patient by estimating the patient’s sensitivity on-line through the solution of a least-squares parameter estimation problem.

Multiscale Modelling of Molecules Interacting with Biosubstrate Systems

Jan Marzinek, Sakis Mantalaris, Stratos Pistikopoulos; MULTIMOD ITN, EC’s Seventh Framework Programme, FP7/2007-2013 – Grant Agreement No 238013

The binding free energy is one of the most important and sought-after thermodynamic properties in simulations of biological systems. The propensity of small molecules to bind to macromolecules of human bio-substrates regulates their sub-cellular disposition. This subject is fundamental in transdermal permeation and hair absorption of cosmetic actives. The biomechanical and biophysical properties of hair and skin are related to keratin, their major constituent. A key challenge lies in predicting the molecular and thermodynamic basis of small molecules interacting with alpha-helical keratin. In addition, the elastic properties of human skin, which are directly related to the interactions of keratin intermediate filaments, remain a challenging subject.

Molecular dynamics (MD) simulations make it possible to observe biological processes at atomistic resolution, providing more detailed insight into experimental results. However, MD simulations are limited in terms of the achievable time scales. Hence, in this work MD simulations were employed to provide a better understanding of the experiments conducted in parallel and to overcome the main limiting factor of MD, the simulation time. For this purpose, a thermodynamic and detailed structural basis has been delivered for small molecules interacting with keratin, explaining and validating experimental data. On top of this, a fast free energy prediction tool has been built within an all-atom force field using steered molecular dynamics alone. Within the coarse-grained approach, a force field was developed for the elastic properties of human skin, enabling simulations that are orders of magnitude faster than those with all-atom force fields. Applying this force field, with water included within tabulated potentials, made it possible to assess the influence of the natural moisturizing factor, composed of small molecules, on the elastic properties of the outermost human skin layer. In this work, MD results reached excellent agreement with the experimental data.

Population balance modelling in cell culture systems

David García Münzer, Sakis Mantalaris, Stratos Pistikopoulos; MULTIMOD Training Network, European Commission, FP7/2007-2013

The objective of the proposed research is the development of a framework, experimental and mathematical, that facilitates the study of mammalian cell cultures as a sum of subpopulations with individual growth/metabolic and productivity characteristics.

Closing the loop from in silico to in vivo: modelling and optimisation of bacterial cell culture systems

Argyro Tsipa, Sakis Mantalaris, Stratos Pistikopoulos; MULTIMOD Training Network, European Commission, FP7/2007-2013

An important aspect of industrial bioprocesses is capturing biomass growth and substrate degradation through growth kinetic models. However, the most widely used models are empirical and unstructured. Better models of cell growth, productivity and metabolism could be obtained by combining upstream and downstream events. In particular, when a substrate is introduced into a cell culture, specific genes are expressed, leading to enzyme production. These enzymes catalyse substrate degradation and biomass growth. It is therefore worth studying the effect of gene expression in growth kinetic models. This concept is studied in the bacterium Pseudomonas putida mt-2, a metabolically versatile soil bacterium able to degrade aromatic pollutants such as m-xylene and toluene. Pseudomonas putida mt-2 harbours the TOL plasmid (pWW0), which is a paradigm of specific and global gene regulation and contributes to the degradation of these environmental pollutants. The idea was first developed in 2011 by Koutinas et al. using m-xylene as the substrate, which triggers the TOL plasmid metabolic pathway, resulting in a hybrid growth kinetic model. In our project we extend this idea by using toluene as the substrate, which we observed to trigger both the TOL plasmid and chromosomal pathways. By adding more genetic information and obtaining a clearer genetic map of the biosystem, the resulting hybrid kinetic model can become more accurate and efficient, with increased predictive capability. As a result, a new approach to growth kinetic modelling is emerging. The ultimate goal of the project, towards which we are working, is a hybrid kinetic model for double-substrate systems, which is usually the case in waste disposal, combining the microscale and macroscale levels in cell cultures. This new approach could lead to the optimisation of bioprocesses by reducing the cost and labour time of the process and increasing its productivity and efficacy.

New Thermoplastic Composite Preforms for Wavy Structural Products

N. Maragos, A. Bismarck, S. Pistikopoulos, H. Boehm

A novel production method is reported for obtaining anisotropic fibre reinforced polymer composite shells with sinuous corrugations, which may be incorporated in the production of structural products such as skin-stiffened honeycomb. The method is based on thermal processing of carbon fibre reinforced thermoplastic matrix composite laminates and exploits the locked-in residual stresses that arise from the lamina material anisotropy and layup heterogeneity. The design space of linearly connected (tessellated) asymmetric [0n/90n], n ∈ Z+ laminates in the planform was explored to produce unique or multiple equilibrium shapes subject to boundary conditions and the layup of adjacent unit laminates.

Important tessellations are reported based on the repeating unit cell (RUC) layup formed of the [0/90] and [90/0] unit laminates connected uniaxially, i.e. along the axis x that coincides with the local 0°-ply principal material coordinate axis. The aim is to shed light on the processability of the tessellated laminates using different composite production methods, and hence on the selection of appropriate thermoplastic composite precursors and preform designs.

An optimization problem is proposed via a surrogate model fitting to a DOE setup.

Design of Tractable MPC and NCO-Tracking Controllers using Parametric Programming

Muxin Sun, Stratos Pistikopoulos, Benoit Chachuat

The optimization of dynamic processes has received great attention in recent years, driven by the need to reduce economic cost, improve system performance and satisfy the corresponding constraints. Because of the uncertainty stemming from model mismatch and process disturbances, robust optimization or measurement-based optimization methods need to be applied. Traditional methods such as model predictive control and dynamic real-time optimization require considerable computational effort because an optimization problem is solved repeatedly online. In contrast, multi-parametric programming determines the optimal solution of an optimization problem as a function of the parameters, instead of repeatedly solving the optimization problem online. The online control problem can thus be computed efficiently by substituting the explicit solution mapping for the optimization problem. The measurement-based method of NCO tracking enforces optimality by directly tracking the necessary conditions of optimality (NCO) in the presence of uncertainty, transforming a dynamic optimization problem into a feedback control problem. However, the multi-parametric approach has not yet been incorporated into the NCO-tracking method. The current research aims to further develop and unify the theories and algorithms of mp-MPC and NCO tracking, and to exploit techniques for nonlinear systems to make guarantees on optimality loss. An integrated theory of optimization and tailored algorithms will be developed by casting NCO tracking as a multi-parametric dynamic optimization problem.
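
The idea of replacing online optimization with an explicit solution map can be illustrated on a deliberately tiny problem. The sketch below assumes a hypothetical scalar problem, min over u of 0.5 u² + θu subject to -1 ≤ u ≤ 1, whose optimiser is a piecewise-affine function of the parameter θ with three critical regions. It is a toy illustration, not the mp-MPC or NCO-tracking formulation developed in the project; the function names are invented for this example.

```python
def explicit_solution(theta):
    """Piecewise-affine optimiser of min_u 0.5*u**2 + theta*u  s.t. -1 <= u <= 1."""
    if theta < -1.0:      # critical region 1: upper bound u <= 1 is active
        return 1.0
    if theta > 1.0:       # critical region 3: lower bound u >= -1 is active
        return -1.0
    return -theta         # critical region 2: no constraint is active


def online_solution(theta, n_grid=2001):
    """Reference: re-solve the problem for this theta by brute-force search over u."""
    candidates = [-1.0 + 2.0 * i / (n_grid - 1) for i in range(n_grid)]
    return min(candidates, key=lambda u: 0.5 * u * u + theta * u)


for theta in (-2.0, -0.5, 0.0, 0.3, 1.5):
    # The explicit map needs only a region lookup and an affine evaluation.
    print(theta, explicit_solution(theta), online_solution(theta))
```

The explicit map is derived once offline; online, each new parameter value costs only a region lookup and an affine function evaluation, whereas `online_solution` repeats a search for every parameter value.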

Global Sensitivity Analysis and Metamodelling of Nonlinear Models

Sergei Kucherenko, Nilay Shah

Modern industrial models contain a large number of parameters and are often highly non-linear. They also have large uncertainty ranges for the parameters and are computationally expensive to run. Traditional methods for uncertainty and sensitivity analysis are not suitable because of their computational expense, their local nature and the difficulty of interpreting the results. Global sensitivity analysis (GSA) evaluates the effect of an uncertain input while all other inputs are varied as well. It accounts for interactions between variables, and the results do not depend on the stipulation of a nominal point. It can be used to identify key parameters whose uncertainty most strongly affects the output; rank variables in order of importance; fix unessential variables and reduce model complexity; identify functional dependencies; and analyze the efficiency of numerical schemes. We developed new advanced GSA methods, ranging from improved estimates of the variance-based global sensitivity measures (the so-called Sobol’ sensitivity indices) to new derivative-based global sensitivity measures, which are ideal for screening and model reduction.
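
As a rough illustration of variance-based sensitivity measures, the sketch below estimates first-order Sobol’ indices with a standard "pick-freeze" Monte Carlo estimator (two independent sample matrices A and B, and a mixed matrix in which one column of A is replaced by the corresponding column of B). It assumes independent uniform inputs on [0, 1], uses plain pseudo-random sampling rather than Sobol’ sequences, and centres the model output to reduce estimator variance. The test model and all names are hypothetical; this is not one of the improved estimators referred to above.

```python
import random


def model(x):
    """Illustrative additive test model with an inactive third input."""
    return x[0] + 2.0 * x[1] + 0.0 * x[2]


def first_order_sobol(f, dim, n_samples, seed=0):
    """Estimate first-order Sobol' indices S_i = V_i / V for uniform inputs on [0, 1]."""
    rng = random.Random(seed)
    a = [[rng.random() for _ in range(dim)] for _ in range(n_samples)]
    b = [[rng.random() for _ in range(dim)] for _ in range(n_samples)]
    f_a = [f(row) for row in a]
    f_b = [f(row) for row in b]
    mean = sum(f_a) / n_samples
    variance = sum((y - mean) ** 2 for y in f_a) / n_samples
    indices = []
    for i in range(dim):
        acc = 0.0
        for j in range(n_samples):
            mixed = list(a[j])
            mixed[i] = b[j][i]          # row of A with input i taken from B
            acc += (f_b[j] - mean) * (f(mixed) - f_a[j])
        indices.append(acc / n_samples / variance)
    return indices


indices = first_order_sobol(model, dim=3, n_samples=20000)
```

For this additive test model the exact indices are 0.2, 0.8 and 0, so the estimates should rank the second input as the most important and identify the third as unessential.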

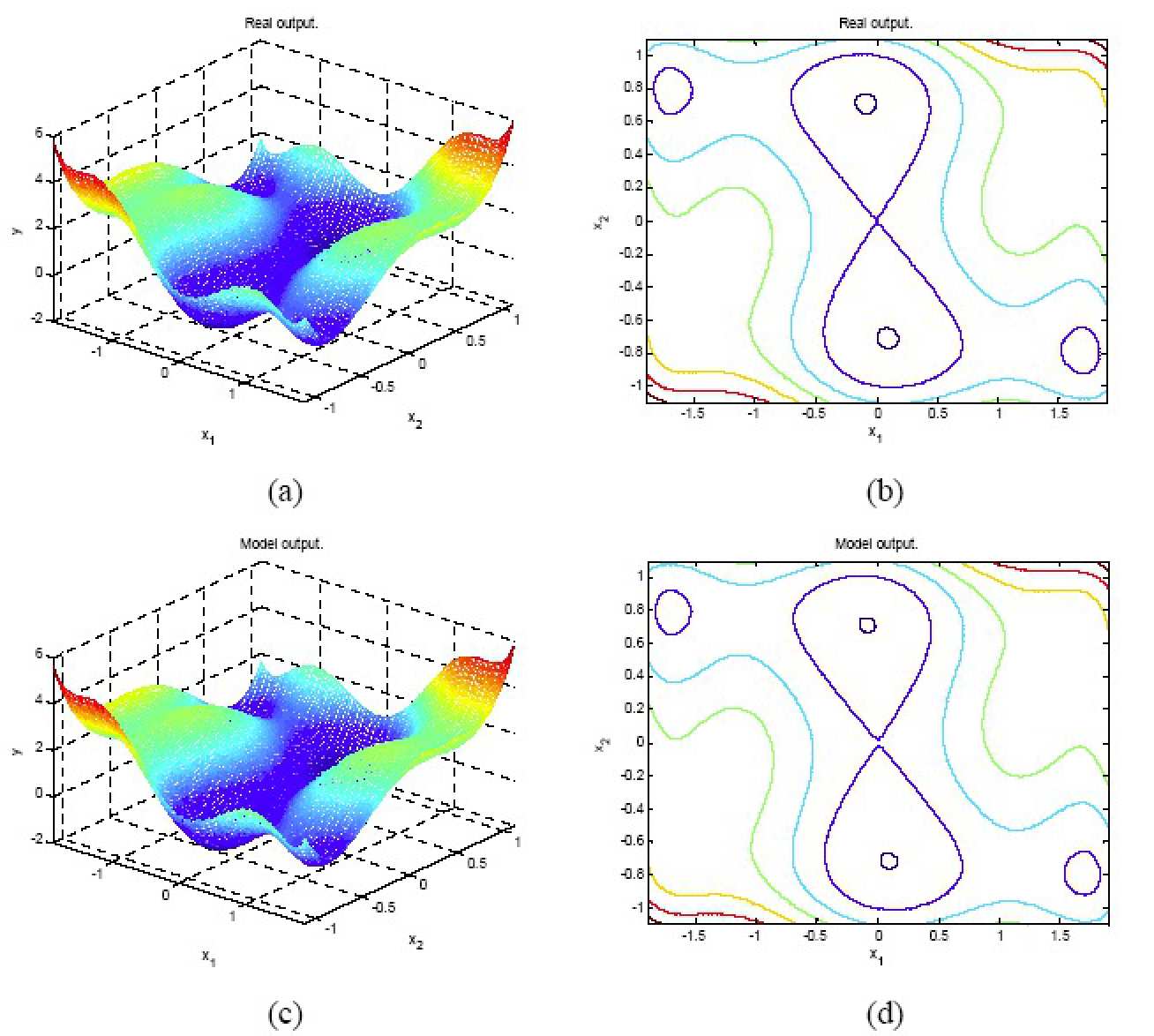

A metamodel is a mathematical function that approximates the outputs of the model at a negligible CPU cost, which allows the user to make new output predictions with good accuracy. It can be used to replace complex, expensive explicit or black-box models that need to be run on-line; for low-cost screening and global sensitivity analysis; to replace expensive objective functions in global optimization; etc.

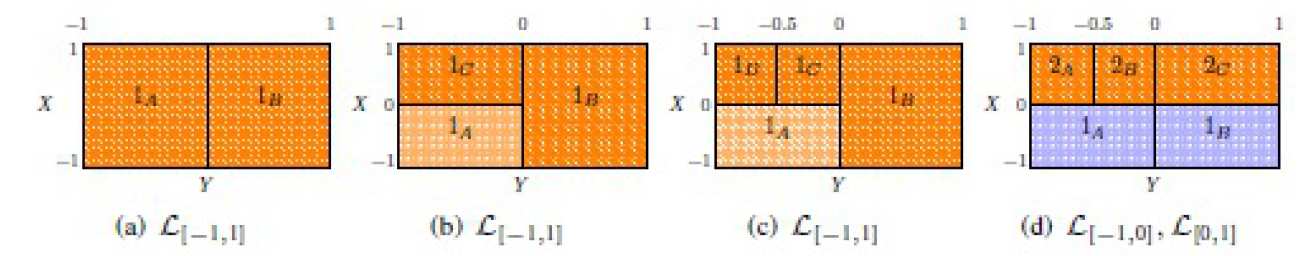

We have developed an efficient approach that applies Quasi Random Sampling with Sobol’ sequences to build High Dimensional Model Representation (HDMR) models for models with independent and dependent inputs. We also constructed high-dimensional Sobol’ sequence generators with additional uniformity properties (Sobol’ sequences are widely used for high-dimensional integration, experiment design, sampling in global optimization problems, etc.). The Quasi Random Sampling-High Dimensional Model Representation (QRS-HDMR) method exploits the fact that for many practical problems only low-order interactions of the input variables are important. It can dramatically reduce the computational time for modeling such systems. The QRS-HDMR approach can be used for building metamodels with significantly reduced complexity in comparison with the original models. This method also provides the values of the Sobol’ sensitivity indices at no extra cost.
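
For reference, the general form of an HDMR expansion and its truncation to low-order terms can be written as follows. This is the standard textbook decomposition for a model with n inputs, given here as background; for independent inputs the variance decomposes term by term, which is why the sensitivity indices follow directly from the component functions. The notation (f_0, f_i, f_ij, D, S_i) is introduced here for illustration.

```latex
f(x) = f_0 + \sum_{i=1}^{n} f_i(x_i)
     + \sum_{1 \le i < j \le n} f_{ij}(x_i, x_j)
     + \dots + f_{12 \dots n}(x_1, \dots, x_n)
\;\approx\;
f_0 + \sum_{i=1}^{n} f_i(x_i) + \sum_{1 \le i < j \le n} f_{ij}(x_i, x_j),

D = \sum_{i} D_i + \sum_{i<j} D_{ij} + \dots, \qquad
S_i = \frac{D_i}{D}, \qquad S_{ij} = \frac{D_{ij}}{D}.
```

Here D is the total output variance and D_i, D_ij are the variances of the corresponding component functions; truncating after the second-order terms is justified when higher-order interactions contribute little to D.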

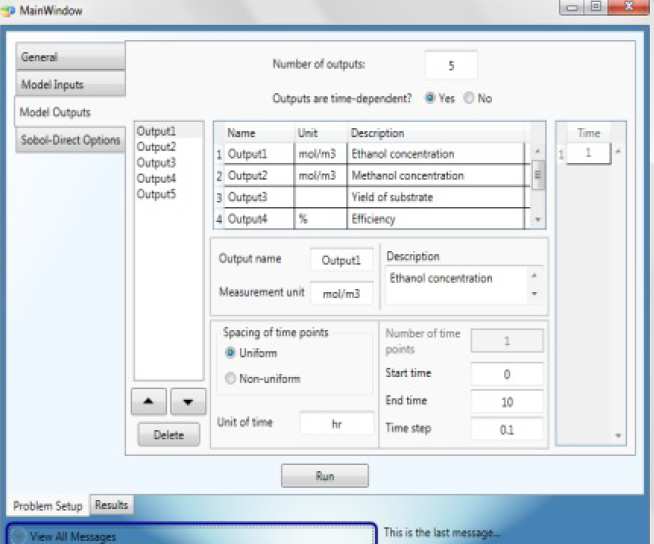

We developed a general-purpose metamodeling software package called SobolHDMR. It is based on a number of metamodeling techniques including QRS-HDMR, the Radial Basis Function method and kriging. SobolHDMR can be used to construct metamodels either from explicitly known models or directly from data given by “black-box” models. Methods implemented in SobolHDMR can deal with models with independent and dependent input variables. SobolHDMR can be applied to both static and time-dependent problems. SobolHDMR can also be used for global sensitivity analysis. The set of available global sensitivity analysis techniques includes variance-based and derivative-based sensitivity measures. The software has a user-friendly GUI for entering inputs and presenting results (Fig. 1). It can be linked to MATLAB and other packages. The produced metamodels are given as self-contained C++ or MATLAB files.

Stochastic Methods for Global Optimization and Metamodel-based Global Optimization

Sergei Kucherenko, Nilay Shah

The solution of nonconvex global optimization problems is one of the hardest areas of optimization. It presents many challenges, both practical and theoretical. There are two types of commonly used techniques for solving such problems: deterministic and stochastic. Deterministic methods guarantee convergence to a global solution. However, for large-scale problems these methods may require a large CPU time. Stochastic search methods yield only an asymptotic guarantee of convergence (in the limit of N going to infinity, where N is the number of randomly sampled points). In reality, problems can only be solved with a limited number of points N, hence convergence to a global solution is not guaranteed. Nevertheless, stochastic methods (although regarded as heuristic) have already shown their efficiency and usefulness in solving large-scale practical problems.

We developed a novel method that combines the advantages of deterministic and stochastic methods. It is based on the application of Quasi Monte Carlo (QMC) sampling methods (Sobol’ sequences) and multi-level single linkage (MLSL) methods. In comparison with pure stochastic methods employing random numbers, the application of QMC sampling significantly decreases the number of points required to achieve the same tolerance in finding the global minimum. The MLSL method consists of three stages: I. Global: an objective function is evaluated on a set of sampled points. Objective: to obtain as much information as possible about the underlying problem with a minimum number of sampled points; II. Local: a deterministic local search method is applied to selected points. Objective: to solve a local constrained minimization problem; III. Multilevel: a clustering technique and stopping criteria are used. Objective: to find all global and local minima without finding the same local minimum more than once.
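
The three stages above can be sketched on a one-dimensional toy problem. The code below is a heavily simplified illustration, not the SobolOpt implementation: it assumes a hypothetical objective with two minima, uses the base-2 van der Corput sequence as a stand-in for a Sobol’ sequence, uses a fixed critical distance instead of one that shrinks with the sample size, uses projected gradient descent as the local solver, and omits stopping criteria. All names and parameter values are invented for this example.

```python
def van_der_corput(n, base=2):
    """n-th point of the van der Corput low-discrepancy sequence in [0, 1)."""
    q, denom = 0.0, 1.0
    while n > 0:
        n, digit = divmod(n, base)
        denom *= base
        q += digit / denom
    return q


def objective(x):
    return x ** 4 - 4.0 * x ** 2 + x


def gradient(x):
    return 4.0 * x ** 3 - 8.0 * x + 1.0


def local_search(x, lower, upper, step=0.01, iterations=2000):
    """Projected gradient descent standing in for a deterministic local solver."""
    for _ in range(iterations):
        x = min(upper, max(lower, x - step * gradient(x)))
    return x


def multistart(n_points=64, keep_fraction=0.5, radius=0.5, lower=-3.0, upper=3.0):
    # Stage I (global): evaluate the objective on quasi-random sample points.
    points = [lower + (upper - lower) * van_der_corput(k) for k in range(n_points)]
    reduced = sorted(points, key=objective)[: int(keep_fraction * n_points)]
    minima = []
    for x in reduced:
        # Stage III (linkage rule): skip x if a better sample point lies close by.
        if any(abs(x - y) < radius and objective(y) < objective(x) for y in reduced):
            continue
        # Stage II (local): start a local search from the selected point.
        x_min = local_search(x, lower, upper)
        if all(abs(x_min - m) > 1e-4 for m in minima):
            minima.append(x_min)
    return sorted(minima, key=objective)


minima = multistart()
```

The linkage rule is what prevents the same local minimum from being found repeatedly: a local search is started only from sample points that have no better-valued neighbour within the critical distance, so each basin of attraction triggers few local searches.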

We developed a general purpose global optimization solver SobolOpt based on the developed method. It is linked with modeling systems GAMS and MATLAB. The solver is capable of solving complex constrained global optimization problems with continuous or mixed-integer variables.

Optimization of complex products or processes can be very demanding from a computational point of view. In many cases there is a need for simplified models that can provide an accurate representation of the detailed and costly objective functions and/or sets of constraints. These simplified models, known as metamodels, can be orders of magnitude cheaper to evaluate than the original models. Such an approach is also invaluable when the model is not given explicitly but exists only in the form of input-output maps or is given as a “black box”. Another advantage of metamodel-based design optimization is that building metamodels may filter out physical high-frequency and numerical noise, which can improve the quality of the optimization results. We showed that the global metamodelling approach based on the QRS-HDMR method is capable of dealing with high-dimensional, multimodal problems of low or moderate complexity, and that its performance can be further enhanced by combining it with other metamodelling techniques such as Radial Basis Functions and kriging.

The Branch-and-Sandwich algorithm for bilevel optimisation

Polyxeni-Margarita Kleniati, Claire S. Adjiman

In many contexts, decision-making involves reconciling different objectives or the needs of different entities. For example, in production planning, one may aim to manufacture a series of products that come as close as possible to specified quality targets, with the overarching objective of minimising production costs. Alternatively, in the context of thermodynamic model development, the objective is to find a set of parameters that yield the best possible match between model and experimental data. The evaluation of the model performance often requires the determination of stable equilibrium phases, which entails the solution of Gibbs free energy minimisation(s). Problems of this type thus involve one or more optimisation problems embedded within a higher-level optimisation problem; they fall within the class of bilevel optimisation problems.
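
For orientation, a generic bilevel problem of the kind described above can be written in the following (optimistic) form. The symbols are introduced here for illustration and do not necessarily match the notation used in the Branch-and-Sandwich publications: x denotes the outer (upper-level) variables, y the inner (lower-level) variables, F and G the outer objective and constraints, and f and g the inner objective and constraints.

```latex
\begin{aligned}
\min_{x,\,y} \quad & F(x, y) \\
\text{s.t.} \quad & G(x, y) \le 0, \\
& y \in \arg\min_{y'} \left\{ f(x, y') \;:\; g(x, y') \le 0 \right\}.
\end{aligned}
```

In the thermodynamic example, x would play the role of the model parameters being fitted, while the inner problem in y would represent the Gibbs free energy minimisation that determines the stable phases for those parameters.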

This class of problems is extremely challenging, especially when the functions involved are nonlinear and when integer variables are present, as is often the case in process systems engineering applications. Over the past few years, we have been developing an algorithm to address this class of problems: the Branch-and-Sandwich algorithm, which has recently been published in the Journal of Global Optimization, is guaranteed to correctly identify the global solution of nonlinear bilevel problems.

The incorporation of integer variables within the algorithmic framework has also been achieved. The algorithm is based on a branch-and-bound framework in which an unusual branching scheme is introduced. This allows the simultaneous exploration of the two levels of optimisation and has led to promising results on a series of over 40 test cases.

Global Optimisation of Dynamic Process Systems

Carlos Perez-Galvan, Bogle; CONACyT and UCL

In chemical process design it is often desirable to know the optimal conditions for a given time-dependent process described by a system of ODEs. This is useful in applications such as parameter estimation, model predictive control, the design of control systems and the operating profiles of batch processes. The project aims to develop techniques that ensure that a globally optimal solution to problems such as maximising the yield of a particular output variable, or minimising the cost associated with a particular disturbance, also guarantees bounded performance across the whole dynamic trajectory.

There are several options for addressing the dynamic optimisation problem rigorously. The sequential approach involves three main steps, of which finding efficient and tight bounds for the ODE system is the most challenging, because only low-dimensional problems with small uncertainties can be solved with the available methods. Much work has been done on this aspect, and improvements in methods such as Taylor models and McCormick relaxations have been devised. One of the main issues in ODE integration is the overestimation generated by the so-called validated methods. This overestimation is the product of the dependency problem and the wrapping effect that arise in interval analysis. Consequently, it prevents these methods from being used for higher-dimensional problems and for ODE models with significant uncertainties.

We are exploring ways to reduce the overestimation after it has been generated, using interval analysis and interval contractors that help tackle the overestimation problem. Another alternative is the use of so-called consistency techniques. Alternatively, we can deal with overestimation before it arises through model reformulation; for example, the dependency problem can be reduced by minimising the number of times a variable appears in a model. A tool that systematically applies strategies for reducing interval overestimation is a promising idea for the validated solution of ODEs.
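
The dependency problem and the benefit of reformulation can be seen in a small interval-arithmetic sketch. The `Interval` class below is a hypothetical minimal implementation written for this example (it ignores outward rounding, which a validated solver would require). It evaluates x(1 - x) on x ∈ [0, 1] in two algebraically equivalent forms: the original form, in which x appears twice, and the reformulated form 0.25 - (x - 0.5)², in which x appears once.

```python
class Interval:
    """Minimal closed interval [lo, hi]; no outward rounding (illustration only)."""

    def __init__(self, lo, hi):
        self.lo, self.hi = lo, hi

    def __sub__(self, other):
        return Interval(self.lo - other.hi, self.hi - other.lo)

    def __mul__(self, other):
        # Treats the two operands as independent, even if they are the same variable.
        products = [self.lo * other.lo, self.lo * other.hi,
                    self.hi * other.lo, self.hi * other.hi]
        return Interval(min(products), max(products))

    def square(self):
        """Tight enclosure of x**2, using the fact that both factors are the same x."""
        lo, hi = sorted((abs(self.lo), abs(self.hi)))
        if self.lo <= 0.0 <= self.hi:
            lo = 0.0
        return Interval(lo * lo, hi * hi)

    def __repr__(self):
        return f"[{self.lo}, {self.hi}]"


x = Interval(0.0, 1.0)

# x appears twice: the dependency problem inflates the enclosure.
naive = x * (Interval(1.0, 1.0) - x)

# x appears once: the enclosure coincides with the true range of x*(1 - x).
reformulated = Interval(0.25, 0.25) - (x - Interval(0.5, 0.5)).square()

print(naive)         # [0.0, 1.0]
print(reformulated)  # [0.0, 0.25]
```

The true range of x(1 - x) on [0, 1] is [0, 0.25], so the naive evaluation overestimates the width by a factor of four while the single-occurrence form is exact.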

Improved Fleet Management Using Operations Research

Floudas, Gounaris, Repoussis, Tarantilis, Wiesemann

Fleet management is a business function that is concerned with the management of a company’s transportation fleet, including trucks, rail cars, ships and planes. Amongst the many goals of fleet management are the prudent investment in company vehicles, as well as the continuous improvement of the efficiency, effectiveness and safety of the existing fleet. It is estimated that with an effective fleet management system in place, companies can on average reduce their fleet size by 15-25%, reduce their maintenance costs by 10-20% and improve fuel consumption by 8-12% (Accenture: Federal Fleet Management).

Similar to other business functions, fleet management decisions are taken under considerable uncertainty about the current conditions and future market developments. Informed decision making in fleet management therefore requires the collection and analysis of data from multiple sources, such as inventory levels, customer demands, traffic conditions and the state of the current fleet. Using the data, (sets of) probability distributions can be constructed that truthfully reflect important characteristics of the involved business processes.

Decision-making under uncertainty has a long and distinguished history in operations research, with its origins dating back to the 1950s (Markov decision processes) and 1960s (stochastic programming). To date, the predominant paradigm is to model the uncertain data as random variables and then solve the decision problem by discretising the outcomes of these random variables. For multi-stage (i.e., dynamic) problems, this discretisation results in the classical curse of dimensionality, which implies that the computation times grow exponentially with problem size. This computational burden has been a major impediment to the applicability of these methods, and it became a widely held belief that multi-stage decision-making under uncertainty is an inherently intractable endeavour.

Together with scientists from Athens University of Economics and Business, Carnegie Mellon University, Princeton University and Stevens Institute of Technology, researchers at the Sargent Centre for Process Systems Engineering are developing models and tools to determine prudent fleet management decisions that allow for probabilistic guarantees in view of the uncertainty about future customer demands and traffic conditions. The focus is on the development of methods that do not discretise the probability distributions involved. The resulting methods avoid the curse of dimensionality and thus scale to industry-size problems.

Multilevel Algorithms for Speeding up Molecular Dynamics Simulations

Chin Pang Ho, Cecilia Clementi, Charles Laughton, Panos Parpas

It is often possible to exploit the structure of large-scale optimization models to develop algorithms with lower computational complexity. A noteworthy example is the class of composite convex optimization models, which consist of the minimization of the sum of a smooth convex function and a non-smooth (but simple) convex function. Composite convex optimization models arise in a wide range of applications, from computer science (e.g. machine learning) and statistics (e.g. the lasso problem) to engineering (e.g. signal processing) and computational chemistry (e.g. molecular conformation problems).
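
In symbols, a composite convex model and the lasso problem as one instance of it can be written as follows. The notation (F, f, g, A, b, λ) is introduced here for illustration.

```latex
\min_{x \in \mathbb{R}^n} \; F(x) = f(x) + g(x),
\qquad \text{e.g. the lasso:} \quad
f(x) = \tfrac{1}{2}\,\| A x - b \|_2^2, \quad
g(x) = \lambda \, \| x \|_1, \quad \lambda > 0.
```

Here f is smooth and convex, while g is convex and non-smooth but "simple" in the sense that it can be handled cheaply on its own; for the ℓ1 norm, for instance, the associated proximal step reduces to component-wise soft thresholding.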

In addition to the composite form of the objective function, many of the applications described above share another common structure: the fidelity with which the optimization model captures the underlying application can often be controlled. Typical examples include the discretization of partial differential equations in optimal control, the number of features in machine learning applications, the number of states in a Markov decision process, or the number of collective variables in a molecular dynamics simulation. Indeed, whenever a finite-dimensional model arises from an infinite-dimensional model, it is straightforward to define such a hierarchy of optimisation models. In many areas it is common to take advantage of this structure by solving a low-fidelity (coarse) model and using its solution as the starting point for the high-fidelity (fine) model.

Together with colleagues we have been working on an algorithmic framework in order to take advantage of the availability of a hierarchy of models in a consistent manner for a variety of classes of optimisation models. Recent work includes developments in nonsmooth optimisation, semidefinite programming and global optimisation.

Mixed-Integer Optimisation

Vivek Dua

The developments here include the reformulation of mixed-integer optimisation problems as multi-parametric programs. This is achieved by relaxing the integer variables to continuous variables and then treating them as parameters. The optimal solution of the multi-parametric program is obtained as a function of the parameters. Fixing the parameters at the integer values provides a list of potential solutions, from which the best solution or the set of best solutions is selected as the final solution. The main contribution lies in not fully solving the multi-parametric programs and, where possible, exploiting the existence of specific types of nonlinearities.
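
The reformulation can be illustrated on a hypothetical toy problem: minimise (x - y)² + x + (y - 2.4)² over a continuous x ≥ 0 and an integer y ∈ {0, 1, 2, 3}. Relaxing y to a continuous parameter θ gives a parametric problem in x whose optimiser is known in closed form, x*(θ) = max(θ - 0.5, 0). Unlike the approach described above, this sketch solves the toy parametric program completely, which is only feasible because the example is so small; the problem and all names are assumptions made for this example.

```python
def parametric_solution(theta):
    """Explicit optimiser and optimal value of the relaxed problem as functions of theta."""
    if theta >= 0.5:      # region where the bound x >= 0 is inactive
        x_opt = theta - 0.5
    else:                 # region where the bound x >= 0 is active
        x_opt = 0.0
    value = (x_opt - theta) ** 2 + x_opt + (theta - 2.4) ** 2
    return x_opt, value


def solve_mixed_integer(integer_values=(0, 1, 2, 3)):
    """Fix the parameter at each integer value and keep the best candidate."""
    candidates = []
    for y in integer_values:
        x_opt, value = parametric_solution(float(y))
        candidates.append((value, y, x_opt))
    return min(candidates)


best_value, best_y, best_x = solve_mixed_integer()
```

Evaluating the explicit map at the four integer values gives the candidate list from which the final solution (here y = 2, x = 1.5) is selected, without solving a separate optimisation problem for each integer combination.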

Ion Transport Modelling for Cystic Fibrosis

Donal O’Donoghue, Vivek Dua, Guy Moss, Paola Vergani; CoMPLEX, UCL

Cystic fibrosis (CF) is a genetic disease of the lung. We have developed a mathematical model of ion transport for CF patients. This model shows that loss of apical chloride permeability alone cannot explain the observed voltage data; an increased apical sodium permeability must also take place. This insight opens up new avenues for potential CF therapies.
