3D scanning my head to further virtual reality research

I got my head 3D scanned as part of the Imperial-led SONICOM project – here's my experience and how it contributes to virtual reality research.

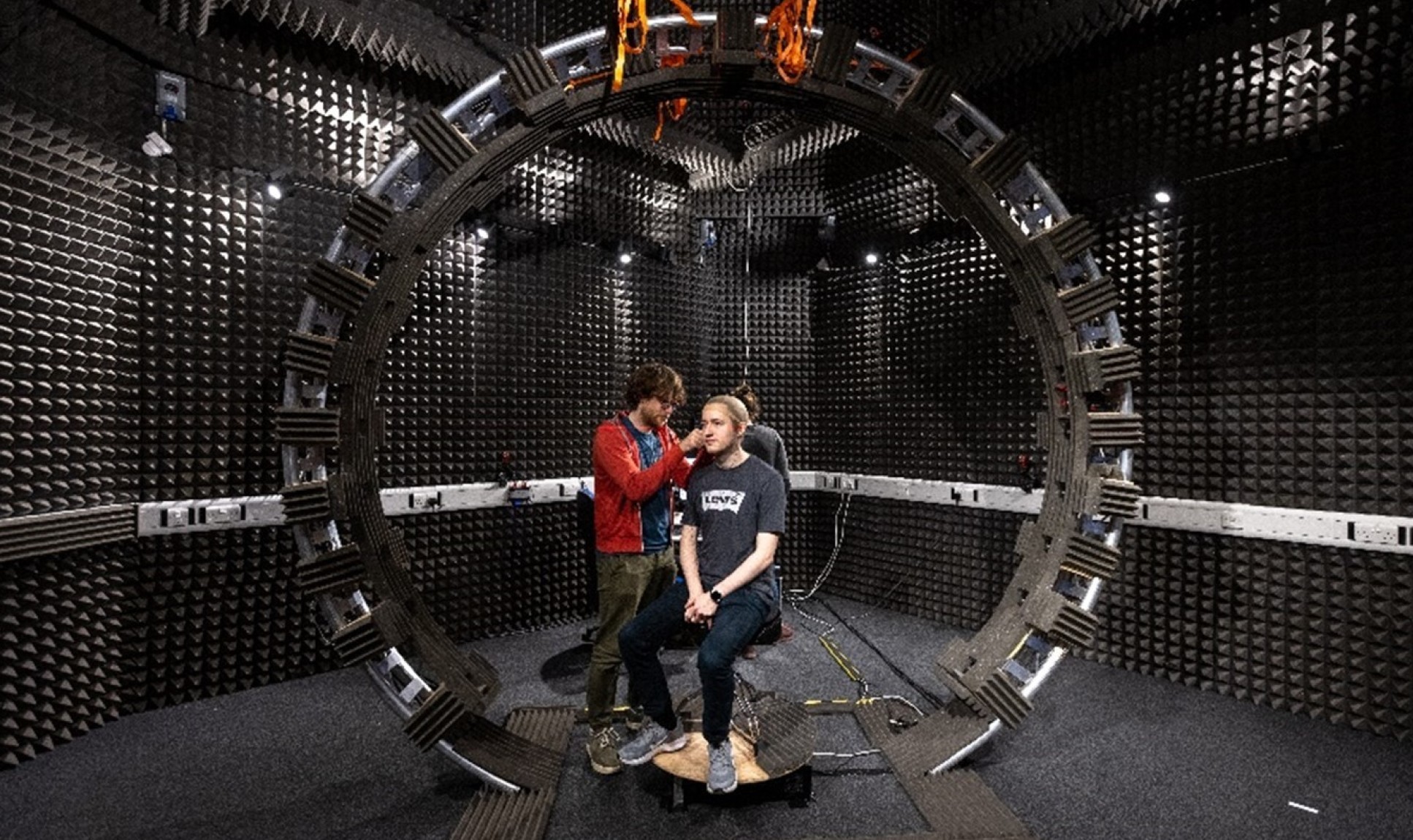

At the top of an ordinary metal staircase around the back of Imperial’s Dyson School of Design Engineering is a room unlike any other I’ve ever been in. Sitting in what feels like a sound-proofed chapel is a contraption that wouldn’t look out of place in a Star Trek episode.

This is the Turret Lab, a room kitted out especially for the SONICOM project as part of its research to develop immersive audio technologies for virtual and augmented reality (VR/AR). Coordinated by Imperial’s Dr Lorenzo Picinali, the Audio Experience Design team is looking to use artificial intelligence (AI) to develop tools that can personalise the way sound is delivered in virtual and augmented reality so that it is as similar to real-world sound as possible.

The SONICOM project is managed by Imperial's Research Project Management team, and as their new Science Communication Officer, I decided to volunteer as part of the study. What better way to get to know a project than to have a 3D rendering of your head created as part of it?

Measuring how my ears receive sound

I was greeted by SONICOM PhD student Rapolas Daugintis and postdoc Dr Aidan Hogg, who guided me through what was going to happen. They invited me to sit on the chair in the centre of the apparatus, which was apparently not an interdimensional gateway but a device that would measure something called my ‘head-related transfer function’ (HRTF).

Basically, an HRTF is a mathematical description of the unique way someone’s ears receive sound. Because everyone’s head, ears and shoulders are a different size and shape, the way that sound waves enter the ears differs from person to person.

Recording someone’s HRTF and using it when delivering sound through headphones is the key to making ‘binaural’ audio. This is the kind of audio we experience in real life, where we can tell where a sound is coming from. It works because we have one ear on each side of our heads, so we can notice slight differences in what each ear receives.

Using an HRTF means the headphones can reproduce ‘3D sounds’ that seem to come from a specific direction, such as off in the distance above you, or right behind you as if someone were whispering in your ear.
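For the technically curious, here is a minimal Python sketch of the general idea. It assumes the HRTF is available as a pair of time-domain filters (often called head-related impulse responses), one per ear, and filters a mono sound through each. The function name `render_binaural` and the toy filter values are made up for illustration; they are not measured data or SONICOM's own software.

```python
import numpy as np

def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal with a left/right impulse-response pair."""
    left = np.convolve(mono, hrir_left)
    right = np.convolve(mono, hrir_right)
    return np.stack([left, right], axis=0)  # shape: (2, samples)

# Toy impulse responses (invented for illustration, NOT measured data):
# a sound from the listener's left reaches the left ear first and louder.
hrir_left = np.zeros(32)
hrir_left[0] = 1.0
hrir_right = np.zeros(32)
hrir_right[20] = 0.5  # arrives 20 samples later, at half the amplitude

# A single click as the mono input signal.
click = np.zeros(100)
click[0] = 1.0

stereo = render_binaural(click, hrir_left, hrir_right)
```

Played over headphones, the later and quieter copy in the right channel is the kind of cue that makes a sound seem to come from the left. A measured HRTF encodes far more detail than this toy delay and volume change.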

Apparently, the process of measuring this complex mathematical function involved putting a stocking on my head, sticking some microphones in my ears, and keeping very still whilst sitting on a chair that slowly rotated as sounds were played from loudspeakers all around me.

This might not sound like everyone’s idea of a good time, but the novelty of this bizarre series of events meant it was actually quite enjoyable. And at the end of it, the team had measured my own personal HRTF.

Matching the mathematical with the physical

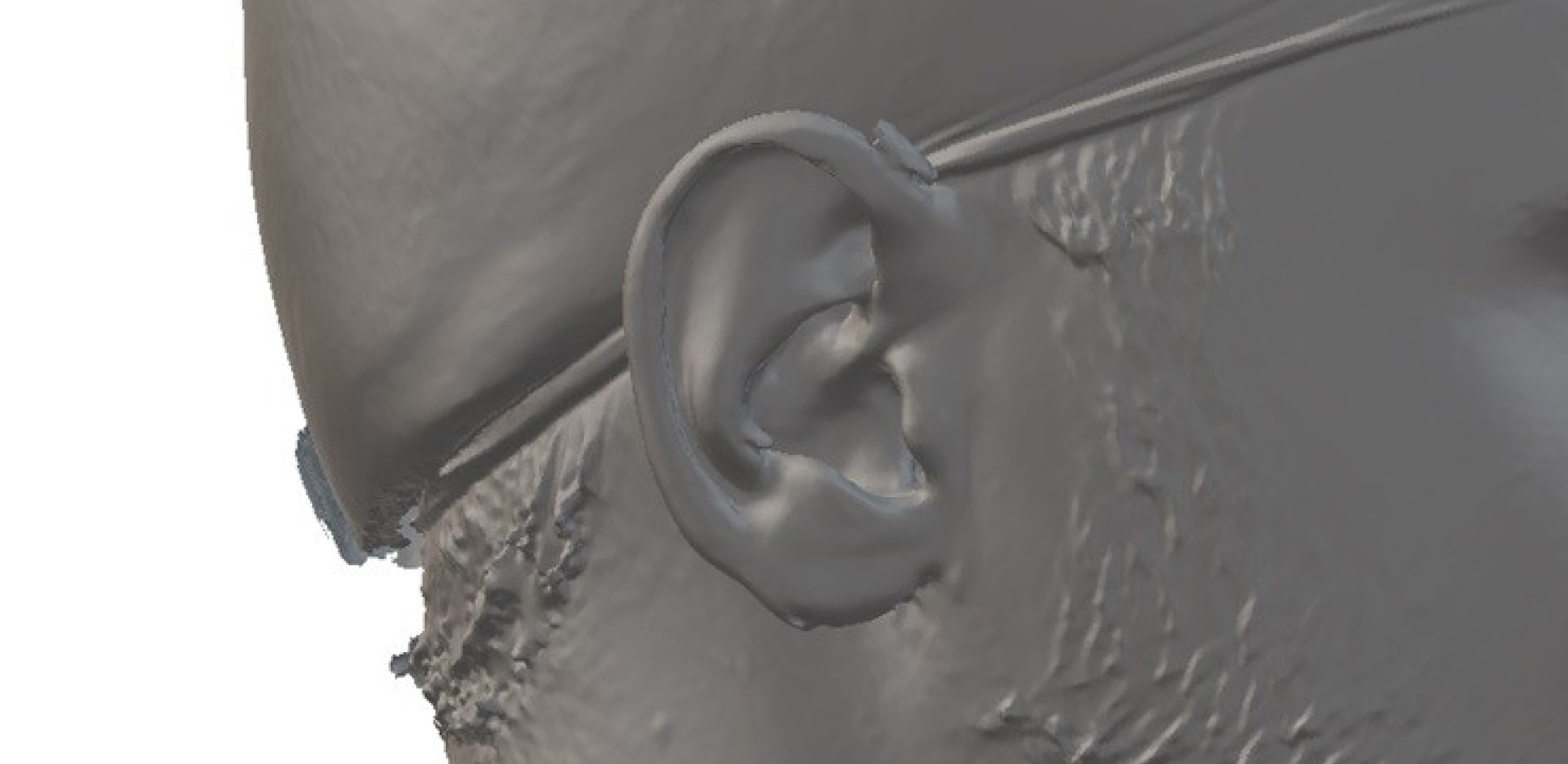

Measuring individual HRTFs is all well and good, and is the best way to deliver immersive binaural audio, but realistically, it’s not feasible for everyone to have their very own HRTF measured to achieve this. This is where a 3D scan of my head comes in.

SONICOM wants to connect the mathematical nature of HRTFs with the physical shapes of ears. This means, for example, that you could record your ear shape and then AI could use this to choose an HRTF that it believes would be closest to your own to deliver realistic sound.
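One very simple way to picture that kind of matching is a nearest-neighbour lookup: describe each ear with a few numbers, then pick the person in a database whose numbers are closest. The Python sketch below is only an illustration under that assumption. The ear measurements, subject names and the `closest_hrtf` function are all hypothetical, and the approach SONICOM actually uses may be quite different.

```python
import numpy as np

# Hypothetical database: each row is (ear height in mm, ear width in mm)
# for a person whose HRTF has already been measured. Values are invented.
database_features = np.array([
    [60.0, 30.0],
    [65.0, 33.0],
    [70.0, 36.0],
])
database_ids = ["subject_a", "subject_b", "subject_c"]

def closest_hrtf(ear_features):
    """Return the ID of the database entry with the most similar ear."""
    differences = database_features - np.asarray(ear_features)
    distances = np.linalg.norm(differences, axis=1)
    return database_ids[int(np.argmin(distances))]

# A new listener's (made-up) ear measurements.
match = closest_hrtf([66.0, 32.0])
```

Here the new listener would be given the HRTF measured for whichever subject has the most similar ear dimensions.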

This doesn’t mean everyone would need their personal 3D scanner either (although I now really want one). The team took a scan of my head with a handheld high-quality 3D scanner, but also took multiple pictures of my head from different angles with just a phone camera.

By linking all these different pieces of data together – HRTFs, 3D scans, and static photos – one day, someone could sign up to a virtual concert, snap a few photos of their ears, and AI could then make sure their experience sounds as similar to an in-person venue as possible.

The work that the members of SONICOM are doing is incredibly exciting, and if the past few years have proven anything, it’s that virtual experiences are here to stay – so let’s make them as realistic as possible.

Want to have your own HRTF recorded and head 3D scanned? The SONICOM project are always looking for new volunteers to add to their database! If interested, get in touch with Dr Picinali.

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Harry Jenkins

Enterprise