Here, we describe one part of the MAESTRO backbone that we focused on during the first phase of this project: the development of a bespoke platform for holistic sensing of the patient, the staff, and the operating room.

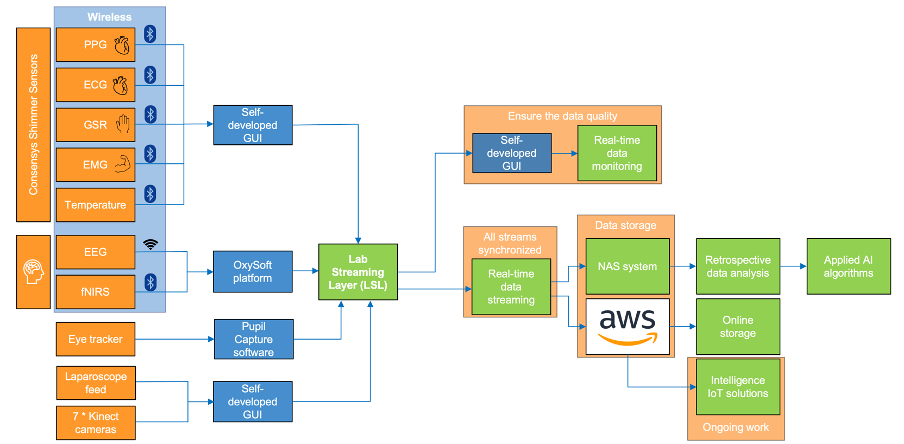

The schematic in Figure 2.1 provides an overview of the back-end MAESTRO platform. Multimodal sensor data streams are collected over an interconnectivity backbone, then anonymised and synchronised. Sensing modalities include surgical camera video and first-person-view video from wearable eye-tracking glasses; several wireless wearable physiological sensors (PPG, ECG, GSR, EMG, skin temperature, eye tracking and pupillometry); wireless functional neuroimaging sensors (EEG, fNIRS); and distributed depth cameras (RGB-D). The synchronised streams are transferred over a secure connection to the cloud, where a continual-learning AI process enables adaptation to new sites, partnering teams, ORs, and procedures.

Figure 2.1 Architecture (Back-end) of the MAESTRO platform

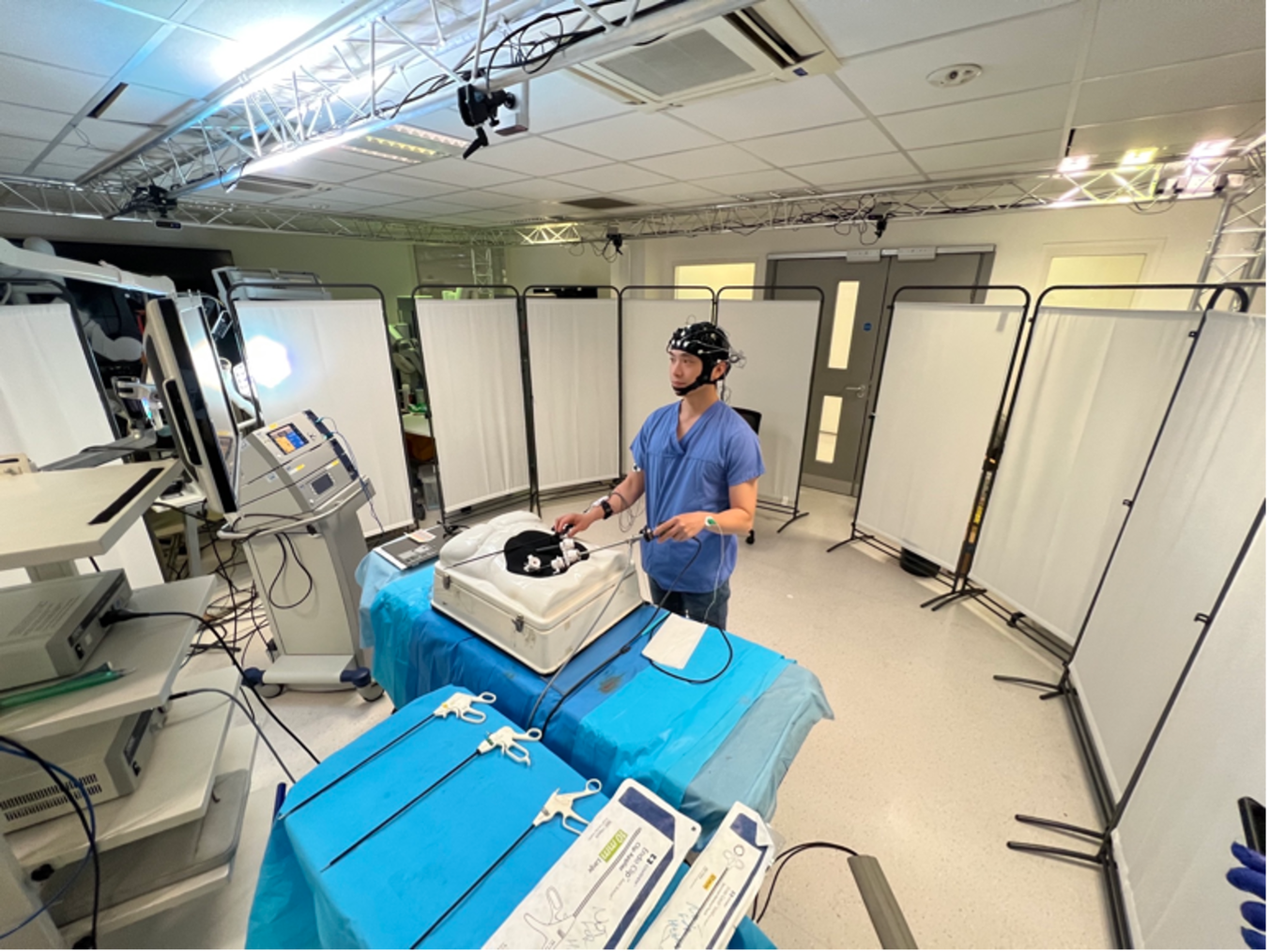

Figure 2.2 The simulated OR at St. Mary’s Hospital used to develop and validate the MAESTRO platform

2.1 Sensors

A set of sensors was selected specifically to capture the surgeon's physiological data, together with specialised video feeds of both the operating room environment and the surgical procedure itself. These data sources fall into the three main categories listed below.

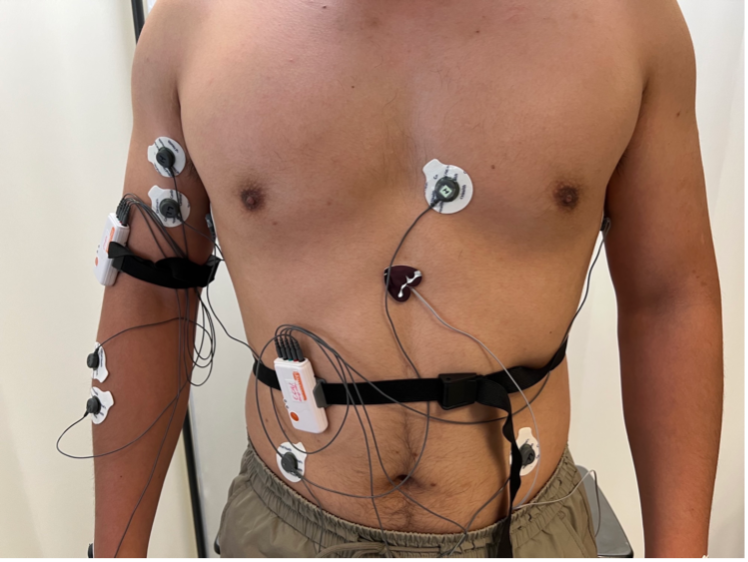

1. General physiological signals, including eye tracking, photoplethysmography (PPG), electrocardiography (ECG), galvanic skin response (GSR), electromyography (EMG) and body temperature. These can potentially be used to measure the surgeon's mental workload, comfort, fatigue and gaze/eye movements.

Figure 2.3 Positioning of the electrodes for electrocardiogram (ECG) measurement used in MAESTRO.

2. Neurophysiological signals, including electroencephalography (EEG) and functional near-infrared spectroscopy (fNIRS). EEG records the electrical activity of the surgeon's brain, while fNIRS tracks changes in cortical blood oxygenation during a task.

Figure 2.4 Left-side view of the EEG and fNIRS sensing system used in MAESTRO.

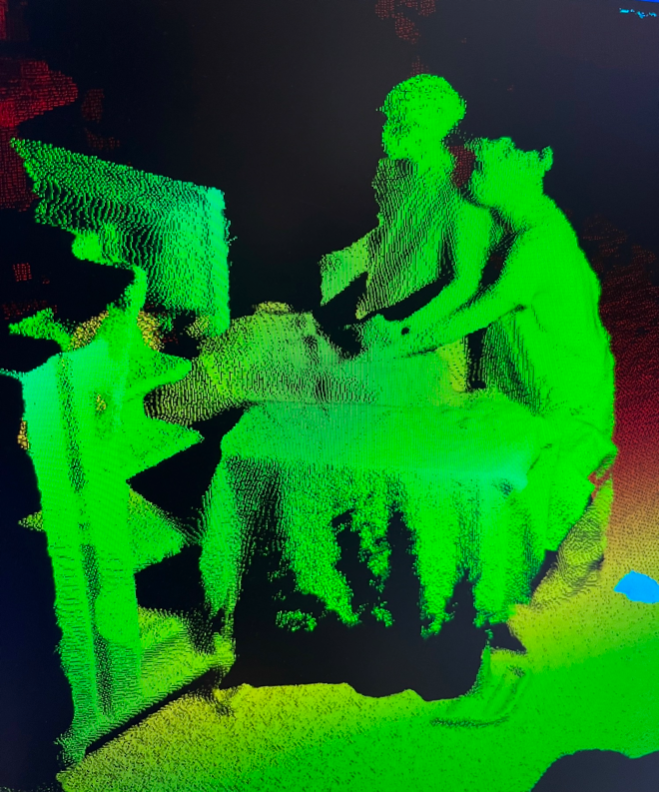

3. Operating room-wide video capture, including video recorded by the eye tracker's scene camera, the laparoscopic video feed, and RGB-D video (i.e., colour images with a corresponding depth value for each pixel). The eye tracker's scene camera records first-person video, a screen recorder captures the laparoscopic video feed, and Kinect cameras positioned around the simulated theatre capture motion and depth information.

Figure 2.5 Point cloud generated for one scene in the operating theatre
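A point cloud like the one in Figure 2.5 can be obtained by back-projecting each depth pixel through a pinhole camera model: $X = (u - c_x)\,Z / f_x$, $Y = (v - c_y)\,Z / f_y$. The sketch below is illustrative only; it assumes a depth map in millimetres stored as nested lists and hypothetical intrinsic parameters (`fx`, `fy`, `cx`, `cy`). In practice these come from each Kinect's calibration, and the MAESTRO pipeline may compute the cloud differently.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_point_cloud(
    depth_mm: List[List[int]], fx: float, fy: float, cx: float, cy: float
) -> List[Point]:
    """Back-project a row-major depth image (millimetres) into 3D points (metres).

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    The intrinsics are assumed inputs, not values from the MAESTRO setup.
    Pixels with depth 0 (no valid reading) are skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth_mm):      # v: pixel row index
        for u, d in enumerate(row):         # u: pixel column index
            if d == 0:
                continue                    # missing/invalid depth
            z = d / 1000.0                  # millimetres -> metres
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Toy 2x2 depth map with hypothetical intrinsics (not a real Kinect calibration).
cloud = depth_to_point_cloud([[1000, 0], [2000, 1000]], fx=500.0, fy=500.0, cx=0.5, cy=0.5)
print(len(cloud))  # 3 (one pixel had no depth)
```

If the colour and depth images are registered to each other, the RGB value at the same pixel can be attached to each point to produce a coloured cloud.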

2.2 Data collection and streaming

Data from all of the modalities above are initially recorded either by their native software or by our self-developed platforms. The main challenge is synchronising these data and streaming them to a single platform. To achieve this, we use Lab Streaming Layer (LSL), an open-source framework for the synchronised collection of time-series data in research experiments.

Two types of data are recorded through LSL. Physiological and neuroimaging signals are stored directly in LSL as time-series data. For video, only the frame number and its corresponding timestamp are stored, which allows each frame to be aligned with the other data streams.
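Because only frame numbers and timestamps are stored for video, a physiological sample can be matched to a video frame by finding the frame whose timestamp is closest to the sample's timestamp. The sketch below shows that lookup using Python's standard `bisect` module. It assumes the frame markers are already expressed on a common clock (LSL provides clock-offset estimates for this) and sorted by time; the function name and the `(frame_number, timestamp)` layout are hypothetical.

```python
from bisect import bisect_left
from typing import List, Tuple


def nearest_frame(frame_markers: List[Tuple[int, float]], t: float) -> int:
    """Return the frame number whose timestamp is closest to time t (seconds).

    frame_markers: (frame_number, timestamp) pairs sorted by timestamp,
    e.g. read from a video marker stream (hypothetical layout).
    """
    if not frame_markers:
        raise ValueError("no frame markers")
    times = [ts for _, ts in frame_markers]
    i = bisect_left(times, t)               # first marker with timestamp >= t
    if i == 0:
        return frame_markers[0][0]          # t is before the first frame
    if i == len(times):
        return frame_markers[-1][0]         # t is after the last frame
    before, after = frame_markers[i - 1], frame_markers[i]
    # Choose whichever neighbouring frame is closer in time.
    return before[0] if t - before[1] <= after[1] - t else after[0]


# 30 FPS video: frames 0..4 timestamped every 1/30 s starting at t = 100.0 s.
markers = [(n, 100.0 + n / 30) for n in range(5)]
print(nearest_frame(markers, 100.06))  # 2 (frame 2 is at ~100.067 s)
```

For long recordings, the `times` list would normally be built once and reused rather than rebuilt on every lookup.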

When collecting data from this many sensors and cameras, it is crucial to detect signal dropouts or sensor connection problems as soon as they occur. We have therefore developed a dedicated graphical user interface (GUI) that displays this information in real time.
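One simple check that a monitoring tool of this kind could run on each stream is to compare consecutive sample timestamps against the stream's nominal sampling rate (for example, 512 Hz for the ECG in Table 2.4) and flag any interval much longer than one sample period. The sketch below is an assumption-laden illustration, not the MAESTRO GUI's actual logic: it takes timestamps in seconds, and the 1.5-period `tolerance` is a hypothetical threshold.

```python
from typing import List, Tuple


def find_dropouts(
    timestamps: List[float], nominal_rate_hz: float, tolerance: float = 1.5
) -> List[Tuple[float, float]]:
    """Return (start, end) timestamp pairs where the gap between consecutive
    samples exceeds `tolerance` times the nominal sample period.

    `tolerance` is an illustrative default, not a value used by MAESTRO.
    """
    period = 1.0 / nominal_rate_hz
    gaps: List[Tuple[float, float]] = []
    for prev, curr in zip(timestamps, timestamps[1:]):
        if curr - prev > tolerance * period:
            gaps.append((prev, curr))
    return gaps


# Simulated 512 Hz ECG timestamps with samples 100-119 missing.
rate = 512.0
ts = [i / rate for i in range(100)] + [i / rate for i in range(120, 200)]
print(find_dropouts(ts, rate))  # [(0.193359375, 0.234375)]
```

In a live setting, the same comparison can be applied incrementally to each newly received chunk, and a stream that delivers no samples at all for longer than a chosen timeout can be reported as disconnected.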

2.3 Data storage

All data are stored on our network-attached storage (NAS) system, from which they can be accessed directly by data analysis and AI algorithms.

In addition to local NAS storage, we have collaborated extensively with Amazon Web Services (AWS) on secure cloud storage. We are currently working with AWS to store and analyse data efficiently and to deploy the platform across multiple secure networks and operating rooms.

2.4 Technical specifications

Table 2.4 lists the technical specifications of the sensors currently used with the MAESTRO platform.

Table 2.4 Technical specifications of the MAESTRO sensors

| Sensor | Manufacturer | Specifications | Connection | Sampling rate |
|---|---|---|---|---|
| ECG | Shimmer | Four-lead | Bluetooth | 512 Hz |
| PPG | Shimmer | 1 channel | Bluetooth | |
| GSR | Shimmer | 2 channels | Bluetooth | |
| EMG | Shimmer | 2 channels | Bluetooth | |
| Body temperature | Shimmer | 1 channel | Bluetooth | |
| EEG | TMSi | 32 channels | Wi-Fi | |
| fNIRS | Artinis | 22 channels | Bluetooth | |
| Laparoscope video feed | | | Wired | 30 FPS |
| Kinect cameras | Microsoft | 7 views | Wired | 30 FPS |
| Eye tracker | Pupil Labs | 2 eye cameras, 1 first-person-view (scene) camera | Wired | |

Contact Us

The Hamlyn Centre

Bessemer Building

South Kensington Campus

Imperial College

London, SW7 2AZ